How to Write a Jupyter Notebook Extension

Document worth reading: “A Short Introduction to Local Graph Clustering Methods and Software”

Graph clustering has many important applications in computing, but due to the increasing sizes of graphs, even traditionally fast clustering methods can be computationally expensive for real-world graphs of interest. Scalability problems led to the development of local graph clustering algorithms that come with a variety of theoretical guarantees. Rather than return a global clustering of the entire graph, local clustering algorithms return a single cluster around a given seed node or set of seed nodes. These algorithms improve scalability because they use time and memory resources that depend only on the size of the cluster returned, instead of the size of the input graph. Indeed, for many of them, their running time grows linearly with the size of the output. In addition to scalability arguments, local graph clustering algorithms have proven to be very useful for identifying and interpreting small-scale and meso-scale structure in large-scale graphs. As opposed to heuristic operational procedures, this class of algorithms comes with strong algorithmic and statistical theory. These include statistical guarantees that prove they have implicit regularization properties. One of the challenges with the existing literature on these approaches is that they are published in a wide variety of areas, including theoretical computer science, statistics, data science, and mathematics. This has made it difficult to relate the various algorithms and ideas together into a cohesive whole. We have recently been working on unifying these diverse perspectives through the lens of optimization as well as providing software to perform these computations in a cohesive fashion. In this note, we provide a brief introduction to local graph clustering, we provide some representative examples of our perspective, and we introduce our software named Local Graph Clustering (LGC).
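To make the local idea concrete, here is a minimal Python sketch of one classic local method: the push procedure of Andersen, Chung, and Lang for approximate personalized PageRank, followed by a sweep cut that keeps the prefix with the lowest conductance. It is a textbook illustration under stated assumptions (an unweighted, undirected graph stored as a dict of neighbor lists, and a seed with at least one neighbor), not the LGC software's API. The function names `acl_push` and `sweep_cut` and the parameter values `alpha=0.15` and `eps=1e-4` are hypothetical choices for this example. The push loop only touches nodes that receive residual mass, and each push removes at least `alpha * eps * deg(u)` of the residual, so its total work is bounded by roughly `1 / (alpha * eps)` regardless of the graph's size.

```python
from collections import deque


def acl_push(adj, seed, alpha=0.15, eps=1e-4):
    """Approximate personalized PageRank via Andersen-Chung-Lang pushes.

    adj: dict mapping node -> list of neighbors (undirected, no self-loops).
    Assumes the seed has at least one neighbor. Returns a dict holding
    PageRank mass only for the nodes that were actually pushed.
    """
    p = {}                 # approximate PageRank vector (sparse)
    r = {seed: 1.0}        # residual mass still to be distributed
    queue = deque([seed])
    in_queue = {seed}
    while queue:
        u = queue.popleft()
        in_queue.discard(u)
        du = len(adj[u])
        ru = r.get(u, 0.0)
        if ru < eps * du:  # residual too small to push
            continue
        # Keep an alpha fraction, hold half of the rest at u (lazy walk),
        # and spread the other half evenly over u's neighbors.
        p[u] = p.get(u, 0.0) + alpha * ru
        r[u] = (1 - alpha) * ru / 2
        share = (1 - alpha) * ru / (2 * du)
        for v in adj[u]:
            r[v] = r.get(v, 0.0) + share
            if v not in in_queue and r[v] >= eps * len(adj[v]):
                queue.append(v)
                in_queue.add(v)
        if u not in in_queue and r[u] >= eps * du:
            queue.append(u)
            in_queue.add(u)
    return p


def sweep_cut(adj, p):
    """Return the lowest-conductance prefix of nodes sorted by p[u]/deg(u)."""
    # vol(V) = 2m; computed globally here for simplicity, though in practice
    # it is usually known up front.
    total_vol = sum(len(nbrs) for nbrs in adj.values())
    order = sorted(p, key=lambda u: p[u] / len(adj[u]), reverse=True)
    in_set, vol, cut = set(), 0, 0
    best_phi, best_k = float("inf"), 0
    for k, u in enumerate(order, start=1):
        du = len(adj[u])
        inside = sum(1 for v in adj[u] if v in in_set)
        cut += du - 2 * inside  # edges into S stop being cut, the rest start
        vol += du
        in_set.add(u)
        denom = min(vol, total_vol - vol)
        if denom > 0 and cut / denom < best_phi:
            best_phi, best_k = cut / denom, k
    return sorted(order[:best_k]), best_phi


# Toy graph: two 4-cliques {0,1,2,3} and {4,5,6,7} joined by the edge 3-4.
edges = [(0, 1), (0, 2), (0, 3), (1, 2), (1, 3), (2, 3),
         (4, 5), (4, 6), (4, 7), (5, 6), (5, 7), (6, 7), (3, 4)]
adj = {u: [] for u in range(8)}
for a, b in edges:
    adj[a].append(b)
    adj[b].append(a)

p = acl_push(adj, seed=0)
cluster, phi = sweep_cut(adj, p)
print(cluster, round(phi, 4))  # [0, 1, 2, 3] 0.0769
```

On this toy graph the sweep recovers the seed's own clique, whose conductance is 1/13 (one cut edge over a volume of 13). Ordering by `p[u] / deg(u)` rather than `p[u]` is the usual degree normalization in sweep cuts, so high-degree nodes are not favored merely for being well connected.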

Failure Pressure Prediction Using Machine Learning

- Regression Models

Reflections on the 10th anniversary of the Revolutions blog

On December 9, 2008, very nearly ten years ago, the first post on Revolutions was published. Way back then, this blog was part of a young startup called Revolution Computing, which later became Revolution Analytics. (That name persists to this day in the URL of this blog.) The idea at that time was to introduce the world to a wonderful but little-known statistics environment called R, by sharing news and applications of R with the broader data analysis community, along with tips and tricks for those who had already discovered R.

Should you become a data scientist?

By Sarah Nooravi, MobilityWare, LinkedIn Top Voice in Data Science and Analytics

covrpage, more information on unit testing

In this post, we shall explore covrpage by Jonathan Sidi, the first R package to receive Locke Data’s new support! With this nifty package, you can better communicate how complete and thorough your package’s unit tests are!

What’s new on arXiv

Anomaly Detection for Network Connection Logs

5½ Reasons to Ditch Spreadsheets for Data Science: Code is Poetry

The post 5½ Reasons to Ditch Spreadsheets for Data Science: Code is Poetry appeared first on The Lucid Manager.

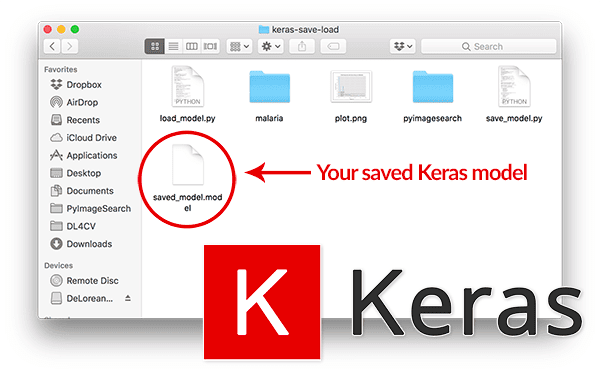

Keras – Save and Load Your Deep Learning Models

R Packages worth a look

Persistence Terrace for Topological Data Analysis (pterrace)
Plot the summary graphic called the persistence terrace for topological inference. It also provides the aid tool called the terrace area plot for deter …