Data Science is a broad field so as its team but the question is why you want to build a data science team?Data Science has become the top growing job and its Entry-level salaries can range into six figures, and can lead to more job opportunities by 2020. We are going to mention main important aspects of a data science organization.

A few upcoming R conferences

Here are some conferences focused on R taking place in the next few months:

University of Nebraska at Omaha: Faculty Position in Computer Science [Omaha, NE]

At: University of Nebraska at Omaha Location: Omaha, NEWeb: www.unomaha.eduPosition: Faculty Position in Computer Science

Location: Omaha, NEWeb: www.unomaha.eduPosition: Faculty Position in Computer Science

Proof that 1/7 is a repeated decimal

This blog post serves as an exercise and solution to the following question:

Challenges & Solutions for Production Recommendation Systems

Introduction

Online Master’s in Applied Data Science From Syracuse

Earn Your Master’s in Applied

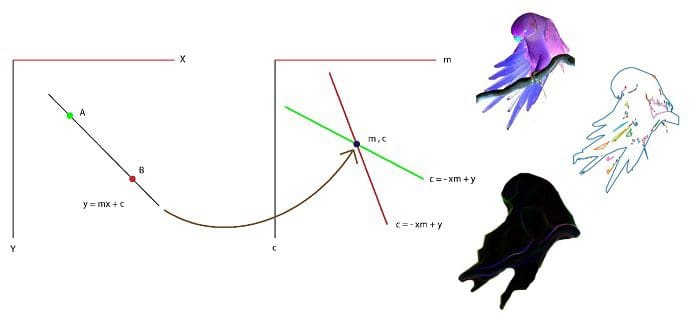

Basic Image Data Analysis Using Python – Part 4

If you did not already know

Multi-Context Label Embedding (MCLE)  Label embedding plays an important role in zero-shot learning. Side information such as attributes, semantic text representations, and label hierarchy are commonly used as the label embedding in zero-shot classification tasks. However, the label embedding used in former works considers either only one single context of the label, or multiple contexts without dependency. Therefore, different contexts of the label may not be well aligned in the embedding space to preserve the relatedness between labels, which will result in poor interpretability of the label embedding. In this paper, we propose a Multi-Context Label Embedding (MCLE) approach to incorporate multiple label contexts, e.g., label hierarchy and attributes, within a unified matrix factorization framework. To be specific, we model each single context by a matrix factorization formula and introduce a shared variable to capture the dependency among different contexts. Furthermore, we enforce sparsity constraint on our multi-context framework to strengthen the interpretability of the learned label embedding. Extensive experiments on two real-world datasets demonstrate the superiority of our MCLE in label description and zero-shot image classification. …

Label embedding plays an important role in zero-shot learning. Side information such as attributes, semantic text representations, and label hierarchy are commonly used as the label embedding in zero-shot classification tasks. However, the label embedding used in former works considers either only one single context of the label, or multiple contexts without dependency. Therefore, different contexts of the label may not be well aligned in the embedding space to preserve the relatedness between labels, which will result in poor interpretability of the label embedding. In this paper, we propose a Multi-Context Label Embedding (MCLE) approach to incorporate multiple label contexts, e.g., label hierarchy and attributes, within a unified matrix factorization framework. To be specific, we model each single context by a matrix factorization formula and introduce a shared variable to capture the dependency among different contexts. Furthermore, we enforce sparsity constraint on our multi-context framework to strengthen the interpretability of the learned label embedding. Extensive experiments on two real-world datasets demonstrate the superiority of our MCLE in label description and zero-shot image classification. …

Document worth reading: “Detecting Dead Weights and Units in Neural Networks”

Deep Neural Networks are highly over-parameterized and the size of the neural networks can be reduced significantly after training without any decrease in performance. One can clearly see this phenomenon in a wide range of architectures trained for various problems. Weight/channel pruning, distillation, quantization, matrix factorization are some of the main methods one can use to remove the redundancy to come up with smaller and faster models. This work starts with a short informative chapter, where we motivate the pruning idea and provide the necessary notation. In the second chapter, we compare various saliency scores in the context of parameter pruning. Using the insights obtained from this comparison and stating the problems it brings we motivate why pruning units instead of the individual parameters might be a better idea. We propose some set of definitions to quantify and analyze units that don’t learn and create any useful information. We propose an efficient way for detecting dead units and use it to select which units to prune. We get 5x model size reduction through unit-wise pruning on MNIST. Detecting Dead Weights and Units in Neural Networks

Multithreaded in the Wild

Hello Stitch Fix followers, check out where our fellow Stitch Fixers are speaking this month.