I’ve recently added new bloggers to the site, leading to an influx of posts (142 new posts on the site just last week). And while I love R, I do not have the time to read them all. Luckily, these posts are also published on the R-bloggers Twitter page, where over 62K followers see the new posts and decide whether to give them a “like”. So I thought it might be helpful to sort all of last week’s posts that got at least 15 likes, just to make sure I don’t miss a particularly popular post. I’m posting the list here in the hope that it will be helpful to others as well. Here it is:

5 “Clean Code” Tips That Will Dramatically Improve Your Productivity

By George Seif, AI / Machine Learning Engineer

How AI Will Change Healthcare

Editor’s note: This piece was written in collaboration with the MAA Center

In Memoriam: Manfred te Grotenhuis

Manfred te Grotenhuis passed away. He was a respected sociologist, statistician, and teacher. I’ll leave it to others to comment on these achievements. To me, he was my teacher and mentor in statistics, and a dear colleague. Textbooks and other teachers have a lot to say about the theory of statistics, but it was Manfred who taught me the joy and intuition of doing statistics.

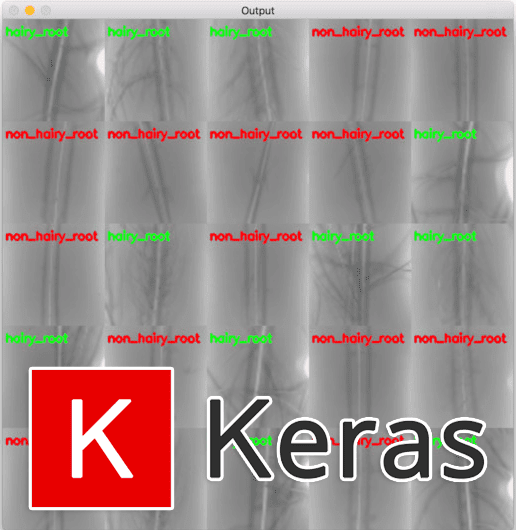

Deep learning, hydroponics, and medical marijuana

If you did not already know

StochasticNet: Deep neural networks are a branch of machine learning that has seen a meteoric rise in popularity due to their powerful ability to represent and model high-level abstractions in highly complex data. One area of deep neural networks that is ripe for exploration is neural connectivity formation. A pivotal study on the brain tissue of rats found that synaptic formation for specific functional connectivity in neocortical neural microcircuits can be surprisingly well modeled and predicted as a random formation. Motivated by this intriguing finding, we introduce the concept of StochasticNet, where deep neural networks are formed via stochastic connectivity between neurons. Such stochastic synaptic formations in a deep neural network architecture can potentially allow for efficient utilization of neurons for performing specific tasks. To evaluate the feasibility of such an architecture, we train a StochasticNet using three image datasets. Experimental results show that a StochasticNet can be formed that provides comparable accuracy and reduced overfitting when compared to conventional deep neural networks with more than twice as many neural connections. …
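The core idea in the StochasticNet teaser, forming a layer's connections at random instead of connecting every neuron to every other, can be illustrated with a small sketch. This is not the paper's method: it assumes each input-to-output connection is kept independently with a probability `p` (a Bernoulli mask), and the function names and the ReLU activation are hypothetical choices made for illustration only.

```python
import random

def stochastic_mask(n_in, n_out, p, seed=None):
    """Hypothetical helper: keep each input->output connection independently with probability p."""
    rng = random.Random(seed)
    return [[1 if rng.random() < p else 0 for _ in range(n_in)] for _ in range(n_out)]

def init_weights(n_in, n_out, seed=None):
    """Draw standard-normal weights for every potential connection."""
    rng = random.Random(seed)
    return [[rng.gauss(0.0, 1.0) for _ in range(n_in)] for _ in range(n_out)]

def forward(x, weights, mask):
    """ReLU layer in which absent connections (mask == 0) contribute nothing."""
    out = []
    for w_row, m_row in zip(weights, mask):
        s = sum(w * m * xi for w, m, xi in zip(w_row, m_row, x))
        out.append(max(0.0, s))
    return out

def density(mask):
    """Fraction of possible connections that were actually formed."""
    total = sum(len(row) for row in mask)
    return sum(sum(row) for row in mask) / total

if __name__ == "__main__":
    mask = stochastic_mask(100, 50, p=0.4, seed=0)
    print(f"connection density: {density(mask):.3f}")  # close to 0.4
```

Because the mask is drawn once and then fixed, a layer built this way has roughly `p` times as many active connections as a fully connected one, which is the kind of reduced-connectivity network the teaser compares against conventional ones.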

ABC intro for Astrophysics

Today I received in the mail a copy of the short book published by EDP Sciences after the courses we gave last year at the astrophysics summer school in Autrans. It contains a quick introduction to ABC extracted from my notes (which I still hope to turn into a book!), as well as longer coverage of Bayesian foundations and computations by David Stenning and David van Dyk.

Distilled News

How to Use IoT Datasets in AI Applications

Running R scripts within in-database SQL Server Machine Learning

Having all the R functions, all libraries, and any kind of definitions (URLs, links, working directories, environments, memory, etc.) in one file is nothing new, but it is sometimes a lifesaver.

Random Walk of Pi – Another ggplot2 Experiment

There are so many beautiful pieces of “π” art everywhere, and I wanted to practice ggplot2 by mimicking more of them. Another piece of pi art that caught my eye is the random walk of pi digits. Here’s one example from WIRED magazine.
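The random-walk idea can be sketched by turning each digit into a unit step and accumulating the positions. The mapping below is an assumption, not necessarily the one used in the post or by WIRED: digit `d` steps one unit in the direction `2π·d/10`, so the ten digits point in ten evenly spaced directions. The digits are hardcoded (the first 50 decimals of π), and `pi_random_walk` is a hypothetical name. Only the coordinates are computed; drawing them as a connected path is left to a plotting tool such as ggplot2.

```python
import math

# First 50 decimal digits of pi (the digits after "3."), hardcoded for illustration.
PI_DECIMALS = "14159265358979323846264338327950288419716939937510"

def pi_random_walk(digits, n_directions=10):
    """Return the (x, y) points of a walk where digit d moves one unit
    at angle 2*pi*d/n_directions (an assumed digit-to-direction mapping)."""
    x, y = 0.0, 0.0
    points = [(x, y)]
    for ch in digits:
        angle = 2 * math.pi * int(ch) / n_directions
        x += math.cos(angle)
        y += math.sin(angle)
        points.append((x, y))
    return points

if __name__ == "__main__":
    walk = pi_random_walk(PI_DECIMALS)
    print(len(walk))  # 51 points: the origin plus one per digit
    print(walk[-1])   # where the walk ends after 50 steps
```

Using more digits (for example, from a file of precomputed digits) only changes the input string; the walk itself stays the same cumulative sum of unit steps.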