Introduction

As we’re nearing the end of 2017, we’ve come to the 5-year mark of deep learning really starting to hit the mainstream. For me, I think of AlexNet and the 2012 ImageNet competition as the coming out party (although researchers have definitely been working in this field for quite a bit longer).

5 years. Think about that. It’s been just 5 years and we’ve absolutely revolutionized the way we look at the capabilities of machines, the way we build software (Software 2.0), and the ways we think about creating products and companies (just ask any VC or startup founder). Tasks that seemed impossible just a decade ago have become tractable, provided you have the appropriate labeled dataset and compute power, of course.

In this post, we’ll overview the last couple years in deep learning, focusing on industry applications, and end with a discussion on what the future may hold.

Why the Hype over DL

(Yeah I know, most of us don’t need a graph to tell us that deep learning is kind of a buzz word right now)

I’m sure we’ve all heard our fair share of big statements on the type of impact that AI (specifically ML and more specifically DL) will have.

And yes, while I’m sure we’ve all learned to treat statements like “I used a deep neural network to solve X” with some scrutiny, there’s no denying that the advent of deep learning has fundamentally changed the way we approach creating intelligent systems.

So what is it about deep learning that has made it the centerpiece of all tech buzzwords? In my opinion, it comes down to the ability of deep learning models to analyze forms of data such as speech, text, and images, an ability made possible by large labeled datasets, enormous compute power, and effective network architectures.

Speech. Text. Images. Think about those 3 forms of data. Traditionally, these were forms of data that our computer systems didn’t handle well. You could ask a computer to multiply 34,632 by 68,821 and that would be a piece of cake. But, if you asked that same computer to determine whether an image contains a dog or a cat, it was (and still sometimes is) a very difficult task.

As humans, our lives revolve around these 3 data forms. We communicate to each other every day through speech, we convey ideas through text, and we illustrate our world through images. If our end goal is to build *intelligent* AI systems, being able to analyze speech, text, and images is a crucial requirement.

While I wouldn’t say that deep learning is necessarily the right path to that type of artificial general intelligence (AGI) - I’m hesitant about whether compute + data + supervised learning can work for all tasks - I still believe it’s an incredibly transformative technology that is deserving of the hype it receives. Let’s take a look at a couple fields and look at how they’ve been impacted by DL.

Image Processing

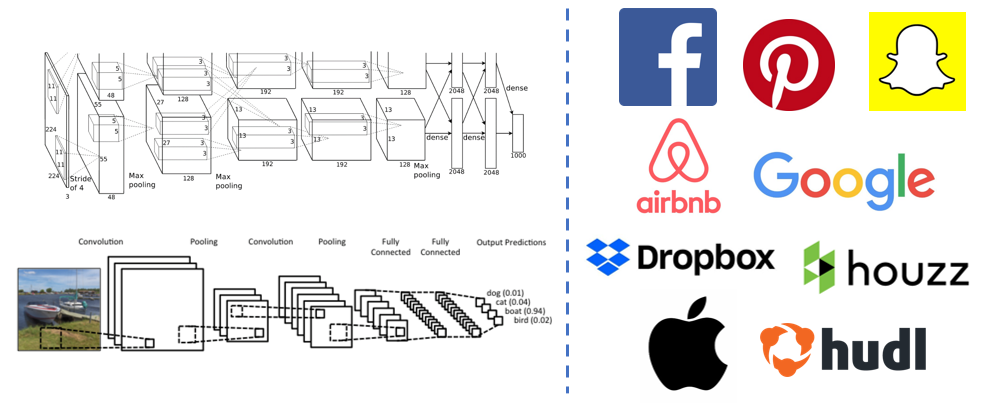

Since we mentioned AlexNet at the beginning of the post, I think it’s only fair that we start with image processing tasks. I think most people would agree that image processing is the field that has been most successfully impacted by deep learning. For most image related tasks, feature extraction methods like SIFT and HOG are being discarded in favor of deep learning models called convolutional neural networks. These are special kinds of neural networks that use learnable convolutional filters to process image data.
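To make the idea of a convolutional filter a bit more concrete, here’s a minimal sketch in plain Python (no framework). The function name `conv2d`, the toy image, and the kernel values are all my own assumptions for illustration. In a real CNN the kernel values are learned during training rather than hand-picked, and like most DL frameworks this computes a cross-correlation (the kernel isn’t flipped).

```python
def conv2d(image, kernel):
    """Slide `kernel` over `image` with stride 1 and no padding."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    output = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            # Weighted sum of the image patch under the kernel.
            total = 0.0
            for di in range(kh):
                for dj in range(kw):
                    total += image[i + di][j + dj] * kernel[di][dj]
            row.append(total)
        output.append(row)
    return output


# Toy 4x4 "image": dark left half (0), bright right half (1).
image = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
]

# Hand-picked 2x2 filter that responds to dark-to-bright vertical edges.
# (Hypothetical values; a trained network would learn its own.)
kernel = [
    [-1, 1],
    [-1, 1],
]

feature_map = conv2d(image, kernel)
for row in feature_map:
    print(row)
```

The resulting feature map is only non-zero in the middle column, which is where the dark-to-bright edge sits. A CNN stacks many of these filters and learns their values from data instead of relying on hand-designed ones.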

Impact on Industry

I want you to think about the biggest companies and hottest startups in tech today. Think about the Big 5, think about companies whose products you use everyday, think about startups that have IPO’d, the ones that probably shouldn’t have, and write them down. Here’s my quick list.

Now, take a highlighter and highlight the ones that have to process some sort of image related data.

Images are such a key part of their products and services that the ability to create deep learning systems to automate the analysis of this data can greatly benefit companies and their products. Let’s look at some of these companies and see how they’ve used CNNs and other DL techniques over the past few years.

- Dropbox – Just this past year, Dropbox released a blog post that explained how they used deep learning to build an OCR pipeline used on their mobile document scanner feature. The gist of the approach was that they used one system to crop out bounding boxes of distinct words in the image, and another made up of convolutional layers and bi-directional LSTM layers to extract the text contained in the bounding box. An interesting note to make about this particular use case is the latency requirement that Dropbox needed. A lot of times in deep learning, a larger network will lead to greater accuracy but at the cost of slower inference time. This is a tradeoff that is of key importance when thinking about using DL methods in production.

- Google – An interesting new trend that has come up as a result of the AI revolution is the AI-as-a-service model. While smaller companies may not have the data, compute, or talent necessary to perform image processing at the scale or effectiveness of other companies, there are services like Google Cloud Platform, Amazon Rekognition, Clarifai, and others that offer image understanding through APIs. Google currently uses CNNs as the core component behind their Object Detection API.

- Facebook – With over 350 million photos uploaded to Facebook each day, it’s clear that intelligent analysis of this data can be beneficial. While Facebook AI Research’s blog has posts that talk about a number of different topics, I’d like to focus on one that looks at the challenges that come with having to analyze images and videos in real-time. In the post, the authors go over how the nature of real-time processing (such as for style transfer) requires lightweight frameworks, like Caffe2Go, as well as model size optimization.

- Snapchat – While Snap is a little bit more secretive when it comes to telling the public about their ML and Computer Vision techniques, it’s definitely clear that videos and images are essential to the core product. Face detection and filters are areas where DL approaches are possibly being utilized.

- Pinterest – If you’ve ever used the Pinterest product, you’ll see from the very first moment you open the app that appealing to the user’s visual senses and interests is a key part of user engagement. As explained in this Medium post, Pinterest uses DL to show the user visually similar Pins to the ones they’ve already pinned to their personal boards. While the exact approach doesn’t use CNNs, we see a small neural network and a Pin2Vec embedding component used to rank the related Pins.

- Hudl – Hudl is a Lincoln-based startup that provides tools for coaches and athletes to analyze game footage. This blog post shows how they were able to use CNNs to classify points in sports videos where a specific event (such as a 3-pointer) took place.

- Airbnb – Images may not be as core to Airbnb’s product as they might be to the products of other companies, but allowing users to upload and view images of available listings is an important part of the user experience. This blog post shows how machine learning techniques are used to classify and rank the picture quality of images that are uploaded. From a product standpoint, this can be helpful when determining which images to show the customer while they are browsing for a listing.

- Apple – As a company that was traditionally known for its secrecy when it came to its new products and tech, it was refreshing to see that Apple started their own machine learning journal earlier this year. One of the most interesting posts was their description of using deep nets for on-device face detection. Traditional approaches to face detection, such as the Viola-Jones algorithm, have worked to some extent, but it’s interesting to see so many companies wanting to test and see if a deep learning approach can work better than current approaches (and more often than not, it does). Apple’s problem space is also unique because they care not only about low latency predictions, but also about low power consumption since all the computation for the face detection happens on-device.

- Houzz – Houzz is a startup that offers a platform and community for interior design and decoration. They utilize DL methods for identifying unique pieces of furniture from a picture of the interior of a house. By identifying the type and brand of furniture, Houzz can then search department stores and allow you to buy the product straight from the app.

Important Research Papers

Check out this blog post and this one if you’re interested in more in-depth overviews.

- AlexNet (2012) - Marked the coming out party for convolutional neural networks. First time that a CNN performed so well on a historically difficult ImageNet dataset.

- ZFNet (2013) - Showed new techniques for visualizing the inner workings of CNNs.

- OverFeat (2013) - Object localization and detection using CNNs.

- R-CNN (2014), Fast R-CNN (2015), and Faster R-CNN (2015) - Models used for object detection tasks.

- VGGNet (2014) - Simplicity and depth with 13 convolutional layers of 3x3 sized filters (in the VGG-16 configuration).

- GoogLeNet/Inception (2015) - Novel Inception module that contains differently sized convolution operations as well as one maxpool.

- ResNet (2015) - New residual block concept that has been transferred to a lot of new network architectures.

- Mask R-CNN (2017) - Used the advancements in Faster R-CNN to perform pixel level segmentation.
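Since the residual block shows up in so many later architectures, here’s a rough sketch of the core idea: the block computes some function F(x) and then adds the input x back in, so the output is relu(F(x) + x). This is a simplification under my own assumptions. The real ResNet uses convolutional layers with batch normalization, whereas this toy version uses two small dense layers, and the helper names (`relu`, `linear`, `residual_block`) are hypothetical.

```python
def relu(values):
    """Element-wise ReLU."""
    return [max(0.0, v) for v in values]


def linear(values, weights, bias):
    """Dense layer: `weights` holds one row of weights per output unit."""
    return [
        sum(w * v for w, v in zip(row, values)) + b
        for row, b in zip(weights, bias)
    ]


def residual_block(x, w1, b1, w2, b2):
    """Compute relu(F(x) + x), where F is two dense layers with a ReLU between."""
    hidden = relu(linear(x, w1, b1))
    fx = linear(hidden, w2, b2)
    # The skip connection: add the block's input back onto its output.
    return relu([f + xi for f, xi in zip(fx, x)])


# With all-zero weights, F(x) is 0, so the block passes x through unchanged.
x = [1.0, 2.0, 3.0]
zero_w = [[0.0] * 3 for _ in range(3)]
zero_b = [0.0] * 3
y = residual_block(x, zero_w, zero_b, zero_w, zero_b)
print(y)
```

The zero-weight case is the interesting one: a residual block can fall back to the identity mapping, which is part of why very deep stacks of these blocks are easier to train.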

Natural Language Processing

When we talk about natural language processing, there are a lot of different tasks that fit under this umbrella. Question answering, machine translation, sentiment analysis, document summarization, the list goes on and on. NLP is a large and broad field that encompasses advancements in linguistics and traditional AI approaches. The application of deep learning to NLP tasks has had quite a bit of success, but we also have quite a ways to go.

Impact on Industry

- Google and Facebook – Improvements in the field of machine translation, creating systems that can translate text into other languages, have been one of the biggest success stories in DL applied to NLP. Google and Facebook have both used an approach called Neural Machine Translation to improve Google Translate and to create accurate translations of Facebook posts.

- Baidu, Google, Apple, and Amazon - The rise of conversational agents like Siri, Alexa, Cortana, and Google Assistant can be attributed to more advanced speech recognition techniques that involve deep nets. We’ve seen press releases and blog posts from Baidu, Google, Apple, and Amazon. These companies all definitely have their own unique twists on their models, but the general idea of using systems with RNNs, LSTMs, Seq2Seq models, and/or CTC loss functions is applicable across the board.

- Twitter – With about 500 million tweets sent per day, Twitter definitely has a lot of textual information to parse through. One of their recent blog posts discusses a custom neural net that determines the ranking of a set of tweets to show on a user’s feed.

- Quora – Earlier this year, Quora released a dataset that contained question pairs, as well as labels for whether or not the two sentences in each pair are duplicates of each other. While it’s unclear what type of system Quora uses behind the scenes to address the duplicate question problem, some sort of ML (or DL) might currently be used.

- Spotify – Typically used for image processing tasks, CNNs were the DL method of choice for Spotify’s music recommendation system (This post is a bit old by DL standards – 2014 – so it’d be interesting to find out what updates they’ve made). This system was used to augment the collaborative filtering algorithm that has traditionally been used.

- Salesforce – In 2016, Salesforce acquired MetaMind, a startup headed by Stanford professor Richard Socher. Since then, the group has primarily been doing NLP research, helping set the stage for Einstein, one of Salesforce’s main products. One interesting feature is their text summarization capability that uses both encoder/decoder RNNs as well as reinforcement learning to summarize text articles. The group also has an active blog that is more technically oriented.

Important Papers

- Word2Vec (2013) - First effective and scalable method of generating dense word vectors from a text corpus.

- Seq2Seq (2014) - Deep neural net that maps sequences to other sequences. A very general approach that has served as a base for a lot of future breakthroughs in DL applied to NLP. One of the biggest subsequent breakthroughs was the attention mechanism, introduced in this paper.

- Deep Speech (2014) – Work from authors at Baidu that illustrated the first scalable use of an end-to-end deep learning network architecture for speech recognition. See Deep Speech 2 (2015), Attention-Based SR (2015), and Deep Speech 3 (2017) for advancements that largely stemmed from this paper.

- Dynamic Memory Networks for NLP (2015) – Applied a neural network architecture to the task of question answering.

- Neural Machine Translation (2016) - Google’s paper describing their approach to translating text from one language to another.
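The attention mechanism mentioned in the Seq2Seq entry is easier to see in code than in words. Here’s a minimal sketch, assuming dot-product scoring for simplicity (the original attention work used a small learned scoring network instead, so treat this as an illustration of the idea rather than that paper’s exact method). The names `softmax` and `attend` and the toy vectors are my own.

```python
import math


def softmax(scores):
    """Turn raw scores into weights that are positive and sum to 1."""
    largest = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - largest) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attend(query, encoder_states):
    """Weight each encoder state by its similarity to the decoder query."""
    scores = [
        sum(q * h for q, h in zip(query, state))
        for state in encoder_states
    ]
    weights = softmax(scores)
    dim = len(encoder_states[0])
    # Context vector: weighted average of the encoder states.
    context = [
        sum(w * state[d] for w, state in zip(weights, encoder_states))
        for d in range(dim)
    ]
    return weights, context


# Three toy encoder states (one per input token) and one decoder query.
encoder_states = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
query = [2.0, 0.0]
weights, context = attend(query, encoder_states)
print(weights, context)
```

Instead of squeezing the whole input sentence into one fixed vector, the decoder gets to look back at every encoder state and focus on the ones most relevant to the word it’s currently generating.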

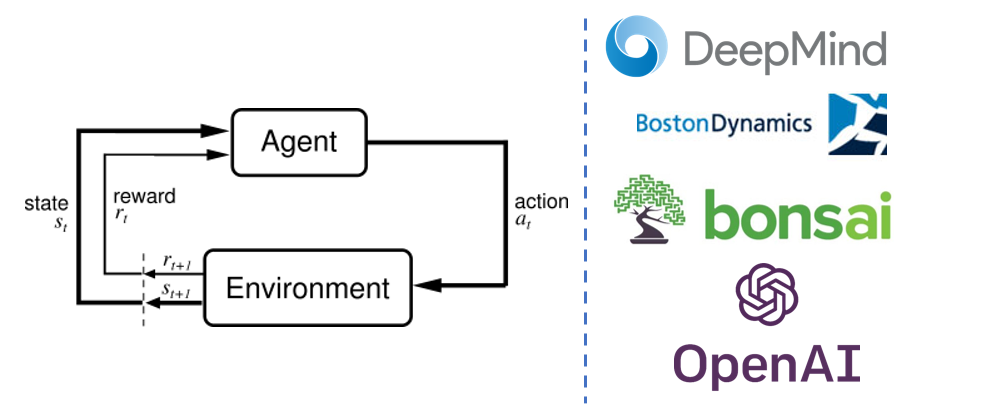

Reinforcement Learning

RL approaches are most commonly seen in the robotics fields, where I think the Holy Grail amounts to creating an agent or robot that is able to learn how to perform any task we want. There have been great advances, evidenced by agents that can beat Atari games and Go, but I think it’ll still be a couple years before we really see an impact on products and services that we use every day.

Impact on Industry

- DeepMind – Similar to OpenAI, DeepMind isn’t a company in the traditional sense, but they’ve been the most instrumental in pushing RL research. From inventing DQN to creating the famous AlphaGo system, DeepMind has been a key contributor to deep RL research. You can learn more about what they’re currently working on through their blog.

- Boston Dynamics – While it’s unclear how much deep learning is used in their systems (they seem to do a lot more feature engineering), they are a company that has made a lot of cool advances and is definitely one to keep an eye on. And honestly, I had to include them because this is just too cool.

- OpenAI – While it’s hard to even say whether OpenAI is really part of industry or if they’re just a really well funded research lab, it’s clear that in trying to achieve their mission to “build safe AGI”, the road involves research in RL. They frequently update their blog where they talk about new advancements in self-play, policy optimization, and multiagent cooperation.

- Bonsai – Bonsai is a Berkeley-based startup that wants to abstract away the complexity of building AI systems by creating a platform that businesses can use to create and deploy ML models (with a focus on RL). They also have an active blog that has interesting pieces on RL, industrial AI, and interpretability.

The next ‘Important Papers’ section is interesting to me because RL powered by deep learning is still in its infancy. Reinforcement learning, in general, is difficult. Getting an agent to do what you want it to do in an unknown environment with continuous state and action spaces is not a trivial task by any stretch. While the advancements shown through Atari games and AlphaGo are fantastic breakthroughs, it’s been difficult to see how much of what we’ve learned through current advancements can be transferred to tasks that can be useful in industry.

So, why is this transfer difficult? Well, this is in part because of the way that board games and computer games are structured. In Atari games and Go, the agent is making decisions and actions in a space where the environment is deterministic. We know exactly how the board state will change when the agent decides to place a white stone on row 10, column 13. With a lot of RL tasks in the real world, the environments are a little more difficult. The action space and state space for the agent can be continuous and there is an almost unlimited amount of noise and variability that the agent will encounter. For those interested, check out Andrej Karpathy’s blog post on this distinction.

The difficulties of handling partially observed and nondeterministic environments with continuous action and state spaces make RL a problem that even DL methods have had trouble with. It’ll be interesting to see how the field progresses. Here are a couple of past advancements.

Important Papers

Check out this blog post if you’re interested in more in-depth overviews.

- Atari with DQN (2013) and subsequent Nature Paper (2015) – First successful use of deep learning in RL. Introduced DQN (Deep Q-Network), which is an end-to-end RL agent that uses a large neural net to process game states and choose appropriate actions.

- Asynchronous Methods for Deep RL (2016) – Introduced the A3C algorithm that expanded and improved upon DQN.

- AlphaGo (2016) – Described the approach used to create the AlphaGo system that beat Lee Sedol in March 2016. Monte Carlo Tree Search and DNNs were the primary components in the system.

- AlphaGo Zero (2017) – The latest improvement on AlphaGo, which showed an interesting random play/starting from scratch approach.
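For a feel of what DQN is built on, here’s a sketch of the classic tabular Q-learning update. To be clear about the assumptions: DQN replaces the lookup table below with a neural network and adds tricks like experience replay, so this is the underlying update rule, not DQN itself. The function name `q_update`, the state/action labels, and the hyperparameter values are hypothetical.

```python
def q_update(q_table, state, action, reward, next_state, alpha=0.1, gamma=0.99):
    """Apply one Q-learning update and return the new Q(state, action).

    alpha is the learning rate and gamma is the discount factor.
    """
    # Best value the agent believes it can get from the next state.
    best_next = max(q_table[next_state].values())
    # Bootstrapped target: immediate reward plus discounted future value.
    target = reward + gamma * best_next
    # Move the current estimate a small step toward the target.
    q_table[state][action] += alpha * (target - q_table[state][action])
    return q_table[state][action]


# Toy table with two states and two actions each.
q_table = {
    "s0": {"left": 0.0, "right": 0.0},
    "s1": {"left": 0.0, "right": 1.0},
}

new_value = q_update(q_table, "s0", "right", reward=0.5, next_state="s1")
print(new_value)
```

A table like this stops being practical once the state is something like raw Atari pixels, which is the gap that a deep network fills in DQN.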

Other Important Papers

I don’t think I can do a deep learning overview blog post justice without finding some way to include these next research papers. Even though we can’t point to unique use cases in industry, the following contributions have been critical in pushing state of the art deep learning.

- Gradient-Based Learning Applied to Document Recognition (1998) – aka LeNet. Yann LeCun’s successful use of CNNs applied to MNIST data. It wasn’t until 2012 with AlexNet that CNNs started to do better on tougher image datasets, like ImageNet. Incredible that this paper is nearly 20 years old now!

- A Fast Learning Algorithm for DBNs (2006) – A Geoffrey Hinton paper that showed techniques for effectively training deep belief networks (as they were previously referred to).

- Dropout (2013) - Hugely important regularization technique that drops out random neurons in DNNs to combat the classic issue of overfitting.

- On the Importance of Initialization and Momentum in DL (2013) – As the title of the paper suggests, the authors discuss SGD and the improvements that can be seen with careful weight initialization and proper momentum tuning.

- Adam: A Method for Stochastic Optimization (2014) – Adam is one of the most widely used optimization algorithms for training DNNs.

- How Transferable are Features in DNNs (2014) - First major study that shed light on the idea that features learned by filters in CNNs could be transferred to other networks and used as effective starting points.

- Generative Adversarial Networks (2014) - The original GAN paper that introduced the use of discriminator and generator networks to model a data distribution.

- Neural Turing Machines (2014) – Examined the possible use of external memory coupled with standard DNNs. Work was expanded upon with the Differentiable Neural Computer (2016).

- Batch Normalization (2015) - Accelerated the training and stability of deep neural networks by addressing the problem of internal covariate shift.

- Style Transfer (2015) - Showed how you can use deep neural nets to create artificial artistic images.
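Since Adam is on the list and gets used just about everywhere, here’s a sketch of a single Adam update for one scalar parameter, following the update rule from the paper (moving averages of the gradient and squared gradient, plus bias correction). The function name `adam_step` and the toy objective f(x) = x^2 are my own assumptions, and the learning rate of 0.1 is picked just to make the steps visible.

```python
import math


def adam_step(param, grad, m, v, t, lr=0.001, beta1=0.9, beta2=0.999, eps=1e-8):
    """One Adam update for a scalar parameter at timestep t (t starts at 1)."""
    # Exponential moving averages of the gradient and the squared gradient.
    m = beta1 * m + (1 - beta1) * grad
    v = beta2 * v + (1 - beta2) * grad * grad
    # Bias correction, since m and v start at zero.
    m_hat = m / (1 - beta1 ** t)
    v_hat = v / (1 - beta2 ** t)
    param = param - lr * m_hat / (math.sqrt(v_hat) + eps)
    return param, m, v


# Toy problem: minimize f(x) = x^2, whose gradient is 2x.
x, m, v = 5.0, 0.0, 0.0
history = []
for t in range(1, 4):
    grad = 2 * x
    x, m, v = adam_step(x, grad, m, v, t, lr=0.1)
    history.append(x)

print(history)
```

Notice that the very first step moves x by almost exactly the learning rate, regardless of how large the gradient is. That per-parameter scaling is a big part of why Adam tends to work reasonably well without much tuning.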

Going Forward

Technology is notoriously difficult to predict. Quite frankly, I think it’s nearly impossible to predict what the technological landscape will look like 10 or more years into the future. However, when I first thought of making this post, I wanted to not only focus on the advancements over the last half decade, but also to spark a discussion on what type of impact deep learning can have in the future.

Like I mentioned in the Intro, I think deep learning is unique because we finally have ways of understanding speech, text, and images. This opens up so many different problem spaces in a wide variety of fields. Let’s think of a couple.

- Based on aerial images, farmers can use CNNs to determine locations in their field where more soil or fertilizer is needed.

- Doctors can use CNNs to help them detect patterns and find abnormalities in X-Rays and other imaging data.

- Waste management companies can use CNNs to help sort through recycling and garbage debris.

- Companies can use RNNs to create systems that help facilitate and direct conversations between customer service reps and users to the correct places.

- Psychologists can use RNNs to help them detect changes or abnormalities in a person’s speech patterns in order to spot symptoms of mental illness or depression.

The capabilities that deep learning methods provide are not just available to the Big 5 or to tech startups exclusively in SF. Given the right amount of data, compute, and a clear end goal (These are not trivial assumptions), I think almost every organization/company/group in the world can make use of this technology.

And yes, this all seems great, but I do agree that for some problem spaces, deep learning is a square peg for a round hole. It’s not the right solution sometimes. While the above are all reasonable application spaces, it’s important for your company/organization to take time to figure out whether deep learning is the solution, or if a simple linear regression + data preprocessing workflow is a better option. For some types of data and for some problem spaces, traditional ML methods will be hugely effective and you should definitely use them.

But when it comes to the really interesting problems in our world today, more often than not they are going to be dealing with speech, text, or images. For those, deep learning is an extremely exciting option and I can’t wait to see how the field evolves over the next 5 years.

Conclusion

Just want to end with one more thought exercise. I was recently listening to an A16Z podcast episode called Platforming the Future, where Tim O’Reilly and Benedict Evans talked about recent waves of progress in tech. It made me wonder about how we’re going to look at deep learning when we look back 20 years from now. Are we going to see it as a 5-10 year tech trend that slowly fizzled out or could it be the starting point on the quest to AGI, the greatest technological advancement in history?

Yes, deep learning is currently a buzz word. Yes, it is hyped. Yes, people use it in situations where they probably shouldn’t. But, as you saw in this post, it is fueling incredible progress in today’s tech world and it is solving real problems that we thought were impossible not too long ago. Seeing what we have accomplished over the last half decade and imagining the problems we’ll solve and the lives we’ll impact over the next half decade, well, that’s just straight up exciting.

Ways to Keep up With Deep Learning Progress

- HackerNews - I *guarantee* you there is probably at least one deep learning/machine learning related news story within the top 60 at any given time (There’s one at #18 at the time I’m writing this).

- ML Subreddit – Surprisingly, you can find a lot of in-depth technical discussions here.

- Twitter - Follow Smerity, Jack Clark, Karpathy, Soumith Chintala, Ian Goodfellow, and hardmaru. I also like to tweet/retweet about ML.

- Import AI Newsletter – Jack Clark releases a weekly newsletter on artificial intelligence.

- AI/DL Facebook Group – Very active group where members post anything from news articles to blog posts to general ML questions.

- Arxiv – Definitely for more advanced practitioners, but searching through the new releases section is a great way to get a sense of where research in the field is headed.

- ML Github Repo – I like to update this repo with any interesting papers/links/blogs I read about.

Deuces.

Written on December 4, 2017