In my post on Recurrent Neural Networks in Tensorflow, I observed that Tensorflow’s approach to truncated backpropagation (feeding in truncated subsequences of length n) is qualitatively different than “backpropagating errors a maximum of n steps”. In this post, I explore the differences, implement a truncated backpropagation algorithm in Tensorflow that maintains the distribution between backpropagated errors, and ask whether one approach is better than the other.

I conclude that:

-

Because a well-implemented evenly-distributed truncated backpropagation algorithm would run about as fast as full backpropagation over the sequence, and full backpropagation performs slightly better, it is most likely not worth implementing such an algorithm.

-

The discussion and preliminary experiments in this post show that n-step Tensorflow-style truncated backprop (i.e., with num_steps = n) does not effectively backpropagate errors the full n-steps. Thus, if you are using Tensorflow-style truncated backpropagation and need to capture n-step dependencies, you may benefit from using a num_steps that is appreciably higher than n in order to effectively backpropagate errors the desired n steps.

Differences in styles of truncated backpropagation

Suppose we are training an RNN on sequences of length 10,000. If we apply non-truncated backpropagation through time, the entire sequence is fed into the network at once, the error at time step 10,000 will be back propagated all the way back to time step 1. The two problems with this are that it is (1) expensive to backpropagate the error so many steps, and (2) due to vanishing gradients, backpropagated errors get smaller and smaller layer by layer, which makes further backpropagation insignificant.

To deal with this, we might implement “truncated” backpropagation. A good description of truncated backpropagation is provided in Section 2.8.6 of Ilya Sutskever’s Ph.D. thesis:

“[Truncated backpropagation] processes the sequence one timestep at a time, and every k1 timesteps, it runs BPTT for k2 timesteps…”

Tensorflow-style truncated backpropagation uses k1 = k2 (= num_steps). See Tensorflow api docs. The question this post addresses is whether setting k1 = 1 achieves better results. I will deem this “true” truncated backpropagation, since every error that can be backpropagated k2 steps is backpropagated the full k2 steps.

To understand why these two approaches are qualitatively different, consider how they differ on sequences of length 49 with backpropagation of errors truncated to 7 steps. In both, every error is backpropagated to the weights at the current timestep. However, in Tensorflow-style truncated backpropagation, the sequence is broken into 7 subsequences, each of length 7, and only 7 over the errors are backpropagated 7 steps. In “true” truncated backpropagation, 42 of the errors can be backpropagated for 7 steps, and 42 are. This may lead to different results because the ratio of 7-step to 1-step errors used to update the weights is significantly different.

To visualize the difference, here is how true truncated backpropagation looks on a sequence of length 6 with errors truncated to 3 steps:

And here is how Tensorflow-style truncated backpropagation looks on the same sequence:

Experiment design

To compare the performance of the two algorithms, I write implement a “true” truncated backpropagation algorithm and compare results. The algorithms are compared on a vanilla-RNN, based on the one used in my prior post, Recurrent Neural Networks in Tensorflow I, except that I upgrade the task and model complexity, since the basic model from my prior post learned the simple patterns in the toy dataset very quickly. The task will be language modeling on the ptb dataset, and to match the increased complexity of this task, I add an embedding layer and dropout to the basic RNN model.

I compare the best performance of each algorithm on the validation set after 20 epochs for the cases below. In each case, I use an AdamOptimizer (it does better than other optimizers in preliminary tests) and learning rates of 0.003, 0.001 and 0.0003.

5-step truncated backpropagation

-

True, sequences of length 20

-

TF-style

10-step truncated backpropagation

-

True, sequences of length 30

-

TF-style

20-step truncated backpropagation

-

True, sequences of length 40

-

TF-style

40-step truncated backpropagation

Code

Imports and data generators

1 | |

Model

1 | |

def reset_graph(): if ‘sess’ in globals() and sess: sess.close() tf.reset_default_graph()

1 | |

reset_graph() g = build_graph(num_steps = 40, bptt_steps = 20) sess = tf.InteractiveSession() sess.run(tf.initialize_all_variables())

X, Y = next(reader.ptb_iterator(train_data, batch_size=200, num_steps=40))

1 | |

%%timeit gvs_tf = sess.run(g[‘gvs_tf_style’], feed_dict={g[‘x’]:X, g[‘y’]:Y, g[‘dropout’]: 1})

10 loops, best of 3: 80.2 ms per loop

1 | |

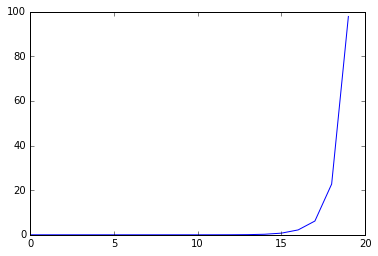

vanishing_grads, gvs = sess.run([g[‘vanishing_grads’], g[‘gvs_true_bptt’]], feed_dict={g[‘x’]:X, g[‘y’]:Y, g[‘dropout’]: 1}) vanishing_grads = np.array(vanishing_grads) weights = gvs[1][1]

sum all the grads from each loss node

vanishing_grads = np.sum(vanishing_grads, axis=0)

now calculate the l1 norm at each bptt step

vanishing_grads = np.sum(np.sum(np.abs(vanishing_grads),axis=1),axis=1)

vanishing_grads

1 | |

for i in range(len(vanishing_grads) - 1): print(vanishing_grads[i+1] / vanishing_grads[i])

1 | |

Quick accuracy test

A sanity check to make sure the true truncated backpropagation algorithm is doing the right thing.

1 | |

Experiment

1 | |

Results

1 | |

Here are the results in a table:

Minimum validation loss achieved in 20 epochs:

|BPTT Steps|5||| |Learning Rate|0.003|0.001|0.0003| |True (20-seq)|5.12|5.01|5.09| |TF Style|5.21|5.04|5.04| |||| |BPTT Steps|10||| |Learning Rate|0.003|0.001|0.0003| |True (30-seq)|5.07|5.00|5.12| |TF Style|5.15|5.03|5.05| |||| |BPTT Steps|20||| |Learning Rate|0.003|0.001|0.0003| |True (40-seq)|5.05|5.00|5.15| |TF Style|5.11|4.99|5.08| |||| |BPTT Steps|40||| |Learning Rate|0.003|0.001|0.0003| |TF Style|5.05|4.99|5.15|

Discussion

As you can see, true truncated backpropagation seems to have an advantage over Tensorflow-style truncated backpropagation when truncating errors at the same number of steps. However, this advantage completely disappears (and actually reverses) when comparing true truncated backpropagation to Tensorflow-style truncated backpropagation that uses the same sequence length.

This suggests two things:

-

Because a well-implemented true truncated backpropagation algorithm would run about as fast as full backpropagation over the sequence, and full backpropagation performs slightly better, it is most likely not worth implementing an efficient true truncated backpropagation algorithm.

-

Since true truncated backpropagation outperforms Tensorflow-style truncated backpropagation when truncating errors to the same number of steps, we might conclude that Tensorflow-style truncated backpropagation does not effectively backpropagate errors the full n-steps. Thus, if you need to capture n-step dependencies with Tensorflow-style truncated backpropagation, you may benefit from using a num_steps that is appreciably higher than n in order to effectively backpropagate errors the desired n steps.

Edit: After writing this post, I discovered that this distinction between styles of truncated backpropagation is discussed in Williams and Zipser (1992), Gradient-Based Learning Algorithms for Recurrent Networks and Their Computation Complexity. The authors refer to the “true” truncated backpropagation as “truncated backpropagation” or BPTT(n) [or BPTT(n, 1)], whereas they refer to Tensorflow-style truncated backpropagation as “epochwise truncated backpropagation” or BPTT(n, n). They also allow for semi-epochwise truncated BPTT, which would do a backward pass more often than once per sequence, but less often than all possible times (i.e., in Ilya Sutskever’s language used above, this would be BPTT(k2, k1), where 1 < k1 < k2).

In Williams and Peng (1990), An Efficien Gradient-Based Algorithm for On-Line Training of Recurrent Network Trajectories, the authors conduct a similar experiment to the one in the post, and reach similar conclusions. In particular, Williams Peng write that: “The results of these experiments have been that the success rate of BPTT(2h; h) is essentially identical to that of BPTT(h)”. In other words, they compared “true” truncated backpropagation, with h steps of truncation, to BPTT(2h, h), which is similar to Tensorflow-style backpropagation and has 2h steps of truncation, and found that they performed similarly.