Since the original Artistic style transfer paper and Justin Johnson’s subsequent Torch implementation of the algorithm were released, I’ve been playing with various other ways to use the algorithm. Here’s a quick dump of the results of my experiments. None of these are particularly rigorous, and I think there’s plenty of room for improvement.

Super resolution

I was wondering if you could use style transfer to create super-resolution images, i.e. use a low-resolution image of something as the content image and a high-resolution image of something similar as the “style”. The result looks as follows:

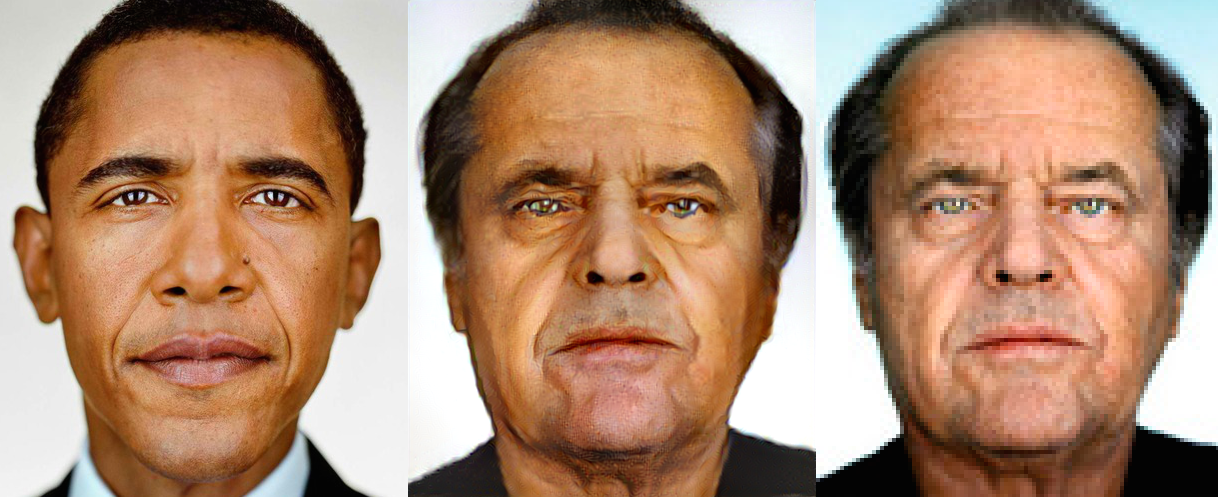

Left: high-res image of Obama. Middle: after style transfer. Right: low-res image of Nicholson.

Conclusion: Yeah, sort of. You get some interesting artefacts.

Style drift

One of the things that struck me about the results of style transfer (see for example this excellent, comprehensive experiment by Kyle McDonald) is that a single iteration of style transfer mostly transfers texture, i.e. high-frequency correlations. The result still maintains most of the exact geometries of the photo, but painted with similar visual components from the style image.
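One way to see why mostly texture gets transferred: in the original paper the style term compares Gram matrices, i.e. correlations between feature channels summed over all positions, so the spatial layout of the style image drops out. Here is a minimal sketch in plain Python; the tiny `features` lists are made-up numbers standing in for one layer's activations, not output from a real network.

```python
def gram_matrix(features):
    """Channel-by-channel correlations of one layer's activations.

    `features` is a list of channels, each a flat list holding one
    activation per spatial position.
    """
    return [[sum(a * b for a, b in zip(fi, fj)) for fj in features]
            for fi in features]


# Made-up activations: 2 channels, 3 spatial positions.
features = [[1, 2, 3], [4, 5, 6]]
# Same activations with the positions permuted identically in both channels.
shuffled = [[3, 1, 2], [6, 4, 5]]

# The two Gram matrices are identical, so a loss built on them
# cannot tell where in the image a feature occurred.
same_style = gram_matrix(features) == gram_matrix(shuffled)
print(same_style)
```

Because the sum runs over positions, moving features around changes nothing, which fits the observation that geometry comes from the content image and only the "paint" comes from the style.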

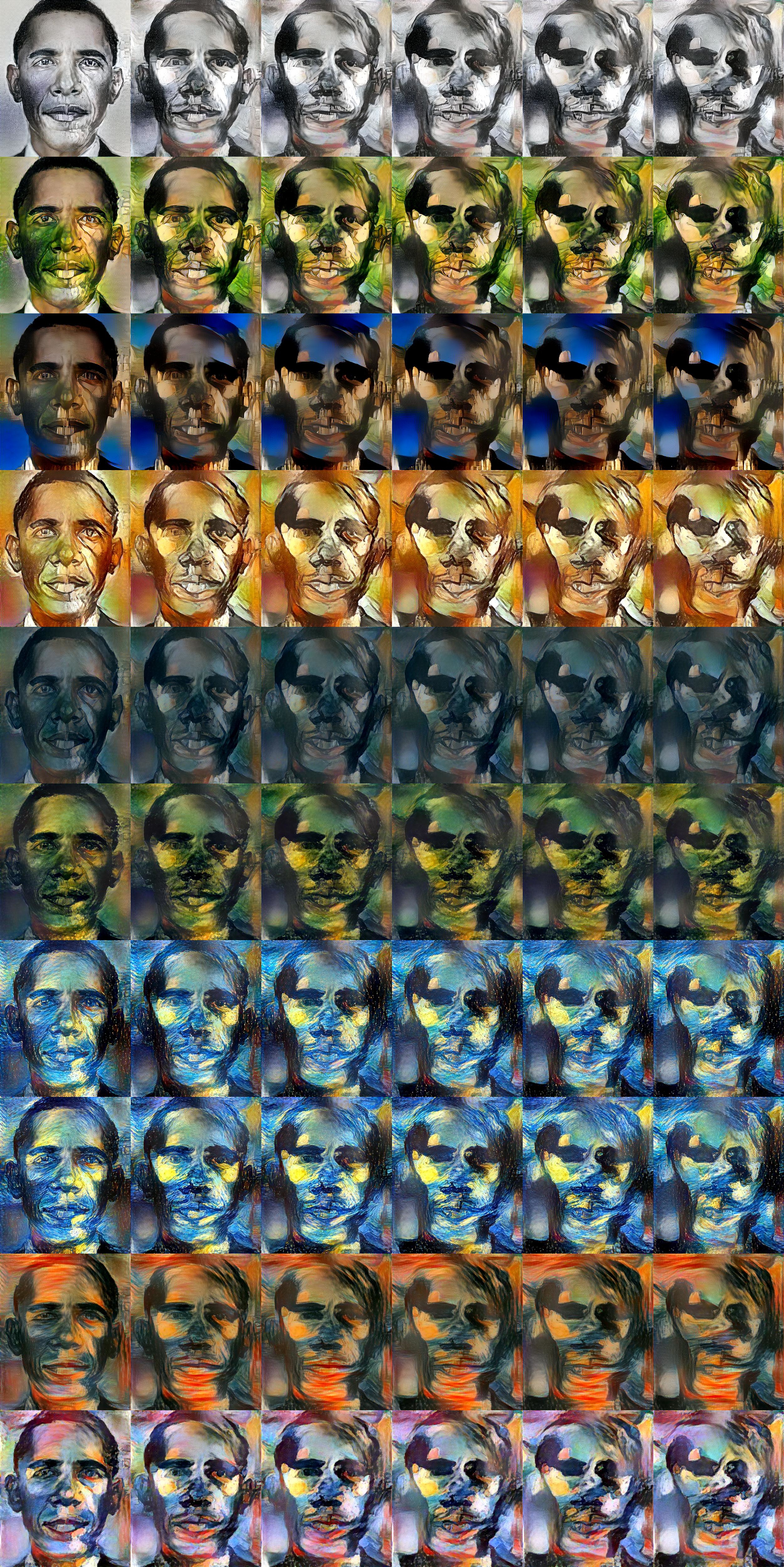

In this style drift experiment I basically apply different styles iteratively to let the image drift from its original. I.e. say you have 4 styles: you apply 1, then 2, then 3, then 4, then 1 again and so forth. Each style pushes in a different direction - like a game of telephone - so by the time you get back to the same style the image is significantly (but not randomly) distorted. It’s an interesting way to remix different aspects of different styles.
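The drift loop itself is simple. A rough sketch follows, where `transfer(content, style)` is a hypothetical stand-in for one full style-transfer run (it is not a function from any particular implementation), and a toy string-based version is used so the sketch runs without a GPU.

```python
from itertools import cycle, islice


def style_drift(image, styles, transfer, rounds=3):
    """Cycle through the styles, feeding each result back in as the next content image.

    `transfer(content, style)` is a hypothetical placeholder for one
    complete style-transfer run.
    """
    frames = [image]
    for style in islice(cycle(styles), rounds * len(styles)):
        image = transfer(image, style)
        frames.append(image)
    return frames


def toy_transfer(content, style):
    """Toy stand-in: 'images' are strings that record which styles were applied."""
    return f"{content}>{style}"


frames = style_drift("photo", ["s1", "s2", "s3", "s4"], toy_transfer, rounds=2)
print(frames[5])
```

Keeping every intermediate result in `frames` is what makes the video version easy: each entry is one frame of the drift.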

The drift is more striking when viewed as a video:

Update: Jan 3rd 2016:

Images from top-level activations only

The paper covers this, but I thought it would be fun to try regenerating an image using only the highest-layer content activations, with no style. Interestingly, it’s actually kind of a nice effect.

Also makes a cool video. You can see that the minimization does not by any means go linearly to the target.
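For reference, in the notation of the original paper the objective is a weighted sum of a content term and a style term, and this experiment amounts to switching the style term off. Which layer $l$ counts as "highest" here is not pinned down in these notes, so treat the layer choice as an assumption.

$$
\mathcal{L}_{total} = \alpha \, \mathcal{L}_{content} + \beta \, \mathcal{L}_{style},
\qquad
\mathcal{L}_{content} = \frac{1}{2} \sum_{i,j} \left( F^{l}_{ij} - P^{l}_{ij} \right)^{2}
$$

Here $F^{l}$ holds the layer-$l$ activations of the image being generated and $P^{l}$ those of the content photo; setting $\beta = 0$ leaves only the content term to minimize.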

Localized style

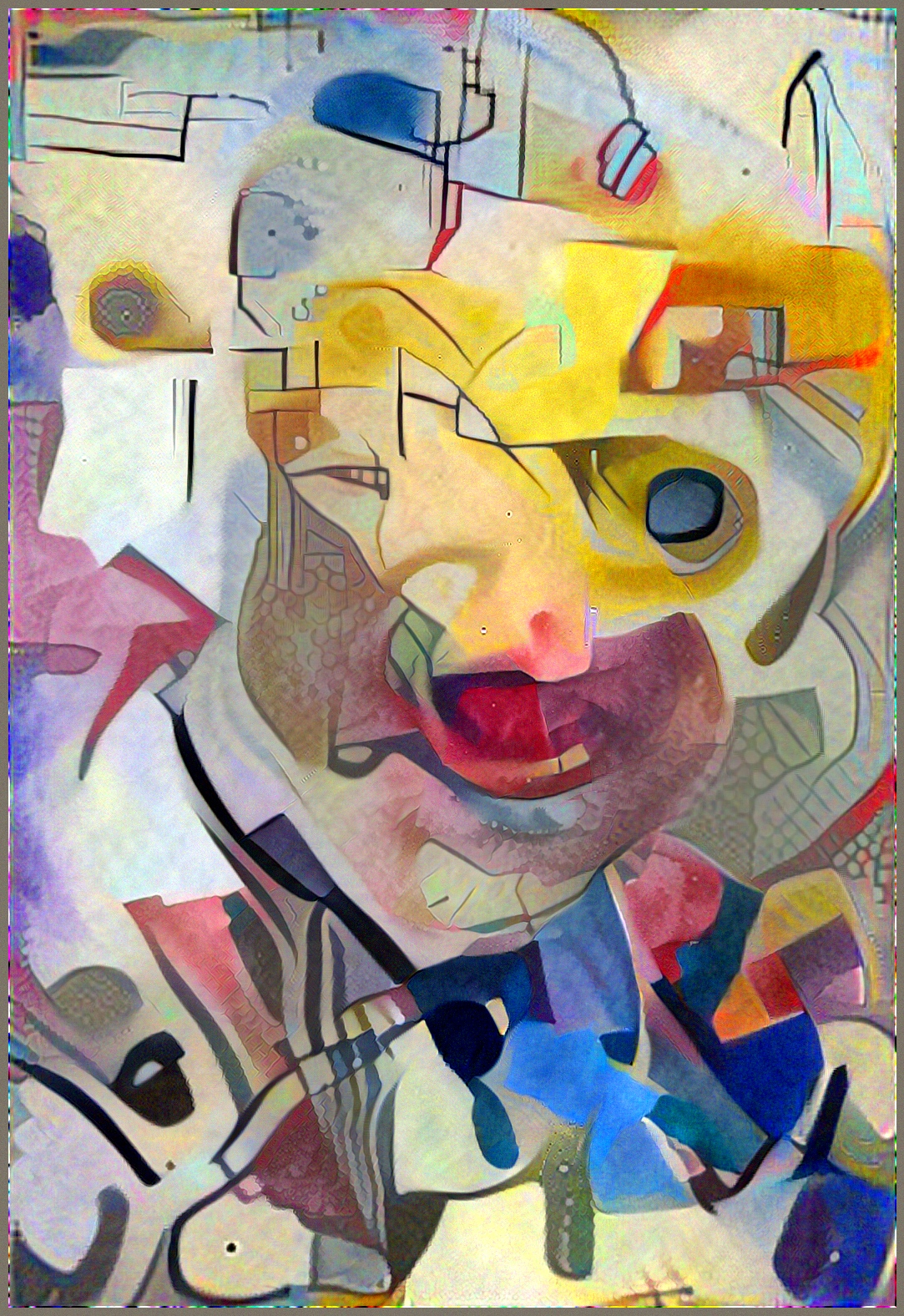

The original implementations were highly limited by resolution (I’d run out of GPU RAM on my Tesla for anything larger than 500x500 or so) so I started hacking one of the implementations to use a moving patch instead. The patch moves around in the target image and proportionally in the content/style images. This leads to an interesting effect where suddenly the style is a little bit location dependent - essentially now the content transfer is somewhere between pure style (no locality at all) and pure content (high locality due to the locality of the convolutional filter banks). This leads to some fun side-effects:

Here is an image generated by having no content image and transferring localized style from a natural photo:

I really like this effect actually.

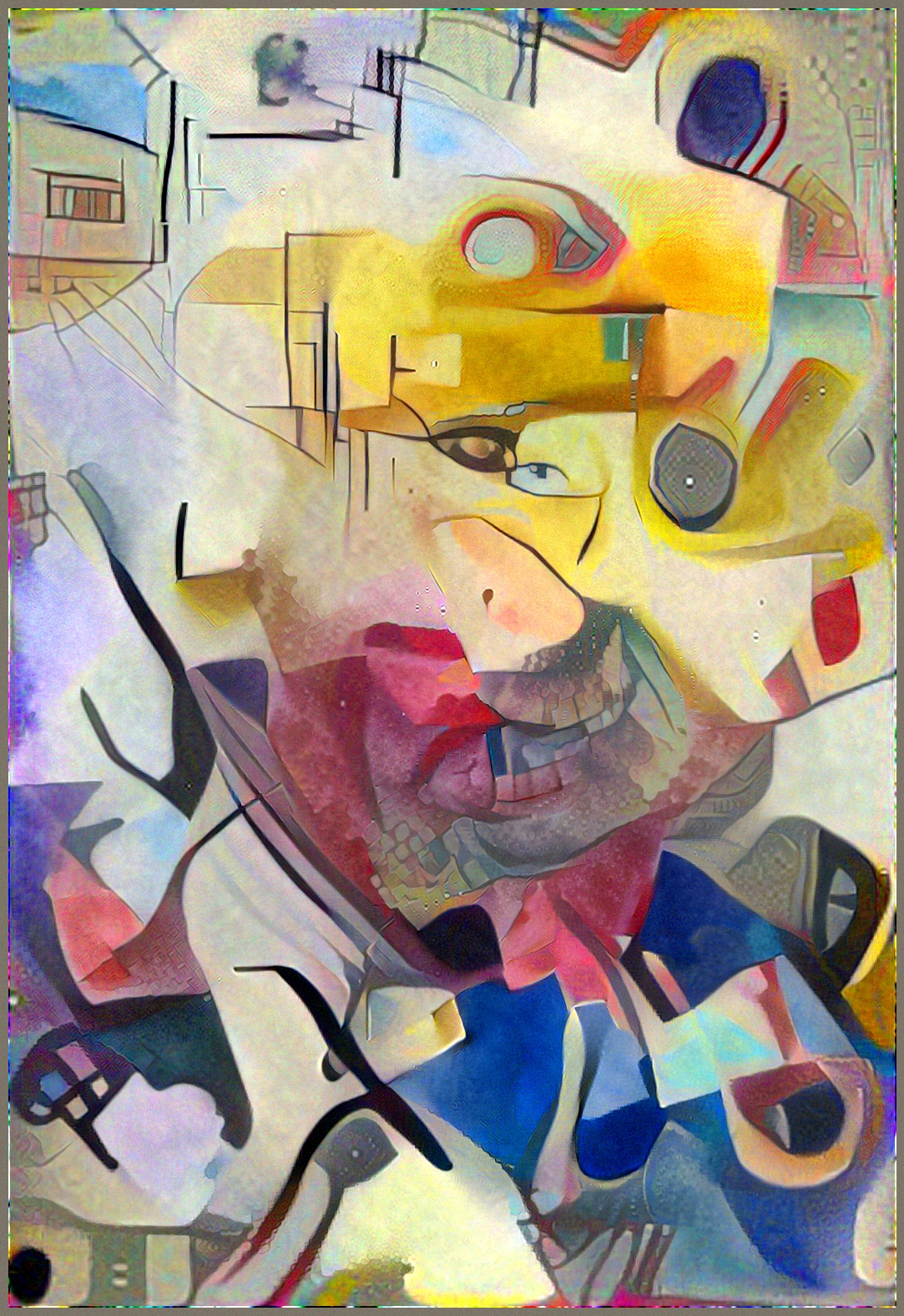
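To make the moving patch concrete, here is a small sketch of the bookkeeping. It assumes "proportionally" means the patch sits at the same relative position (and covers the same relative area) in the content and style images; the function names are made up for illustration and are not taken from the implementation I hacked on.

```python
import random


def sample_patch(target_size, patch_size, rng):
    """Pick a random top-left corner so the patch lies fully inside the target."""
    tw, th = target_size
    pw, ph = patch_size
    return rng.randint(0, tw - pw), rng.randint(0, th - ph)


def proportional_patch(x, y, target_size, patch_size, other_size):
    """Map a target patch to the box at the same relative position in another image.

    Returns (x, y, width, height) in the other image's pixel coordinates.
    """
    tw, th = target_size
    pw, ph = patch_size
    ow, oh = other_size
    sx, sy = ow / tw, oh / th
    return round(x * sx), round(y * sy), round(pw * sx), round(ph * sy)


rng = random.Random(0)
target, patch, style = (2000, 1000), (500, 500), (1000, 500)
x, y = sample_patch(target, patch, rng)
style_box = proportional_patch(x, y, target, patch, style)
```

With this mapping a patch in the top-left of the target only ever sees the top-left of the style image, which is where the location dependence comes from.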

Multiscale

Making images large and using a patch works great, but of course the scale of the transferred style features now gets smaller compared to the size of the image. To get bigger features transferred, we need multiscale style transfer. I ended up implementing this in a stochastic way, where at each batch step not only the position of the patch gets drawn out of a hat but also the scale. Everything is then rescaled to the native size of the image and applied. This seems to work quite nicely, although I’m getting some strange overflow effect here and there that I haven’t quite ironed out yet.
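Roughly, the "out of a hat" step could look like the sketch below. This is an illustration under assumptions, not the code from my fork: the list of scales, the square patch, and the name `sample_multiscale_patch` are all made up, and I am assuming each crop is resized so the network always sees a patch of the same size.

```python
import random


def sample_multiscale_patch(image_size, base_patch, scales, rng):
    """Draw a scale and a position; return the crop box and its resize factor.

    The crop covers `base_patch * scale` pixels per side (clamped to the image)
    and would be resized by the returned factor so the network always sees a
    `base_patch`-sized input. Scales above 1 give larger style features.
    """
    w, h = image_size
    scale = rng.choice(scales)
    side = min(int(base_patch * scale), w, h)
    x = rng.randint(0, w - side)
    y = rng.randint(0, h - side)
    return (x, y, side, side), base_patch / side


rng = random.Random(1)
box, factor = sample_multiscale_patch((1600, 1200), 400, [0.5, 1.0, 2.0], rng)
```

The update computed on the resized crop would then be scaled back by `1 / factor` before being applied to the full-size image.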

I still get some strange artifacts I need to resolve, but overall I’m happy with the higher-res versions. The code for this is a fork of Anders Larsen’s Python implementation in DeepPy, which I extended to add the multiscale, high-res stuff.

Style transfer zoom movie

Hey this worked really well for DeepDream - why not try it for style transfer?
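For anyone unfamiliar with the DeepDream zoom trick: the usual recipe is to crop slightly into the centre of each finished frame, scale the crop back up to full size, and use that as the starting point for the next frame. The helper below is only a sketch of the crop arithmetic under that assumption; the name `zoom_crop_box` and the zoom factor are made up for illustration.

```python
def zoom_crop_box(width, height, zoom=1.05):
    """Centred crop box that zooms in by `zoom` once resized back to (width, height).

    Returns (left, top, right, bottom) in pixel coordinates.
    """
    cw, ch = round(width / zoom), round(height / zoom)
    left, top = (width - cw) // 2, (height - ch) // 2
    return left, top, left + cw, top + ch


box = zoom_crop_box(1000, 500, zoom=1.25)
```

Each frame would then be: crop to `box`, resize to the original size, run a few style transfer iterations, save, repeat.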

Other parameters:

https://youtu.be/gOSCxHNvUFs

https://youtu.be/XxU5CbY3004

https://youtu.be/GXQqzC2oxDI