If I go there will be trouble…

…and if I stay it will be double.

Is Data Scientist a useless job title?

Data science can be defined as either the intersection or union of software engineering and statistics. In recent years, the field seems to be gravitating towards the broader unifying definition, where everyone who touches data in some way can call themselves a data scientist. Hence, while many people whose job title is Data Scientist do very useful work, the title itself has become fairly useless as an indication of what the title holder actually does. This post briefly discusses how we got to this point, where I think the field is likely to go, and what data scientists can do to remain relevant.

Efficient Guttering

What’s the most efficient shape to make a gutter?
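The post's own answer isn't reproduced here. One common textbook way to frame the question is to fold a flat sheet of width w into a trough with a flat base and two equal side walls of length x, tilted at angle θ from the horizontal, and then maximise the cross-sectional area. The sketch below brute-forces that framing on a grid. The setup and the function names (`gutter_area`, `best_trapezoid`, `semicircle_area`) are hypothetical illustrations, not the post's analysis.

```python
import math


def gutter_area(w, x, theta):
    """Cross-sectional area of a trough folded from a sheet of width w.

    Hypothetical setup: two side walls of length x rise at angle theta
    (radians, measured from the horizontal) on either side of a flat base
    of width w - 2x, so the cross-section is a trapezoid.
    """
    base = w - 2 * x
    height = x * math.sin(theta)
    top = base + 2 * x * math.cos(theta)
    return 0.5 * (base + top) * height


def best_trapezoid(w, x_steps=300, angle_steps=180):
    """Brute-force grid search over wall length x in [0, w/2] and angle in [0, pi]."""
    best = (0.0, 0.0, 0.0)  # (area, x, theta)
    for i in range(x_steps // 2 + 1):
        x = w * i / x_steps
        for j in range(angle_steps + 1):
            theta = math.pi * j / angle_steps
            area = gutter_area(w, x, theta)
            if area > best[0]:
                best = (area, x, theta)
    return best


def semicircle_area(w):
    """Area of a semicircular gutter bent from the same sheet: arc length pi*r = w."""
    r = w / math.pi
    return 0.5 * math.pi * r ** 2


if __name__ == "__main__":
    area, x, theta = best_trapezoid(1.0)
    print(round(x, 3), round(math.degrees(theta)), round(area, 4))  # 0.333 60 0.1443
    print(round(gutter_area(1.0, 0.25, math.pi / 2), 4))  # 0.125 (best rectangular trough)
    print(round(semicircle_area(1.0), 4))  # 0.1592
```

Within this family the search lands on x = w/3 and θ = 60°, which is half a regular hexagon with area √3·w²/12, beating the best rectangular trough (x = w/4, area w²/8). A semicircle bent from the same sheet holds more still (w²/(2π)), so the "most efficient" answer depends on which shapes you allow.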

My Open-Source Machine Learning Masters (in Casablanca, Morocco)

The Open-Source Machine Learning Masters (OSMLM) is a self-curated deep-dive into select topics in machine learning and distributed computing. Educational resources are derived from online courses (MOOCs), textbooks, predictive modeling competitions, academic research (arXiv), and the open-source software community. In machine learning, both the quantity and the quality of these resources, all available for free or at a trivial cost, are truly f*cking amazing.

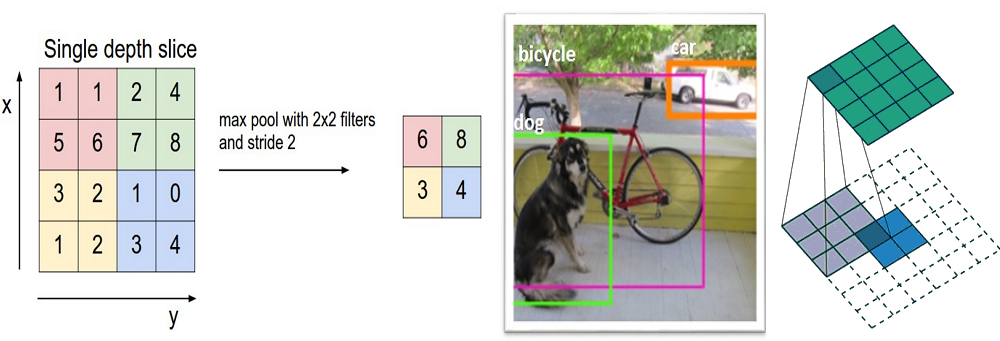

A Beginner's Guide To Understanding Convolutional Neural Networks Part 2

Talk: Building Machines that Imagine and Reason

I am excited to be one of the speakers at this year’s Deep Learning Summer School in Montreal on 6 August 2016.

Decision Trees Tutorial

Would you survive a disaster?

Written Memories: Understanding, Deriving and Extending the LSTM

When I was first introduced to Long Short-Term Memory networks (LSTMs), it was hard to look past their complexity. I didn’t understand why they were designed the way they were, just that they worked. It turns out that LSTMs can be understood, and that, despite their superficial complexity, LSTMs are actually based on a couple of incredibly simple, even beautiful, insights into neural networks. This post is what I wish I had when first learning about recurrent neural networks (RNNs).
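As a concrete reference point, here is a minimal NumPy sketch of one forward step of the standard LSTM cell: forget, input and output gates plus a candidate write, combined into an additive cell-state update. This is the common textbook formulation, not necessarily the derivation this post arrives at; the function name `lstm_step` and the stacked weight layout (one matrix `W` applied to the concatenation of `h_prev` and `x`) are assumptions for illustration.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def lstm_step(x, h_prev, c_prev, W, b):
    """One forward step of a standard LSTM cell (hypothetical helper).

    x: input, shape (input_size,)
    h_prev, c_prev: previous hidden and cell state, shape (hidden_size,)
    W: stacked gate weights, shape (4 * hidden_size, hidden_size + input_size)
    b: stacked gate biases, shape (4 * hidden_size,)
    """
    n = h_prev.shape[0]
    z = W @ np.concatenate([h_prev, x]) + b
    f = sigmoid(z[0:n])          # forget gate: how much of the old cell state to keep
    i = sigmoid(z[n:2 * n])      # input gate: how much of the candidate to write
    o = sigmoid(z[2 * n:3 * n])  # output gate: how much of the cell state to expose
    g = np.tanh(z[3 * n:4 * n])  # candidate values to write
    c = f * c_prev + i * g       # additive update of the cell state
    h = o * np.tanh(c)           # new hidden state
    return h, c


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    hidden, inputs = 4, 3
    W = rng.normal(scale=0.1, size=(4 * hidden, hidden + inputs))
    b = np.zeros(4 * hidden)
    h, c = np.zeros(hidden), np.zeros(hidden)
    for x in rng.normal(size=(5, inputs)):  # a toy sequence of 5 time steps
        h, c = lstm_step(x, h, c, W, b)
    print(h.shape, c.shape)  # (4,) (4,)
```

The line `c = f * c_prev + i * g` is the one worth staring at: with the forget gate near 1 and the input gate near 0, the cell state passes through a step unchanged, which is one standard explanation of why LSTMs handle long-range dependencies better than vanilla RNNs.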

Re-work Interview Questions

I’m talking at the Re-work event on deep learning in London on 22-23 September. They asked a few questions for a pre-event interview, which is published on their blog; I’ve reposted my answers below.

Recurrent Neural Networks in Tensorflow II

This is the second in a series of posts about recurrent neural networks in Tensorflow. The first post lives here. In this post, we will build upon our vanilla RNN by learning how to use Tensorflow’s scan and dynamic_rnn functions, upgrading the RNN cell and stacking multiple RNNs, and adding dropout and layer normalization. We will then use our upgraded RNN to generate some text, character by character.
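The idea behind using scan for an RNN is that unrolling the network over a sequence is a fold that keeps every intermediate state. The sketch below shows that idea in plain NumPy rather than TensorFlow. `scan` is a hypothetical stand-in that loosely mirrors the `tf.scan(fn, elems, initializer)` convention, where `fn` receives the accumulated state and the next element; `make_rnn_step` (also hypothetical) builds a vanilla tanh cell with optional layer normalization of its pre-activations. Stacking is shown by scanning a second cell over the first layer's outputs. Dropout and dynamic_rnn are not covered, and none of this is the post's own code.

```python
import numpy as np


def scan(fn, elems, initializer):
    """Apply fn(state, elem) across elems, returning every intermediate state.

    Loosely mirrors the calling convention of tf.scan(fn, elems, initializer).
    """
    state = initializer
    outputs = []
    for elem in elems:
        state = fn(state, elem)
        outputs.append(state)
    return np.stack(outputs)


def layer_norm(v, gain, bias, eps=1e-5):
    """Normalise a vector of pre-activations across its units, then rescale and shift."""
    return gain * (v - v.mean()) / np.sqrt(v.var() + eps) + bias


def make_rnn_step(W_x, W_h, b, gain=None, bias=None):
    """Build a vanilla tanh RNN step; layer norm is applied to the pre-activation if gain is given."""
    def step(h_prev, x):
        pre = W_x @ x + W_h @ h_prev + b
        if gain is not None:
            pre = layer_norm(pre, gain, bias)
        return np.tanh(pre)
    return step


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    steps, n_in, n_hidden = 6, 3, 5
    xs = rng.normal(size=(steps, n_in))  # toy input sequence

    # Layer 1: input -> hidden, with layer normalization.
    step1 = make_rnn_step(rng.normal(scale=0.5, size=(n_hidden, n_in)),
                          rng.normal(scale=0.5, size=(n_hidden, n_hidden)),
                          np.zeros(n_hidden),
                          gain=np.ones(n_hidden), bias=np.zeros(n_hidden))
    states1 = scan(step1, xs, np.zeros(n_hidden))

    # Layer 2: stacked on top, consuming layer 1's states as its inputs.
    step2 = make_rnn_step(rng.normal(scale=0.5, size=(n_hidden, n_hidden)),
                          rng.normal(scale=0.5, size=(n_hidden, n_hidden)),
                          np.zeros(n_hidden))
    states2 = scan(step2, states1, np.zeros(n_hidden))
    print(states1.shape, states2.shape)  # (6, 5) (6, 5)
```

In TensorFlow the step function would be written with tensor ops and handed to tf.scan, which keeps the whole unrolled computation differentiable; the Python loop here only illustrates the data flow.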