Neural Task Planning with And-Or Graph Representations

Some clues that this study has big big problems

Paul Alper writes:

Distilled News

Apache NiFi

Synesthesia: The Sound of Style

Are fashion stylists musicians? We think so, even though they may not know it!

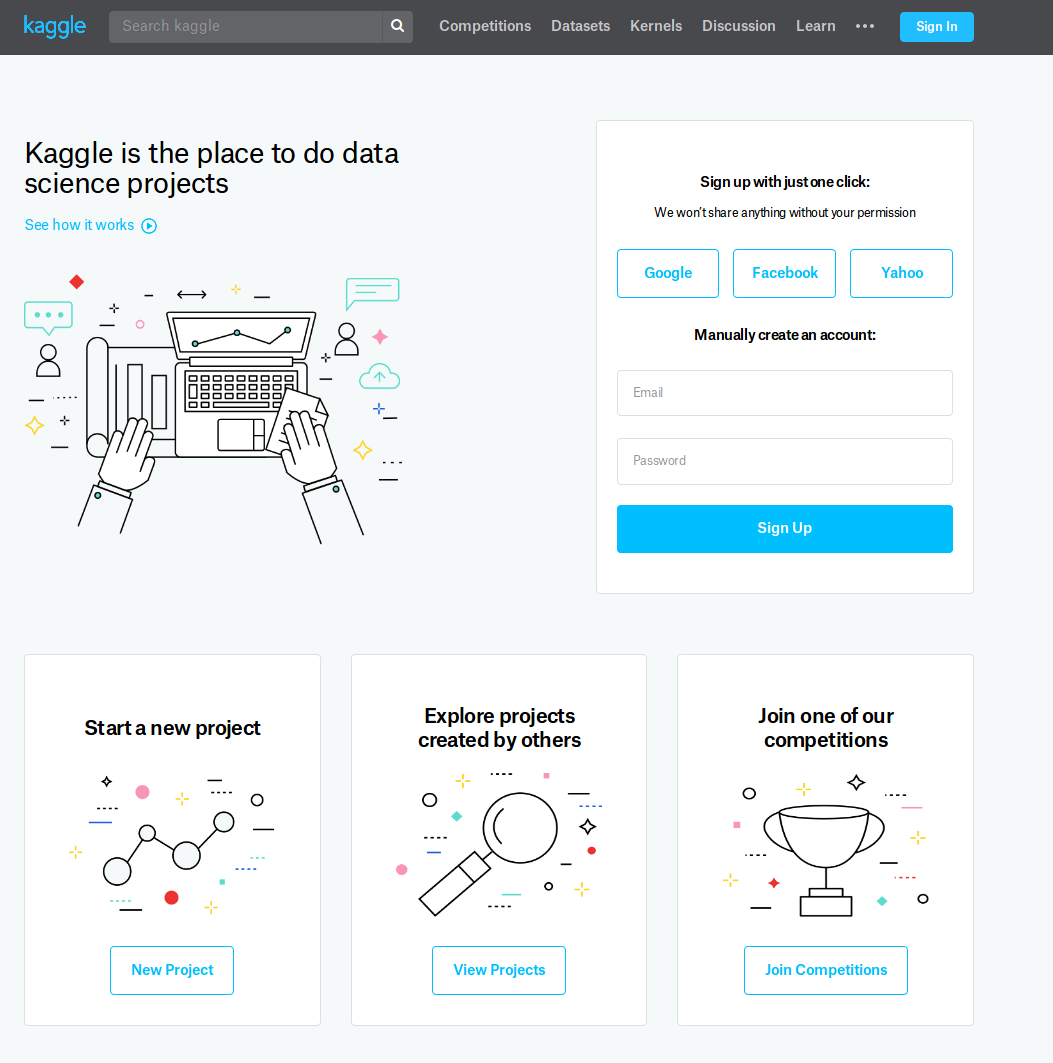

Use Kaggle to start (and guide) your ML/Data Science journey: Why and How

https://www.kaggle.com/

If you did not already know

Deep Local Binary Patterns (Deep LBP): Local Binary Pattern (LBP) is a traditional descriptor for texture analysis that has gained attention over the last decade. Thanks to robustness properties such as invariance to illumination translation and scaling, LBPs have achieved state-of-the-art results in several applications. However, LBPs cannot capture high-level features from the image; they only encode features with low levels of abstraction. In this work, we propose Deep LBP, which borrows ideas from the deep learning community to improve LBP expressiveness. By using parametrized, data-driven LBPs, we enable successive applications of the LBP operator with increasing levels of abstraction. We validate the relevance of the proposed idea on several datasets from a wide range of applications. Deep LBP improved the performance of traditional and multiscale LBP in all cases. …
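
For readers new to LBP, the classic operator that Deep LBP starts from fits in a few lines. This is a minimal sketch under our own assumptions (3×3 neighbourhood, 8 neighbours read clockwise from the top-left, a ">=" comparison against the centre, and a 256-bin histogram as the descriptor); the function names are hypothetical, and it is not the paper's parametrized, data-driven Deep LBP.

```python
from typing import List

Image = List[List[int]]

# Clockwise neighbour offsets, starting at the top-left pixel (assumed order).
OFFSETS = [(-1, -1), (-1, 0), (-1, 1), (0, 1),
           (1, 1), (1, 0), (1, -1), (0, -1)]


def lbp_code(img: Image, r: int, c: int) -> int:
    """8-bit LBP code of the interior pixel (r, c).

    Bit k is set when neighbour k is at least as bright as the centre.
    """
    center = img[r][c]
    code = 0
    for bit, (dr, dc) in enumerate(OFFSETS):
        if img[r + dr][c + dc] >= center:
            code |= 1 << bit
    return code


def lbp_histogram(img: Image) -> List[int]:
    """Texture descriptor: 256-bin histogram of codes over all interior pixels."""
    hist = [0] * 256
    for r in range(1, len(img) - 1):
        for c in range(1, len(img[0]) - 1):
            hist[lbp_code(img, r, c)] += 1
    return hist


if __name__ == "__main__":
    patch = [[10, 20, 30], [40, 50, 60], [70, 80, 90]]
    print(lbp_code(patch, 1, 1))  # → 120
    # An increasing gray-level change (contrast x2, brightness +5) keeps every code.
    brighter = [[2 * v + 5 for v in row] for row in patch]
    print(lbp_histogram(patch) == lbp_histogram(brighter))  # → True
```

Because each bit only records whether a neighbour is at least as bright as the centre, any strictly increasing gray-level transformation, such as a brightness shift or a contrast scaling, leaves the codes unchanged. The same comparison is also why a single LBP layer only sees very local, low-level structure.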

Document worth reading: “A Comparative Study on using Principle Component Analysis with Different Text Classifiers”

Text categorization (TC) is the task of automatically organizing a set of documents into a set of predefined categories. Over the last few years, increased attention has been paid to documents in digital form, which makes text categorization a challenging issue. The most significant problem in text categorization is the huge number of features. Most of these features are redundant, noisy, or irrelevant, and they cause overfitting in most classifiers. Hence, feature extraction is an important step for improving the overall accuracy and performance of text classifiers. In this paper, we provide an overview of using principal component analysis (PCA) for feature extraction with various classifiers. We observed that the classifiers performed better after PCA was used to reduce the dimensionality of the data. Experiments are conducted on three UCI data sets: Classic03, CNAE-9, and DBWorld e-mails. We compare the classification performance obtained by combining PCA with popular, well-known text classifiers. Results show that using PCA enhances classification performance for most of the classifiers.
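
The pipeline the paper studies (PCA as a feature-extraction step, then a classifier trained on the reduced features) can be sketched in dependency-free Python. Assumptions, all ours: documents are already dense numeric vectors (for example, term counts), PCA uses the sample covariance with power iteration plus deflation to find the leading components, and a nearest-centroid rule stands in for the classifiers compared in the paper. Every function name is hypothetical; this is not the authors' code.

```python
import math
import random
from typing import List, Sequence, Tuple

Matrix = List[List[float]]


def center(X: Matrix) -> Tuple[Matrix, List[float]]:
    """Subtract the per-feature mean from every row."""
    n, d = len(X), len(X[0])
    mean = [sum(row[j] for row in X) / n for j in range(d)]
    return [[row[j] - mean[j] for j in range(d)] for row in X], mean


def covariance(Xc: Matrix) -> Matrix:
    """Sample covariance (n - 1 denominator) of already-centred data."""
    n, d = len(Xc), len(Xc[0])
    return [[sum(r[a] * r[b] for r in Xc) / (n - 1) for b in range(d)]
            for a in range(d)]


def top_components(C: Matrix, k: int, iters: int = 500, seed: int = 0) -> Matrix:
    """Leading k eigenvectors of a symmetric matrix (power iteration + deflation)."""
    rng = random.Random(seed)
    d = len(C)
    C = [row[:] for row in C]  # copy: deflation modifies the matrix
    comps = []
    for _ in range(k):
        v = [rng.random() + 0.1 for _ in range(d)]  # positive random start
        s = math.sqrt(sum(x * x for x in v))
        v = [x / s for x in v]
        for _ in range(iters):
            w = [sum(C[i][j] * v[j] for j in range(d)) for i in range(d)]
            norm = math.sqrt(sum(x * x for x in w))
            if norm == 0.0:
                break  # no variance left in the remaining directions
            v = [x / norm for x in w]
        # Rayleigh quotient: eigenvalue belonging to the unit vector v.
        lam = sum(v[i] * sum(C[i][j] * v[j] for j in range(d)) for i in range(d))
        comps.append(v)
        # Deflate so the next pass converges to the next component.
        C = [[C[i][j] - lam * v[i] * v[j] for j in range(d)] for i in range(d)]
    return comps


def pca_fit(X: Matrix, k: int) -> Tuple[Matrix, List[float]]:
    """Return the top-k principal axes and the training mean."""
    Xc, mean = center(X)
    return top_components(covariance(Xc), k), mean


def pca_transform(x: Sequence[float], comps: Matrix, mean: List[float]) -> List[float]:
    """Project one (uncentred) sample onto the principal axes."""
    xc = [a - m for a, m in zip(x, mean)]
    return [sum(a * c for a, c in zip(xc, comp)) for comp in comps]


def nearest_centroid(Z: Matrix, labels: Sequence[str], z: List[float]) -> str:
    """Predict the label whose class mean in PCA space is closest to z."""
    best, best_dist = "", math.inf
    for label in sorted(set(labels)):
        pts = [p for p, l in zip(Z, labels) if l == label]
        centroid = [sum(col) / len(pts) for col in zip(*pts)]
        dist = sum((a - b) ** 2 for a, b in zip(centroid, z))
        if dist < best_dist:
            best, best_dist = label, dist
    return best


if __name__ == "__main__":
    # Toy 2-feature data standing in for (much higher-dimensional) term vectors.
    X = [[-3.0, -1.0], [3.0, -1.0], [-3.0, 1.0], [3.0, 1.0]]
    y = ["neg", "pos", "neg", "pos"]
    comps, mean = pca_fit(X, k=1)  # keep only the highest-variance axis
    Z = [pca_transform(x, comps, mean) for x in X]
    print(nearest_centroid(Z, y, pca_transform([2.5, 0.0], comps, mean)))  # → pos
```

Power iteration keeps the sketch self-contained; with real document-term matrices one would normally call a library SVD instead, and the classifier trained on `Z` can be any of the usual text classifiers.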

R Packages worth a look

NCA Calculations and Population Model Diagnosis (ncappc): A flexible tool that can perform (i) traditional non-compartmental analysis (NCA) and (ii) simulation-based posterior predictive checks for population …
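
Part (i), traditional NCA, rests on a handful of standard formulas: Cmax and Tmax read directly from the observed profile, the area under the curve by the trapezoidal rule, and the terminal elimination rate constant λz from a log-linear fit to the last samples, which also gives the half-life and the extrapolated AUC to infinity. The Python sketch below is an illustration under our own assumptions (linear trapezoidal rule only, a fixed number of terminal points, positive concentrations in that phase) with hypothetical function names; it is not ncappc's R interface or implementation. NCA software commonly offers further options, such as log-down trapezoidal AUC and automated selection of terminal points.

```python
import math
from typing import Dict, Sequence, Tuple


def cmax_tmax(t: Sequence[float], c: Sequence[float]) -> Tuple[float, float]:
    """Observed peak concentration and its time (first peak if tied)."""
    i = max(range(len(c)), key=lambda k: c[k])
    return c[i], t[i]


def auc_trapezoid(t: Sequence[float], c: Sequence[float]) -> float:
    """AUC from the first to the last sample by the linear trapezoidal rule."""
    return sum((t[i + 1] - t[i]) * (c[i] + c[i + 1]) / 2 for i in range(len(t) - 1))


def lambda_z(t: Sequence[float], c: Sequence[float], n_last: int = 3) -> float:
    """Terminal rate constant: minus the least-squares slope of ln(C) vs t
    over the last n_last samples (all must be > 0)."""
    ts = list(t[-n_last:])
    ys = [math.log(v) for v in c[-n_last:]]
    mt, my = sum(ts) / n_last, sum(ys) / n_last
    slope = (sum((a - mt) * (b - my) for a, b in zip(ts, ys))
             / sum((a - mt) ** 2 for a in ts))
    return -slope


def nca_summary(t: Sequence[float], c: Sequence[float], n_last: int = 3) -> Dict[str, float]:
    """Collect the basic NCA parameters for one concentration-time profile."""
    cmax, tmax = cmax_tmax(t, c)
    lz = lambda_z(t, c, n_last)
    auc_last = auc_trapezoid(t, c)
    return {
        "cmax": cmax,
        "tmax": tmax,
        "auc_last": auc_last,
        "lambda_z": lz,
        "half_life": math.log(2) / lz,
        # Tail beyond the last sample extrapolated as C_last / lambda_z.
        "auc_inf": auc_last + c[-1] / lz,
    }


if __name__ == "__main__":
    times = [0.0, 1.0, 2.0, 4.0, 8.0]   # hours (toy data)
    conc = [0.0, 8.0, 12.0, 6.0, 3.0]   # concentration units (toy data)
    summary = nca_summary(times, conc)
    print(summary["cmax"], summary["tmax"])  # → 12.0 2.0
    print(summary["auc_last"])               # → 50.0
    print(round(summary["half_life"], 3))
```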

Access Amazon S3 data managed by AWS Glue Data Catalog from Amazon SageMaker notebooks

If you’re a data scientist at one of the few companies with massive corporate datasets defined in an integrated system, and can analyze the data easily, lucky you! If you’re like the rest of the world, and need some help joining disparate datasets, analyzing the data, and visualizing the analysis in preparation for your machine learning work, then read on.

What's new on arXiv

Feature selection for transient stability assessment based on kernelized fuzzy rough sets and memetic algorithm