Introduction

What if I told you that there is a way to become a data scientist regardless of your programming skills? Most people think that programming proficiency is a must-have for becoming a data scientist. That is not completely true: data science is not all about programming anymore.

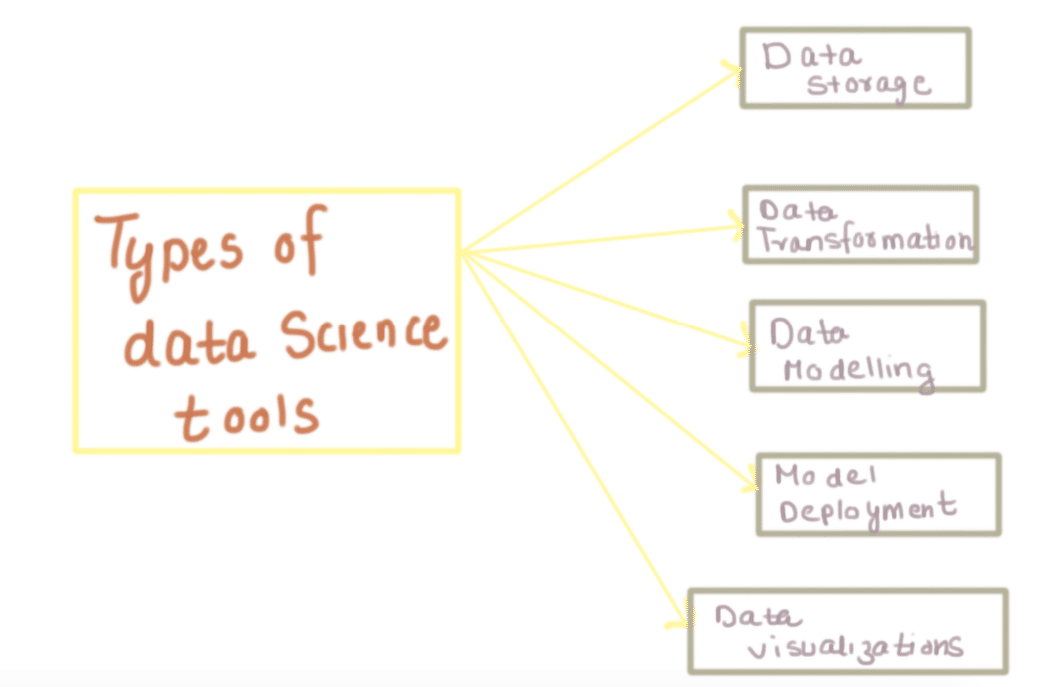

In this article, we will look at different tools for data scientists. Each tool covers a different aspect of data science, so data scientists can make their work easier by using the right tool for each task. Let us look at these tools in detail.

What are data science tools?

These are tools that typically remove the need for programming and provide a user-friendly GUI (Graphical User Interface), so that anyone with minimal knowledge of algorithms can use them to build high-quality machine learning models.

Many companies (especially startups) have recently launched GUI-driven data science tools. These tools cover different aspects of data science, such as data storage, data manipulation and data modeling.

Why data science tools?

- No programming experience required
- Better work management
- Faster results
- Better quality check mechanism
- Process uniformity

Different Data science tools

Data Storage

1. Apache Hadoop

Apache Hadoop is a free, Java-based software framework that can effectively store a large amount of data in a cluster. The framework runs in parallel on the cluster, which lets us process data across all nodes. The Hadoop Distributed File System (HDFS) is the storage system of Hadoop; it splits big data into blocks and distributes them across many nodes in the cluster. It also replicates data within the cluster, which provides high availability.
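To make the splitting and replication idea concrete, here is a toy Python sketch. It does not use any Hadoop API: the block size, the replication factor, the node names and the round-robin placement rule are all assumptions chosen for illustration, and real HDFS uses far larger blocks and its own placement policy.

```python
def split_into_blocks(data: bytes, block_size: int) -> list:
    """Cut the data into fixed-size blocks; the last block may be shorter."""
    return [data[i:i + block_size] for i in range(0, len(data), block_size)]


def place_replicas(num_blocks: int, nodes: list, replication: int) -> dict:
    """Assign each block to `replication` distinct nodes, round-robin (toy policy)."""
    if replication > len(nodes):
        raise ValueError("replication factor cannot exceed the number of nodes")
    return {
        block_id: [nodes[(block_id + r) % len(nodes)] for r in range(replication)]
        for block_id in range(num_blocks)
    }


def surviving_blocks(placement: dict, failed_node: str) -> set:
    """Return the blocks that still have at least one replica after a node fails."""
    return {
        block_id
        for block_id, holders in placement.items()
        if any(node != failed_node for node in holders)
    }


# Hypothetical cluster and file, for illustration only.
nodes = ["node-a", "node-b", "node-c", "node-d"]
blocks = split_into_blocks(b"x" * 1000, block_size=256)
placement = place_replicas(len(blocks), nodes, replication=3)
```

Because every block lives on three different nodes in this sketch, `surviving_blocks(placement, "node-a")` still returns every block id, which is the intuition behind the high availability mentioned above.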

2. Microsoft HDInsight

Microsoft HDInsight is a Big Data solution from Microsoft, powered by Apache Hadoop and available as a service in the cloud. HDInsight uses Windows Azure Blob storage as its default file system and provides high availability at low cost.

3. NoSQL

While traditional SQL databases can effectively handle a large amount of structured data, we need NoSQL (Not Only SQL) to handle unstructured data. NoSQL databases store data with no fixed schema, so each row can have its own set of columns. This flexibility can give NoSQL better performance when storing massive amounts of data. Many open-source NoSQL databases are available for analyzing Big Data.
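The idea that each row can carry its own set of columns can be sketched with plain Python dictionaries. This is only an illustration, not the API of any NoSQL product; the collection, the field names and the `find` helper are all made up.

```python
# A toy "collection": each record is a dict and may carry different fields.
users = [
    {"id": 1, "name": "Asha", "email": "asha@example.com"},
    {"id": 2, "name": "Ben", "tags": ["admin", "beta"]},
    {"id": 3, "name": "Chen", "address": {"city": "Pune"}, "email": "chen@example.com"},
]


def find(collection, **criteria):
    """Return records whose fields match all criteria; missing fields never match."""
    missing = object()
    return [
        doc for doc in collection
        if all(doc.get(key, missing) == value for key, value in criteria.items())
    ]


def fields_in_use(collection):
    """Collect the union of field names, since no fixed schema declares them."""
    return sorted({key for doc in collection for key in doc})
```

For example, `find(users, name="Ben")` returns only the second record, and `fields_in_use(users)` shows that the set of "columns" is discovered from the data rather than declared up front.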

4. Hive

Hive is a distributed data management system that runs on top of Hadoop. It supports a SQL-like query language, HiveQL (HQL), for accessing big data, and it is used primarily for data mining.
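To give a feel for what a SQL-like analytical query looks like, the sketch below runs a simple aggregate using Python's built-in `sqlite3` module as a stand-in, since no Hive cluster is assumed here. The `page_views` table and its columns are hypothetical. A similar `SELECT ... GROUP BY` statement can be written in HiveQL, although Hive would execute it over data stored in Hadoop rather than in a local database.

```python
import sqlite3

# In-memory SQLite database used only as a stand-in for a Hive table.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE page_views (user_id TEXT, page TEXT, duration_s INTEGER)")
conn.executemany(
    "INSERT INTO page_views VALUES (?, ?, ?)",
    [
        ("u1", "home", 12),
        ("u2", "home", 30),
        ("u1", "pricing", 45),
        ("u3", "pricing", 15),
        ("u3", "home", 6),
    ],
)

# A typical analytical query: number of views and average time per page.
query = """
    SELECT page, COUNT(*) AS views, AVG(duration_s) AS avg_duration_s
    FROM page_views
    GROUP BY page
    ORDER BY views DESC
"""
rows = conn.execute(query).fetchall()
conn.close()
```

Here `rows` holds one tuple per page with its view count and average duration, most viewed page first.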

5. Sqoop

Sqoop is a tool that connects Hadoop with various relational databases in order to transfer data. It can be used effectively to move structured data into Hadoop or Hive.

6. PolyBase

PolyBase works on top of SQL Server 2012 Parallel Data Warehouse (PDW) and is used to access data stored in PDW. PDW is a data warehousing appliance built for processing any volume of relational data; its integration with Hadoop lets us access non-relational data as well.

Data transformation

1. Informatica — PowerCenter

Informatica is a leader in enterprise cloud data management, with more than 500 global partners and more than 1 trillion transactions per month. It is a software development company that was founded in 1993 and is headquartered in California, United States. It has a revenue of $1.05 billion and a total headcount of around 4,000.

PowerCenter is the data integration product developed by Informatica. It supports the data integration lifecycle and delivers critical data and value to the business. PowerCenter also handles huge volumes of data and integrates data of any type from any source.

2. IBM — Infosphere Information Server

IBM is a multinational technology company founded in 1911 and headquartered in New York, U.S., with offices in more than 170 countries. It had a revenue of $79.91 billion as of 2016 and employs about 380,000 people.

InfoSphere Information Server is an IBM product that was developed in 2008. It is a leading data integration platform that helps businesses understand their data and deliver critical value. It is designed mainly for Big Data companies and large-scale enterprises.

3. Oracle Data Integrator

Oracle is an American multinational company that was founded in 1977 and is headquartered in California. It had a revenue of $37.72 billion as of 2017 and a total headcount of 138,000.

Oracle Data Integrator (ODI) is a graphical environment for building and managing data integration. It suits large organizations that have frequent migration requirements. It is a comprehensive data integration platform that supports high-volume data and SOA-enabled data services.

Key Features:

- Oracle Data Integrator is a commercially licensed ETL tool.
- Its redesigned flow-based interface improves the user experience.
- It supports a declarative design approach for the data transformation and integration process.
- Development and maintenance are faster and simpler.

4. Ab Initio

Ab Initio is a private American enterprise software company based in Massachusetts, USA, with offices in the UK, Japan, France, Poland, Germany, Singapore and Australia. It specialises in application integration and high-volume data processing.

It offers six data processing products: Co>Operating System, The Component Library, Graphical Development Environment, Enterprise Meta>Environment, Data Profiler, and Conduct>It. The Ab Initio Co>Operating System is a GUI-based ETL tool with a drag-and-drop feature.

Key Features:

- Ab Initio is commercially licensed and is one of the costliest tools on the market.
- The basic features of Ab Initio are easy to learn.
- The Ab Initio Co>Operating System provides a general engine for data processing and for communication between the rest of the tools.
- Ab Initio products are provided on a user-friendly platform for parallel data processing applications.

5. CloverETL

CloverETL comes from Javlin, a company with offices in the USA, Germany and the UK that provides services such as data processing and data integration.

CloverETL is a high-performance data transformation platform and a robust data integration platform. It can process huge volumes of data and transfer them to various destinations. It consists of three packages: CloverETL Engine, CloverETL Designer, and CloverETL Server.

Key Features:

- CloverETL is commercial ETL software.
- It has a Java-based framework.
- It is easy to install and has a simple user interface.
- It combines business data from various sources into a single format.
- It supports Windows, Linux, Solaris, AIX and OS X platforms.
- It is used for data transformation, data migration, data warehousing and data cleansing.
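The ETL tools in this section all follow the same basic pattern of extracting data from several sources, transforming it into one format, and loading it into a target. As a rough sketch of that pattern (not the API of CloverETL or any other product), the Python below combines a CSV source and a JSON source into a single cleaned layout. The source contents, field names and function names are all hypothetical.

```python
import csv
import io
import json

# Two hypothetical sources with different formats and field names.
CSV_SOURCE = "customer_id,full_name,country\n1, Alice Smith ,us\n2,Bob Jones,DE\n"
JSON_SOURCE = json.dumps([
    {"id": 3, "name": "Carla Diaz", "country_code": "es"},
    {"id": 2, "name": "Bob Jones", "country_code": "de"},
])


def extract():
    """Read raw records from both sources."""
    csv_rows = list(csv.DictReader(io.StringIO(CSV_SOURCE)))
    json_rows = json.loads(JSON_SOURCE)
    return csv_rows, json_rows


def transform(csv_rows, json_rows):
    """Map both layouts onto one schema, clean values, and drop duplicate ids."""
    unified = {}
    for row in csv_rows:
        unified[int(row["customer_id"])] = {
            "id": int(row["customer_id"]),
            "name": row["full_name"].strip(),
            "country": row["country"].strip().upper(),
        }
    for row in json_rows:
        # setdefault keeps the CSV version when the same id appears twice.
        unified.setdefault(int(row["id"]), {
            "id": int(row["id"]),
            "name": row["name"].strip(),
            "country": row["country_code"].strip().upper(),
        })
    return [unified[key] for key in sorted(unified)]


def load(records):
    """Write the unified records to a CSV string (standing in for a target system)."""
    out = io.StringIO()
    writer = csv.DictWriter(out, fieldnames=["id", "name", "country"], lineterminator="\n")
    writer.writeheader()
    writer.writerows(records)
    return out.getvalue()


result = load(transform(*extract()))
```

The GUI-based tools described above let you assemble the same three stages visually instead of writing them by hand.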

Modelling Tools

1. Infosys Nia

Infosys Nia is a knowledge-based AI platform, built by Infosys in 2017 to collect and aggregate organisational data from people, processes and legacy systems into a self-learning knowledge base.

It is designed to tackle difficult business tasks such as forecasting revenues, deciding which products to build, understanding customer behaviour and more.

Infosys Nia enables businesses to manage customer inquiries easily, with a secure order-to-cash process and risk awareness delivered in real time.

2. H2O Driverless

H2O is an open-source machine learning platform for businesses and developers.

H2O can be used from the Java, Python and R programming languages. Supporting languages that developers already know makes it easier for them to apply machine learning and predictive analytics.

H2O can also analyze datasets in the cloud and in Apache Hadoop file systems. It is available on Linux, macOS and Microsoft Windows operating systems.

3. Eclipse Deeplearning4j

Eclipse Deeplearning4j is an open-source deep-learning library for the Java Virtual Machine. It can serve as a DIY tool for Java, Scala and Clojure programmers working on Hadoop and other file systems. It also allows developers to configure deep neural networks and is suitable for use in business environments on distributed GPUs and CPUs.

Skymind, the San Francisco company behind the project, offers paid support, training and an enterprise distribution of Deeplearning4j.

4. Torch

Torch is a scientific computing framework, an open-source machine learning library and a scripting language based on the Lua programming language. It provides an array of algorithms for deep learning. Torch is used by the Facebook AI Research group and was previously used by DeepMind, which moved to TensorFlow after being acquired by Google.

5. IBM Watson

IBM is a big player in the field of AI, with its Watson platform housing an array of tools designed for both developers and business users.

Watson is available as a set of open APIs. Its users have access to plenty of sample code and starter kits, and they can build cognitive search engines and virtual agents.

Watson also has a chatbot-building platform aimed at beginners, which requires little machine learning skill. Watson even provides pre-trained content for chatbots to make training a bot much quicker.

Model Deployment

1. MLflow

MLflow is an open source platform for managing the end-to-end machine learning lifecycle. It tackles three primary functions:

- Tracking experiments to record and compare parameters and results (MLflow Tracking).
- Packaging ML code in a reusable, reproducible form in order to share with other data scientists or transfer to production (MLflow Projects).
- Managing and deploying models from a variety of ML libraries to a variety of model serving and inference platforms (MLflow Models).

MLflow is library-agnostic: you can use it with any machine learning library and in any programming language, since all functions are accessible through a REST API and CLI. For convenience, the project also includes a Python API, R API, and Java API.
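The idea behind experiment tracking, recording parameters and results for each run so that runs can be compared later, can be sketched in a few lines of plain Python. This is not MLflow's API: the `Run` and `ExperimentLog` classes are hypothetical, everything is kept in memory, and the accuracy values are made up for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class Run:
    """One experiment run: the parameters used and the metrics observed."""
    name: str
    params: dict = field(default_factory=dict)
    metrics: dict = field(default_factory=dict)


class ExperimentLog:
    """Hypothetical in-memory tracker; a real tool would persist runs to a store."""

    def __init__(self):
        self.runs = []

    def record(self, name, params, metrics):
        """Store a copy of the parameters and metrics of a finished run."""
        run = Run(name, dict(params), dict(metrics))
        self.runs.append(run)
        return run

    def best(self, metric, higher_is_better=True):
        """Return the run with the best value for `metric`, ignoring runs without it."""
        candidates = [run for run in self.runs if metric in run.metrics]
        if not candidates:
            raise ValueError(f"no run recorded metric {metric!r}")
        pick = max if higher_is_better else min
        return pick(candidates, key=lambda run: run.metrics[metric])


# Made-up runs, for illustration only.
log = ExperimentLog()
log.record("baseline", {"max_depth": 3}, {"accuracy": 0.81})
log.record("deeper", {"max_depth": 6}, {"accuracy": 0.86})
log.record("too-deep", {"max_depth": 12}, {"accuracy": 0.84})
best_run = log.best("accuracy")
```

Comparing runs this way makes it clear which parameter setting produced which result, which is the bookkeeping a tracking component takes over.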

2. Kubeflow

The Kubeflow project is dedicated to making deployments of machine learning (ML) workflows on Kubernetes simple, portable and scalable. The goal is not to recreate other services, but to provide a straightforward way to deploy best-of-breed open-source systems for ML to diverse infrastructures.

The basic workflow is:

- Download the Kubeflow scripts and configuration files.
- Customize the configuration.
- Run the scripts to deploy your containers to your chosen environment.

You adapt the configuration to choose the platforms and services that you want to use for each stage of the ML workflow: data preparation, model training, prediction serving, and service management.

3. H2O AI

H2O is a fully open-source, distributed in-memory machine learning platform with linear scalability. H2O supports the most widely used statistical and machine learning algorithms, including gradient boosted machines, generalized linear models, deep learning and more. H2O also has industry-leading AutoML functionality that automatically runs through all the algorithms and their hyperparameters to produce a leaderboard of the best models. The H2O platform is used by over 14,000 organizations globally and is extremely popular in both the R and Python communities.
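The leaderboard idea can be illustrated with a deliberately tiny Python sketch: fit several candidate models on the same training data, score each on held-out data, and rank them. This does not use H2O. The three candidate models, the error metric and the data are assumptions made for illustration, and a real AutoML system also searches over hyperparameters.

```python
from statistics import mean, median

# Made-up data that roughly follows y = 2x + 1, for illustration only.
train = [(1, 3.1), (2, 4.9), (3, 7.2), (4, 9.0), (5, 10.8)]
holdout = [(6, 13.1), (7, 15.0)]


def fit_mean(points):
    """Baseline that always predicts the mean of the training targets."""
    avg = mean(y for _, y in points)
    return lambda x: avg


def fit_median(points):
    """Baseline that always predicts the median of the training targets."""
    mid = median(y for _, y in points)
    return lambda x: mid


def fit_line(points):
    """Ordinary least squares for a single feature."""
    x_bar = mean(x for x, _ in points)
    y_bar = mean(y for _, y in points)
    covariance = sum((x - x_bar) * (y - y_bar) for x, y in points)
    variance = sum((x - x_bar) ** 2 for x, _ in points)
    slope = covariance / variance
    return lambda x: y_bar + slope * (x - x_bar)


def mean_absolute_error(model, points):
    return mean(abs(model(x) - y) for x, y in points)


def leaderboard(candidates, train_points, test_points):
    """Fit every candidate and rank them by holdout error, best first."""
    scores = [
        (name, mean_absolute_error(fit(train_points), test_points))
        for name, fit in candidates.items()
    ]
    return sorted(scores, key=lambda item: item[1])


board = leaderboard(
    {"mean": fit_mean, "median": fit_median, "line": fit_line}, train, holdout
)
```

On this data the fitted line ranks first, because the two constant baselines cannot follow the upward trend.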

4. Domino Data Lab

Domino provides an open, unified data science platform to build, validate, deliver, and monitor models at scale. This accelerates research, sparks collaboration, increases iteration speed, and removes deployment friction to deliver impactful models.

5. Dataiku

Dataiku DSS is a collaborative data science software platform that teams of data scientists, data analysts and engineers use to explore, prototype, build and deliver their own data products more efficiently. Dataiku’s single, collaborative platform powers both self-service analytics and the operationalization of machine learning models in production. In simple terms, Data Science Studio (DSS) is a software platform that brings together all the steps and big data tools necessary to get from raw data to production-ready applications. It shortens the load-prepare-test-deploy cycles required to create data-driven applications. Thanks to its visual and interactive workspace, it is accessible to both data scientists and business analysts.

Data Visualisation

1. Tableau

Tableau is one of the major tools in this category. It is known for the drag-and-drop features of its user interface. Some basic versions of this data visualization tool are free. It supports data in multiple formats, such as XLS, CSV and XML, as well as database connections. For more information, see the official Tableau website.

2. QlikView

QlikView is another powerful BI tool for decision making. It is easy to configure and deploy, and it is scalable with few constraints on RAM. Its best-loved feature is visual drill-down. To read more about QlikView, see the official QlikView website, where you can find installation guides and other details.

3. Qlik Sense

Qlik Sense is another powerful tool from the Qlik family. It is popular because of user-friendly features like drag and drop, and it is designed so that even a business user can work with it. Its cloud-based infrastructure makes it a strong option among data visualization tools. You can download the free desktop version of Qlik Sense and use it.

4. SAS Visual Analytics

SAS VA is not only a data visualization tool; it is also capable of predictive modeling and forecasting. It is easy to operate thanks to its drag-and-drop features, and there is strong community support for SAS Visual Analytics. For details, see the SAS Visual Analytics website.

5. D3.js

D3 is an open-source JavaScript library that you can use to bind arbitrary data to the Document Object Model. Because it is open source, you can find plenty of tutorials on D3.js. More information is available on the D3.js home page.

Conclusion

The success of any modern data analytics strategy depends on full access to all data. Solutions like those above simplify and accelerate decision making over massive amounts of data from any data source. You can also execute any machine learning models you’ve developed to deepen your knowledge of and engagement with your customers, or to support other important initiatives.

Whether you are a scientist, a developer or, simply, a data enthusiast, these tools provide features that can cover your every need.
