By Susan Li, Sr. Data Scientist

Doc2vec is an NLP tool for representing documents as vectors and is a generalization of the word2vec method.

To understand doc2vec, it helps to understand the word2vec approach first. However, the complete mathematical details are outside the scope of this article. If you are new to word2vec and doc2vec, the following resources can help you get started:

Using the same data set as in Multi-Class Text Classification with Scikit-Learn, in this article we’ll classify complaint narratives by product using doc2vec techniques in Gensim. Let’s get started!

The Data

The goal is to classify consumer finance complaints into 12 pre-defined classes. The data can be downloaded from data.gov.

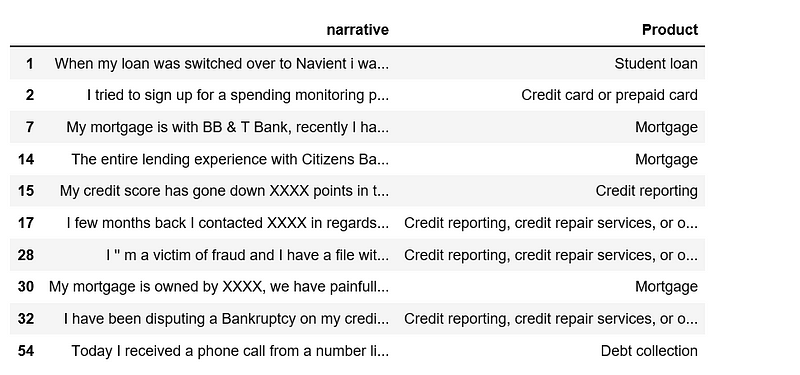

Figure 1


After removing null values in the narrative column, we need to re-index the data frame.

(318718, 2)

63420212

The data frame has 318,718 rows and 2 columns, and the narratives contain over 63 million words in total, so it is a relatively large data set.
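The cleaning steps described above might look roughly like the following sketch. The tiny in-memory frame and the column names `Product` and `Consumer complaint narrative` are assumptions standing in for the downloaded file, and the word count is a simple whitespace split rather than necessarily the exact count used in the article.

```python
import pandas as pd

# Toy stand-in for the complaints file; the real data has many more columns.
df = pd.DataFrame({
    "Product": ["Debt collection", "Mortgage", "Credit reporting", "Student loan"],
    "Consumer complaint narrative": [
        "They keep calling me about a debt I do not owe",
        None,  # complaints without a narrative are dropped below
        "My credit report shows an account that is not mine",
        None,
    ],
})

# Keep the two columns of interest and give the text column a shorter name.
df = df[["Product", "Consumer complaint narrative"]].rename(
    columns={"Consumer complaint narrative": "narrative"}
)

# Drop rows with a missing narrative, then rebuild a contiguous 0..n-1 index.
df = df.dropna(subset=["narrative"]).reset_index(drop=True)

# Rough word count: split each narrative on whitespace and sum the lengths.
total_words = int(df["narrative"].str.split().str.len().sum())

print(df.shape)
print(total_words)
```

On the real data, the same two `print` calls would correspond to the shape and word count reported above.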

Exploring

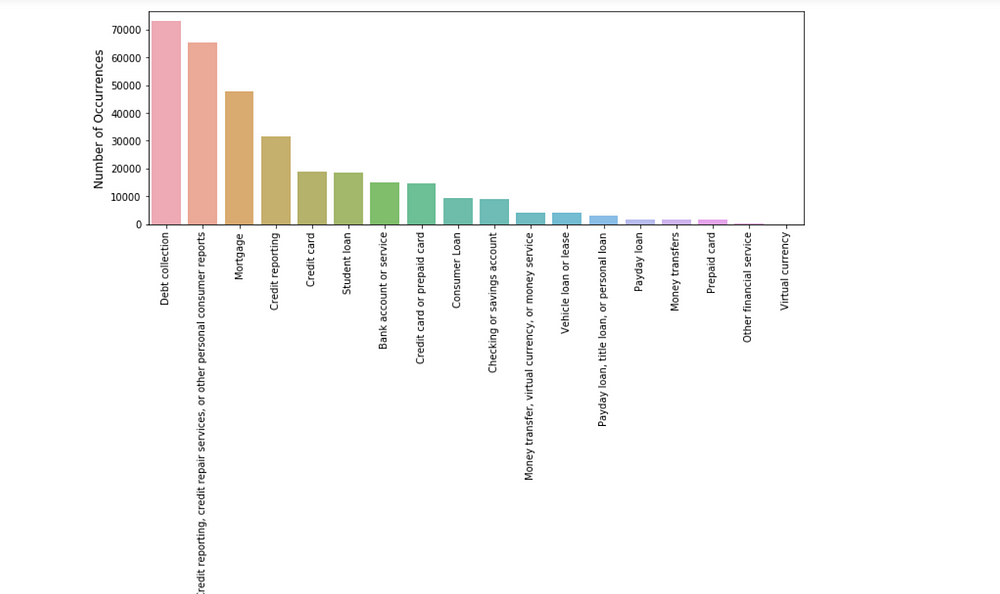

Figure 2


The classes are imbalanced. Even so, a naive classifier that always predicts Debt collection would achieve only a little over 20% accuracy.
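To make the baseline concrete, here is a minimal sketch of how the accuracy of an always-predict-the-majority classifier can be computed. The label list is hypothetical; on the real data it would be the product column.

```python
from collections import Counter


def majority_baseline_accuracy(labels):
    """Accuracy obtained by always predicting the most frequent label."""
    counts = Counter(labels)
    _, top_count = counts.most_common(1)[0]
    return top_count / len(labels)


# Hypothetical labels: the majority class covers 2 of 10 complaints.
labels = ["Debt collection"] * 2 + [
    "Mortgage", "Credit reporting", "Student loan", "Credit card",
    "Bank account", "Payday loan", "Money transfers", "Consumer loan",
]

baseline = majority_baseline_accuracy(labels)
print(baseline)
```

Any trained classifier should be judged against this number rather than against zero.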

Let’s have a look at a few examples of complaint narratives and their associated products.

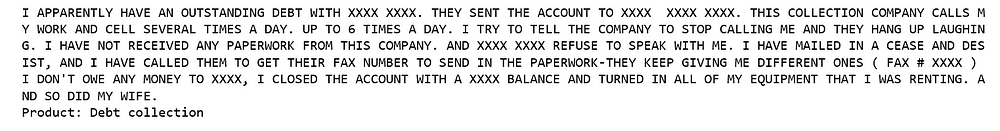

Figure 3


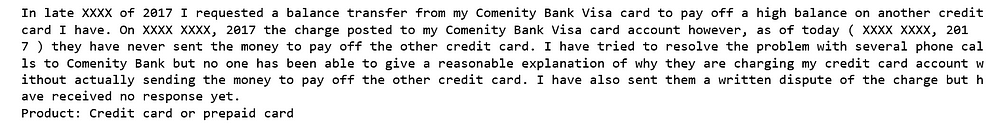

Figure 4


Text Preprocessing

Below we define a function that converts text to lower case and strips punctuation and symbols from words.
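A cleaning function along these lines could be sketched as follows. The function name `clean_text` and the exact rules (dropping URLs, keeping only letters and digits) are assumptions for illustration, not necessarily the article's own implementation.

```python
import re


def clean_text(text):
    """Lower-case the text and strip URLs, punctuation, and symbols."""
    text = text.lower()
    text = re.sub(r"http\S+", " ", text)       # drop URLs (assumed step)
    text = re.sub(r"[^a-z0-9\s]", " ", text)   # punctuation and symbols become spaces
    text = re.sub(r"\s+", " ", text).strip()   # collapse repeated whitespace
    return text


print(clean_text("I DISPUTED the charge!!! See http://example.com/case?id=1 -- no reply."))
```

Note that this simple rule also splits contractions, so "don't" becomes "don t"; whether that matters depends on the tokenizer and stop-word list used afterwards.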

The next steps are a 70/30 train/test split, stop-word removal, and tokenization with the NLTK tokenizer. For our first try, we tag every complaint narrative with its product.

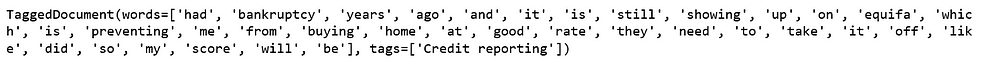

This is what a training entry looks like: an example complaint narrative tagged with “Credit reporting”.

Figure 5


Set-up Doc2Vec Training & Evaluation Models

First, we instantiate a doc2vec model — Distributed Bag of Words (DBOW). In the word2vec architecture, the two algorithm names are “continuous bag of words” (CBOW) and “skip-gram” (SG); in the doc2vec architecture, the corresponding algorithms are “distributed memory” (DM) and “distributed bag of words” (DBOW).

Distributed Bag of Words (DBOW)

DBOW is the doc2vec model analogous to the skip-gram model in word2vec. The paragraph vectors are obtained by training a neural network to predict a probability distribution of words in a paragraph, given a randomly sampled word from the paragraph. We will use the following parameters:

dm=0: distributed bag of words (PV-DBOW) is used; with dm=1, distributed memory (PV-DM) is used.

vector_size=300: 300-dimensional feature vectors.

min_count=2: ignores all words with a total frequency lower than this.

negative=5: specifies how many “noise words” should be drawn for negative sampling.

hs=0: hierarchical softmax is off, so with a non-zero negative, negative sampling is used.

sample=0: the threshold for randomly down-sampling higher-frequency words; 0 disables down-sampling.

workers=cores: use this many worker threads to train the model (faster training on multicore machines).

Building a Vocabulary

Figure 6


Training a doc2vec model is rather straightforward in Gensim: we initialize the model and train it for 30 epochs.

Figure 7


Building the Final Vector Feature for the Classifier

Train the Logistic Regression Classifier.

Testing accuracy 0.6683609437751004

Testing F1 score: 0.651646431211616

Distributed Memory (DM)

Distributed Memory (DM) acts as a memory that remembers what is missing from the current context, or as the topic of the paragraph. While the word vectors represent the concept of a word, the document vector is intended to represent the concept of a document. We again instantiate a Doc2Vec model, this time with 300-dimensional vectors, iterating over the training corpus 30 times.

Figure 8


Figure 9


Train the Logistic Regression Classifier

Testing accuracy 0.47498326639892907

Testing F1 score: 0.4445833078167434

Model Pairing

According to the Gensim doc2vec tutorial on the IMDB sentiment data set, combining a paragraph vector from Distributed Bag of Words (DBOW) with one from Distributed Memory (DM) improves performance. We will follow that approach and pair the models together for evaluation.

First, we delete temporary training data to free up RAM.

Concatenate two models.

Building feature vectors.

Train the Logistic Regression classifier.

Testing accuracy 0.6778572623828648

Testing F1 score: 0.664561533967402

Accuracy improved by about 1 percentage point over DBOW alone (from 0.668 to 0.678).

For this article, I used only the training set to train doc2vec. In Gensim’s tutorial, however, the whole data set was used for training. I tried that approach as well: training doc2vec on the whole data set for our consumer complaint classification, I was able to achieve 70% accuracy. You can find that notebook here; it takes a slightly different approach.

The Jupyter notebook for the above analysis can be found on GitHub. I look forward to hearing any questions.

Bio: Susan Li is changing the world, one article at a time. She is a Sr. Data Scientist, located in Toronto, Canada.

Original. Reposted with permission.
