Probabilistic Programming versus Machine Learning

In the past ten years we have seen an explosion in machine learning applications, which have been particularly successful in search, e-commerce, advertising, social media and other verticals. These applications focus on predictive accuracy and often involve large amounts of data, sometimes in the region of terabytes. That demand drove a lot of innovation at tech giants such as Netflix, Amazon, Facebook and Google.

Fundamentally, though, these models are often ‘black boxes’ that observers cannot easily understand. In applications such as churn modelling or targeted advertising, it doesn’t matter so much ‘how’ the model works, only that it does work. Another limitation of ‘industrial machine learning’ is that it requires collecting lots and lots of data: you need millions of active users on your service to justify building an advertising model, for example.

These limitations make it difficult or impossible to build models that work with only a small amount of data and leverage domain-specific expertise. They also adversely affect models in dangerous or legally complicated contexts such as health or insurance, where predictions must come with confidence estimates that allow one to assess risk. For example, it’s important to know the uncertainty when predicting the likelihood that a patient has a disease, or when assessing how exposed a portfolio is to a loss in, say, banking or insurance.

Going beyond these limitations opens the door to new kinds of products and analyses, and that is the subject of this article. The solution is a statistical technique called Bayesian inference. This technique begins with our stating prior beliefs about the system being modelled, allowing us to encode expert opinion and domain-specific knowledge into our system. These beliefs are combined with data to constrain the details of the model. Then, when used to make a prediction, the model doesn’t give one answer, but rather a distribution of likely answers, allowing us to assess risks.
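To make the prior-plus-data idea concrete, here is a minimal sketch in Python using only the standard library. It assumes the simplest possible case, a Beta prior on an unknown rate updated with binomial data, where the posterior has a closed form. The function names and all the numbers are invented for illustration.

```python
import random

def beta_binomial_posterior(prior_a, prior_b, successes, trials):
    """Conjugate update: a Beta(a, b) prior plus binomial data gives a Beta posterior."""
    return prior_a + successes, prior_b + (trials - successes)

def credible_interval(a, b, level=0.9, draws=20000, seed=0):
    """Approximate a central credible interval by sampling from Beta(a, b)."""
    rng = random.Random(seed)
    samples = sorted(rng.betavariate(a, b) for _ in range(draws))
    lower = samples[int((1 - level) / 2 * draws)]
    upper = samples[int((1 + level) / 2 * draws) - 1]
    return lower, upper

# Hypothetical prior belief: the rate is probably around 10% (Beta(2, 18) has mean 0.10).
prior_a, prior_b = 2.0, 18.0

# Hypothetical small data set: 3 events observed in 10 trials.
post_a, post_b = beta_binomial_posterior(prior_a, prior_b, successes=3, trials=10)

posterior_mean = post_a / (post_a + post_b)
low, high = credible_interval(post_a, post_b)

print(f"posterior mean: {posterior_mean:.3f}")
print(f"90% credible interval: ({low:.3f}, {high:.3f})")
```

The answer is a distribution rather than a single number: the posterior mean sits between the prior guess (0.10) and the raw data rate (0.30), and the interval tells us how much to trust it.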

Bayesian inference has long been a method of choice in academic science for just those reasons: it natively incorporates the idea of confidence, it performs well with sparse data, and the model and results are highly interpretable and easy to understand. It is simple to use what you know about the world along with a relatively small or messy data set to predict what the world might look like in the future.

Until recently, the practical engineering challenges of implementing these systems were prohibitive and required a large amount of specialized knowledge. A new programming paradigm, probabilistic programming, has since emerged. Probabilistic programming hides the complexity of Bayesian inference, making these advanced techniques accessible to a broad audience of programmers and data analysts.

The fundamental ideas of probabilities and distributions of results are the basic building blocks of models in this paradigm.
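As a rough illustration of the paradigm, the sketch below separates the two halves: a model written as an ordinary function that draws from distributions, and a generic inference routine that knows nothing about the model. This is a toy written in plain Python, not the API of any real probabilistic programming library, and it uses rejection sampling, which real systems replace with far more efficient algorithms. The names `model` and `infer` and the numbers are assumptions made for the example.

```python
import random

def model(rng):
    """Generative story: draw an unknown conversion rate, then simulate 20 visitors."""
    rate = rng.betavariate(1, 1)  # uniform prior over the rate
    conversions = sum(rng.random() < rate for _ in range(20))
    return rate, conversions

def infer(model, observed, draws=50000, seed=1):
    """Generic rejection sampler: keep the latent values whose simulated data match."""
    rng = random.Random(seed)
    kept = []
    for _ in range(draws):
        latent, simulated = model(rng)
        if simulated == observed:
            kept.append(latent)
    return kept

# Hypothetical observation: 4 conversions out of 20 visitors.
posterior_samples = infer(model, observed=4)
posterior_mean = sum(posterior_samples) / len(posterior_samples)

print(f"kept {len(posterior_samples)} samples")
print(f"posterior mean rate: {posterior_mean:.3f}")
```

The point of the design is that `infer` can be reused unchanged with a different `model`. That separation is what lets a probabilistic programming system hide the inference machinery from the person writing the model.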

One of the most exciting and impactful innovations in modern machine learning has been deep learning for image analysis, which has enabled previously impossible performance. Probabilistic programming is not a new capability, but in the past it was often too specialised or required specialised languages. Now that it is more accessible, it is likely to be as impactful as deep learning was.

Probabilistic programming allows you to combine your domain knowledge with your observed data. It is powerful for three reasons: first, it lets you incorporate domain knowledge, which most machine learning frameworks don’t do; second, it works well with small or noisy datasets; and third, it is interpretable.

What are the applications?

Simply put, Bayesian statistics is useful in any application area where you have lots of heterogeneous or noisy data, where you need to incorporate domain knowledge, or where you need a clear understanding of your uncertainty. From discussions with experts, some of the areas that have seen early adoption are e-commerce, insurance, finance and healthcare.

Source: https://www.psychologyinaction.org/psychology-in-action-1/2012/10/22/bayes-rule-and-bomb-threats

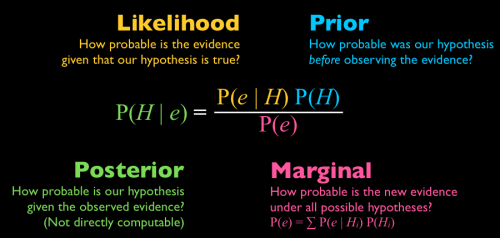

The power of Bayes’ rule stems from the fact that it relates a quantity we can calculate (the likelihood that we would have observed the measured data if the hypothesis were true) to one we can use to answer arbitrary questions (the posterior probability that a hypothesis is true given the data).
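Here is a small worked example of Bayes’ rule in Python, using the disease-testing scenario mentioned earlier. The prevalence, sensitivity and false positive rate are invented for illustration, and the function name is my own.

```python
def posterior_probability(prior, likelihood, false_positive_rate):
    """Bayes' rule for a yes/no hypothesis.

    prior:               P(hypothesis) before seeing the data
    likelihood:          P(data | hypothesis is true)
    false_positive_rate: P(data | hypothesis is false)
    """
    # Total probability of seeing the data under either outcome.
    evidence = likelihood * prior + false_positive_rate * (1 - prior)
    return likelihood * prior / evidence

# Hypothetical numbers: 1% of patients have the disease, the test detects it
# 95% of the time, and it wrongly flags 5% of healthy patients.
p_disease_given_positive = posterior_probability(
    prior=0.01, likelihood=0.95, false_positive_rate=0.05
)

print(f"P(disease | positive test) = {p_disease_given_positive:.3f}")
```

With these made-up numbers, the posterior comes out at roughly 0.16: even after a positive result from a fairly accurate test, the disease is still unlikely, because the prior was so low. The likelihood is the quantity we can calculate, and the posterior is the one we actually care about.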

Hierarchical Models

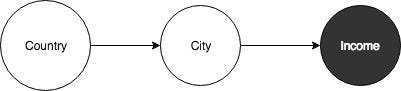

The Bayesian secret sauce is hierarchical models. We can use them to model complex systems with independencies. In such a model, we observe the behaviour of individual events, but we incorporate the belief that these events can be grouped together in a hierarchy.

This could be, for example, a real estate pricing model or a model for pricing risk in commercial insurance. In both models you have apartments or shops in neighbourhoods, and neighbourhoods are in boroughs. Not all shops in a neighbourhood are alike, but they are on average pretty similar. The average shop in Peckham is different to the average shop in Deptford and very different to the average shop in Putney.

This is the hierarchical model. We can learn a lot by modelling this way: we can learn about Deptford not only from Deptford but also from Peckham and Putney. This is very useful when the data is sparse at a particular level of the hierarchy, for example when there are no claims in a particular neighbourhood in a particular time period.
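The borrowing of information between neighbourhoods can be sketched in a few lines of Python. This is a deliberately simplified stand-in for a full hierarchical model: it assumes a normal model with known within-neighbourhood and between-neighbourhood variances, and it plugs in the average of the neighbourhood means as the borough-level mean rather than inferring it. The claim figures, the variances and the function name are all invented for illustration.

```python
from statistics import mean

def partial_pool(group_values, within_var, between_var):
    """Shrink each group's sample mean toward the overall mean.

    Groups with little data are pulled strongly toward the overall mean;
    groups with no data at all simply inherit it.
    """
    non_empty = {g: v for g, v in group_values.items() if v}
    overall_mean = mean(mean(v) for v in non_empty.values())

    estimates = {}
    for group, values in group_values.items():
        n = len(values)
        if n == 0:
            estimates[group] = overall_mean
            continue
        # Weight on the group's own data grows with the number of observations.
        weight = between_var / (between_var + within_var / n)
        estimates[group] = weight * mean(values) + (1 - weight) * overall_mean
    return estimates

# Hypothetical claim amounts per neighbourhood; Deptford has no claims this period.
claims = {
    "Peckham": [12.0, 15.0, 11.0, 14.0],
    "Deptford": [],
    "Putney": [30.0, 28.0],
}

estimates = partial_pool(claims, within_var=16.0, between_var=64.0)
for neighbourhood, estimate in estimates.items():
    print(f"{neighbourhood}: {estimate:.2f}")
```

Deptford still gets an estimate even though it has no data of its own, and Putney, with only two claims, is pulled further toward the overall mean than Peckham, which has four.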

Figure: Illustrating the hierarchy for an ‘income prediction’ model.