At some point, I ended up with a model like this one:

Y~=W⋅Y\tilde{Y} = W \cdot Y Y~=W⋅Y

where W is a matrix that can be defined as (for example):

wij=1w_{ij} = 1 wij=1

if j is within the K nearest neighbours of i, 0 otherwise.

Here is the receipe I have used to create the W matrix using numpy, scipy and matplotlib for visualization.

If you are already familiar with scipy cKDTree and sparse matrix, you can directly go to the last section.

Sample data

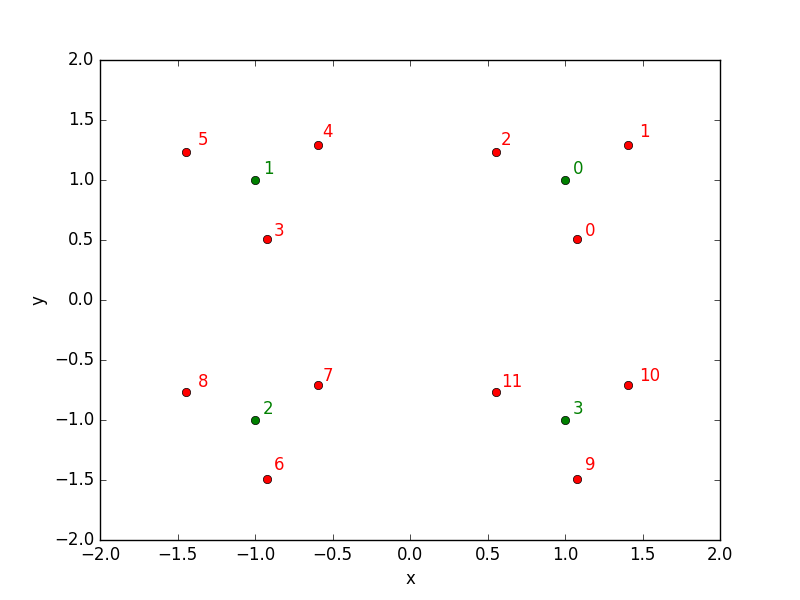

I have created a dummy dataset for the purpose of the demonstration, with sizes N=12 train samples and M=3 test samples:

1 | |

Let’s have a look at those points repartition: red points are the train data while the green points belon to the test data.

Find neighbours

Finding neighbours with the modern tools is quite straightforward. I choose here to use scipy because I will use other tools from this package later on in this post, but sklearn or other packages can also do the job. With scipy, first create a cKDTree with the train dataset:

1 | |

tree that can be queried in a second time:

1 | |

Here we asked for the three nearest neighbours in the train sample of the elements in the test sample. By default, tree.query returns both the neighbours indices and the distances involved. We’ll keep both of them.

1 | |

Let’s concentrate on the indices array.

1 | |

It is a numpy array with M (number of test samples) rows and K (number of neighbours) columns. How to transform this to the matrix we are looking for, that with our example should look like:

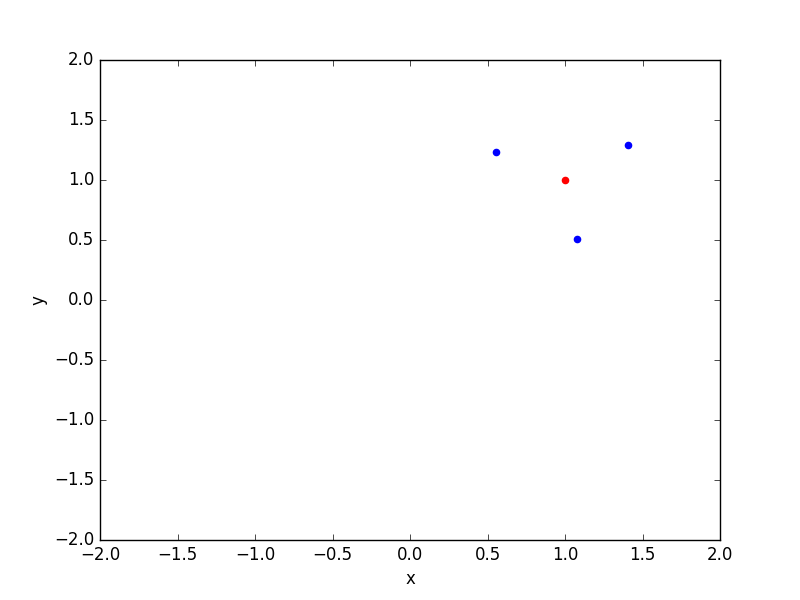

It can be interesting to see the selected neighbours on a plot:

1 | |

Ok so neighbours finding seems to work as expected! Let’s know see how to convert the indices array into a full usable matrix, that for our purpose should look like:

1 | |

because the neighbours of test observation 0 (first row) are the train observations 0, 1 and 2, the neighbours of the test observation 1 (second row) are the train observations 3, 4 and 5, and so on.

Matrix creation

At first, we would like to use numpy indexing to create our matrix like this

1 | |

but you’ll realize it doesn’t work on more than one dimension arrays.

The solution I choose is to use scipy sparse matrices, that can be created from a list of indices. For example, creating the diagonal matrix of size N=4 with sparse matrix can be written as:

1 | |

So scipy takes the first elements of i_index and j_index arrays, i and j, and puts the first element of the values array at position [i, j] in the final matrix. Or in other words, element (0, 0) value is 1, element (1, 1) is also 1… The elements not specified are all null.

If you prefer the array representation, you can look at the result with:

1 | |

where you can see the diagonal matrix.

Let’s try with a second example just to sure everything is clear. Now I want to create the inverse diagonal matrix:

1 | |

This time, the code is:

1 | |

NB: switching from sparse to dense representation is only possible when matrix size in relatively small, otherwise it will creates memory issues (the reason why sparse matrices exists!)

So, how to create this W matrix?

For the W matrix, j_index, ie columns, correspond to the neighbours indices:

1 | |

The row indices, i_index then correspond to the index in the test sample, but repeated K times to match the j_index ordering:

1 | |

That means that for first row (row index 0), there will be ones for columns 0, 1 and 2. For second row (1), there will be ones in columns 3, 4, 5… If you look again at the test/train samples positions (first figure), that’s consistent!

For the values, we can use “1”:

1 | |

or a function depending on distances for example:

1 | |

And at the end, our matrix looks like (with “1” values):

1 | |

Back to our initial problem

Now, we can compute our dot product (either with the sparse or dense version of the matrix):

1 | |

And here we are, problem solved!

Find this article and more on my website: stellasia.github.io