Artificial intelligence (AI) is a powerful and growing field, but some are still hesitant to employ it, seeing it as a black box. However, if you take a second to step outside the box (see what we did there?) when it comes to AI for business operations, the picture, along with the path toward unbiased machine learning and transparent data processes, becomes much clearer.

Our brains tell us to fear the black box of AI, but our brains often act more like black boxes themselves. When a business leader makes a decision based on intuition, people tend not to bat an eye. Yet when a decision is based on AI, people tend to feel less comfortable (even though machine learning algorithms work rather like human intuition).

Consider Malcolm Gladwell’s Blink: The Power of Thinking Without Thinking, a New York Times best-selling book dedicated to the power of making fast, accurate, yet seemingly unexplainable decisions. A notable example is the story of a kouros, an ancient Greek statue that was purchased by the Getty Museum in California in the 1980s.

The Getty had subjected the kouros to 14 months of scientific testing, but when art historian Federico Zeri saw the statue, he immediately knew it was a fake. Another historian felt “intuitive repulsion” when he first saw it. Neither was able to explain his deductive steps, but their hunches were right.

People are fascinated by this kind of decision making, and many books and schools of thought on intuition have emerged over the years. Although this fast yet accurate decision making initially seemed inexplicable, scientists have uncovered the process behind it: subconscious right brain thinking, which (much like AI) depends largely on the data it’s given.

Much like the processes of the human brain, AI is becoming more transparent. The “black box” of AI is exaggerated because of our instinctive fear of the unfamiliar; we glorify seemingly inexplicable aha moments in ourselves but demonize them in machines. We’re quick to blame the machine when we hear stories like Tesla’s self-driving car crash, but these accidents are often due to human error.

The main concern about AI is that there’s undetected bias in the algorithm, but bias comes from the data that the machine is given, and we can see and analyze that data. It’s much easier to minimize the bias in this data than to unravel all the past experiences and potential biases of a human.
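To make "see and analyze that data" a little more concrete, here is a minimal sketch of one such check: comparing how often each group in a training set receives a positive label. Everything in it is an assumption made for illustration (the `positive_rate_by_group` helper, the `group` and `approved` field names, and the made-up rows), and a large gap is only a prompt to look more closely at the data, not proof of bias on its own.

```python
from collections import defaultdict


def positive_rate_by_group(rows, group_key, label_key):
    """Return the share of positive labels for each group in the data."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for row in rows:
        group = row[group_key]
        totals[group] += 1
        if row[label_key]:
            positives[group] += 1
    return {group: positives[group] / totals[group] for group in totals}


# Hypothetical training data, invented purely for this example.
training_rows = [
    {"group": "A", "approved": 1},
    {"group": "A", "approved": 1},
    {"group": "A", "approved": 0},
    {"group": "A", "approved": 1},
    {"group": "B", "approved": 0},
    {"group": "B", "approved": 1},
    {"group": "B", "approved": 0},
    {"group": "B", "approved": 0},
]

rates = positive_rate_by_group(training_rows, "group", "approved")
gap = max(rates.values()) - min(rates.values())

print(rates)
print(f"gap between groups: {gap:.2f}")
```

A check like this can run before any model is trained, which is the point: the data a model learns from is open to inspection in a way that a person's past experiences are not.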

Furthermore, many interfaces nowadays have built-in checkpoints that keep you in the loop by allowing you to assess the validity of each step in model construction.

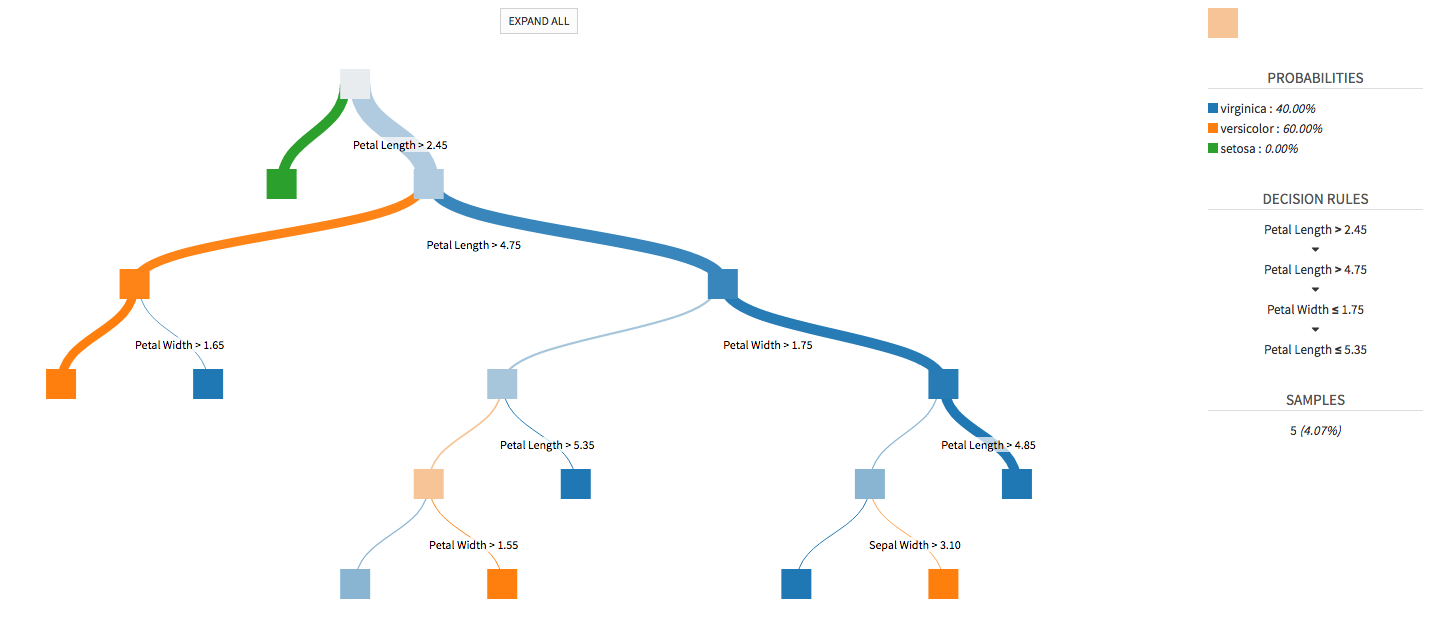

For example, Dataiku’s smart engine automatically suggests certain procedures but lets you override any decisions. The system provides clear visual analyses and metrics to help you understand the algorithm’s reasoning. Machine learning visualizations can help you understand models even further.

*A random forest machine learning model as depicted in Dataiku*

Other strides are also being made to better understand AI: neuroscientist Naftali Tishby has developed an information bottleneck theory that cracks open the black box of deep learning, Google tells you why you’re seeing certain ads (and lets you adjust the settings), and companies like Nvidia are making their self-driving car technology more transparent.

So it’s not just about being more transparent inside the business by using platforms that allow you to see exactly what data is being used where, how it’s being manipulated, etc., but also about external communication and transparency.

AI has advanced by leaps and bounds in the last few years (and so have the efforts aimed at understanding it). With powerful applications in so many industries, we should keep an eye on AI (“Constant vigilance!” as Mad-Eye Moody would say) while leveraging it operationally instead of villainizing it.

If you’re interested in enterprise AI but wary of the black box, see how using a data science platform can mitigate bias while speeding up data-driven solutions.