It has been a very productive year for NLP and ML research. Both areas continued to grow, with conferences reaching record numbers of publications. In this post I will break these numbers down a bit more, by individual authors and organisations. The statistics cover the following venues: ACL, EMNLP, NAACL, EACL, COLING, TACL, CL, CoNLL, *Sem+SemEval, NIPS, ICML, ICLR. Compared to last year, I’ve now included ICLR, which has grown very rapidly in the last two years and has become a highly competitive conference.

The analysis is done automatically, by crawling publication information from the conference websites and the ACL Anthology. Author names are usually listed in the proceedings and are easy to extract, but the organisation names are trickier and need to be extracted straight from the PDFs. I’ve created a number of rules to map alternative names and misspellings to a single organisation, but let me know if you notice any errors.
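To give a rough idea of what such mapping rules can look like, here is a minimal sketch in Python. It is not the actual code behind this post: the alias table, the function names (`normalise`, `canonical_org`, `count_orgs`) and the example strings are all made up for illustration, and a real rule set would be much larger.

```python
import re
import unicodedata
from collections import Counter

# Hypothetical alias table: normalised variant -> canonical organisation name.
# The entries are illustrative assumptions, not the rules used for this post.
ALIASES = {
    "cmu": "Carnegie Mellon University",
    "carnegie mellon": "Carnegie Mellon University",
    "carnegie mellon university": "Carnegie Mellon University",
    "carnegie melon university": "Carnegie Mellon University",  # misspelling
    "tu darmstadt": "TU Darmstadt",
    "technische universitat darmstadt": "TU Darmstadt",
}


def normalise(raw: str) -> str:
    """Lower-case, strip accents and punctuation, collapse whitespace."""
    text = unicodedata.normalize("NFKD", raw)
    # Drop combining marks so that "ä" becomes "a".
    text = "".join(ch for ch in text if not unicodedata.combining(ch))
    text = re.sub(r"[^a-z0-9 ]+", " ", text.lower())
    return " ".join(text.split())


def canonical_org(raw: str) -> str:
    """Return the canonical name if a rule matches, else the trimmed input."""
    return ALIASES.get(normalise(raw), raw.strip())


def count_orgs(affiliations):
    """Count affiliation strings after mapping them to canonical names."""
    return Counter(canonical_org(a) for a in affiliations)
```

For example, `count_orgs(["CMU", "Carnegie Melon University,", "Technische Universität Darmstadt"])` groups the first two strings under one name. Anything without a matching rule is passed through unchanged, which makes unmapped variants easy to spot in the output.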

Venues

First, let’s look at the different publication venues between 2012 and 2017. NIPS is clearly heading off the charts, with 677 publications this year. Most other venues are also growing rapidly, with 2017 being the biggest year ever for ICML, ICLR, EMNLP, EACL and CoNLL. In contrast, TACL and CL seem to be keeping a constant number of publications per year. NAACL and COLING were notably absent in 2017, but we can look forward to both of them in 2018.

Authors

The most prolific author of 2017 is Iryna Gurevych (TU Darmstadt) with 18 papers. Lawrence Carin (Duke University) has 16 publications, with an impressive 10 papers at NIPS. Following them closely are Yue Zhang (Singapore), Yoshua Bengio (Montreal), and Hinrich Schütze (Munich).

Looking at cumulative statistics from 2012-2017, Chris Dyer (DeepMind) is at the top with an impressive lead, followed by Iryna Gurevych (TU Darmstadt) and Noah A. Smith (Washington). Lawrence Carin (Duke), Zoubin Ghahramani (Cambridge) and Pradeep K. Ravikumar (CMU) are publishing mainly in the general ML venues, while the others are balanced between NLP and ML.

Separating the publications by year shows that Chris Dyer has scaled down the publication count to a more manageable level this year, and Iryna Gurevych is developing an impressive upward trajectory.

First Authors

Now let’s look at first authors, as these are usually the people implementing the code and running the experiments. Ivan Vulić (Cambridge), Ryan Cotterell (Johns Hopkins) and Zeyuan Allen-Zhu (Microsoft Research) have all produced an impressive 6 first-author publications in 2017. They are followed by Henning Wachsmuth (Weimar), Tsendsuren Munkhdalai (Microsoft Maluuba), Jiwei Li (Stanford) and Simon S. Du (CMU).

Organisations

Looking at the publishing patterns of different organisations in 2017, Carnegie Mellon is leading the charge with 126 publications, followed by Google, Microsoft and Stanford. Universities that publish proportionally more in the general ML area compared to NLP include MIT, Columbia, Oxford, Harvard, Toronto, Princeton and Zürich. In contrast, universities and organisations that focus more on the NLP venues include Edinburgh, IBM, Peking, Washington, Johns Hopkins, Pennsylvania, CAS, Darmstadt, and Qatar.
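The "proportionally more ML" comparison can be sketched as a simple ratio per organisation. The snippet below is only an illustration under my own assumptions: it treats NIPS, ICML and ICLR as the general ML venues and everything else in the list as NLP, it takes one (organisation, venue) pair per paper, and the function name `ml_share` is hypothetical.

```python
from collections import defaultdict

# Assumed grouping: these three count as general ML venues,
# all other venues covered in the post count as NLP.
ML_VENUES = {"NIPS", "ICML", "ICLR"}


def ml_share(papers):
    """papers: iterable of (organisation, venue) pairs.

    Returns {organisation: fraction of its papers at ML venues}.
    """
    totals = defaultdict(int)
    ml = defaultdict(int)
    for org, venue in papers:
        totals[org] += 1
        if venue in ML_VENUES:
            ml[org] += 1
    return {org: ml[org] / totals[org] for org in totals}
```

An organisation with a share close to 1 publishes almost entirely at the ML venues, while a share close to 0 indicates a focus on NLP venues.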

Looking at the whole period between 2012 and 2017, CMU is again in the lead, with Microsoft, Google and Stanford close behind.

Looking at the time series, it seems CMU, Stanford, MIT and Berkeley are on an upward trajectory in terms of publications. In contrast, the industry leaders Google, Microsoft and IBM have slightly scaled back their publication numbers.

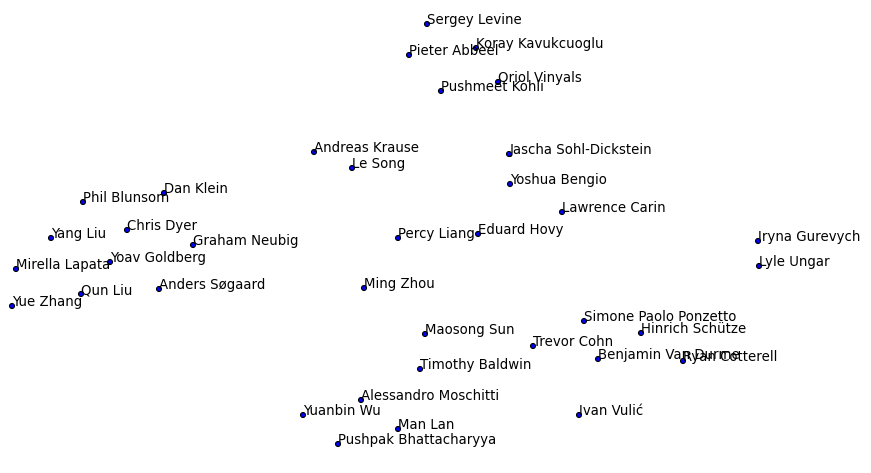

Topic Clustering

Finally, I ran LDA on all the paper texts from authors who had 9 or more publications and visualised the results using t-SNE. In the middle is the topic of general machine learning, neural networks and adversarial learning. The top cluster covers reinforcement learning and different learning policies. The cluster on the left contains NLP applications, language modelling, parsing and machine translation. The cluster at the bottom covers information modelling and feature spaces.

That’s it for 2017. If you notice errors, let me know and I will continue updating this post. Looking forward to all the exciting research coming in 2018!