There have been a lot of advances in image classification, mostly thanks to the convolutional neural network.

It turns out, these same networks can be turned around and applied to image generation as well. If we’ve got a bunch of images, how can we generate more like them?

A recent method, Generative Adversarial Networks, attempts to train an image generator by simultaneously training a discriminator to challenge it to improve.

To gain some intuition, think of a back-and-forth situation between a bank and a money counterfeiter. At the beginning, the fakes are easy to spot. However, as the counterfeiter keeps trying different kinds of techniques, some may get past the check. The counterfeiter then can improve his fakes towards the areas that got past the bank’s security checks.

But the bank doesn’t give up. It also keeps learning how to tell the fakes apart from real money. After a long period of back-and-forth, the competition has led the money counterfeiter to create perfect replicas.

Now, take that same situation, but let the money forger have a spy in the bank that reports back how the bank is telling fakes apart from real money.

Every time the bank comes up with a new strategy to tell fakes apart, such as using ultraviolet light, the counterfeiter knows exactly what to do to bypass it, such as replacing the material with ultraviolet-marked cloth.

The second situation is essentially what a generative adversarial network does. The bank is known as a discriminator network, and in the case of images, is a convolutional neural network that assigns a probability that an image is real and not fake.

The counterfeiter is known as the generative network, and is a special kind of convolutional network that uses transpose convolutions, sometimes known as a deconvolutional network. This generative network takes in some 100 parameters of noise (sometimes known as the code), and outputs an image accordingly.

how images are generated from deconvolutional layers. [source]
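To make the transpose convolution idea concrete, here is a minimal one-dimensional sketch in plain Python. It is only an illustration, not the layer from a real framework: the function name `conv_transpose_1d` and the toy kernel are made up. Each input value stamps a scaled copy of the kernel into the output, so a stride of 2 doubles the length here, which is roughly how a small code can grow into a full image over several layers.

```python
def conv_transpose_1d(x, kernel, stride):
    """Toy transposed convolution (hypothetical helper, for illustration only).

    Every input value adds a scaled copy of the kernel to the output,
    with consecutive copies placed `stride` positions apart.
    """
    out_len = (len(x) - 1) * stride + len(kernel)
    out = [0.0] * out_len
    for i, value in enumerate(x):
        for k, weight in enumerate(kernel):
            out[i * stride + k] += value * weight
    return out


signal = [1.0, 2.0, 3.0]   # a tiny 3-value "feature map"
kernel = [1.0, 0.5]        # made-up kernel weights
upsampled = conv_transpose_1d(signal, kernel, stride=2)
print(upsampled)           # [1.0, 0.5, 2.0, 1.0, 3.0, 1.5]
```

In a real generator the kernels are learned and the operation runs in two dimensions over many channels, but the growth in output size works the same way.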

Which part was the spy? Since the discriminator is just a convolutional neural network, we can backpropagate through it to find the gradients of its output with respect to the input image. This tells us what parts of the image to change in order to yield a greater probability of being real in the eyes of the discriminator network.
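Here is a rough sketch of that spy, using a stand-in discriminator that is just a logistic regression over three "pixels" rather than a convolutional network. The weights, bias, and step size are invented for illustration. For this model the gradient of log D(x) with respect to the input has a closed form, (1 - D(x)) * w, so no framework is needed.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def discriminator(x, w, b):
    """Probability that x is real, under a toy logistic-regression model."""
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)


def grad_log_d_wrt_input(x, w, b):
    """Gradient of log D(x) with respect to each input value."""
    d = discriminator(x, w, b)
    return [(1.0 - d) * wi for wi in w]


w = [0.8, -0.5, 0.3]      # made-up discriminator weights
b = -0.2                  # made-up bias
fake = [0.1, 0.9, 0.2]    # a "generated image" with three pixels

before = discriminator(fake, w, b)
grad = grad_log_d_wrt_input(fake, w, b)
# Nudge each pixel in the direction the discriminator considers more real.
nudged = [xi + 0.5 * gi for xi, gi in zip(fake, grad)]
after = discriminator(nudged, w, b)
print(before, after)      # the second probability is higher
```

With a real convolutional discriminator, backpropagation computes the same kind of gradient automatically.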

All that’s left is to update the weights of our generative network with respect to these gradients, so the generative network outputs images that are more “real” than before.
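As a hedged sketch of that last step, suppose the generator is a single linear layer that maps noise to pixels, and the discriminator is the same kind of toy logistic model. Neither matches a real GAN architecture, and every name and number below is made up. The chain rule passes the image gradient back into the generator's weights.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def generate(gen_weights, noise):
    """Toy linear generator: each pixel is a weighted sum of the noise."""
    return [sum(w * n for w, n in zip(row, noise)) for row in gen_weights]


def discriminate(image, disc_weights):
    """Toy discriminator: probability that the image is real."""
    return sigmoid(sum(w * p for w, p in zip(disc_weights, image)))


def generator_step(gen_weights, noise, disc_weights, learning_rate):
    """One gradient ascent step on log D(G(z)) for the generator weights."""
    image = generate(gen_weights, noise)
    d = discriminate(image, disc_weights)
    # Gradient of log D with respect to each pixel.
    grad_image = [(1.0 - d) * w for w in disc_weights]
    # pixel_i = sum_j W[i][j] * noise[j], so d pixel_i / d W[i][j] = noise[j].
    return [
        [w + learning_rate * g * n for w, n in zip(row, noise)]
        for row, g in zip(gen_weights, grad_image)
    ]


noise = [0.5, -1.0]
gen_weights = [[0.1, 0.2], [0.0, -0.3], [0.4, 0.1]]
disc_weights = [1.0, -2.0, 0.5]

score_before = discriminate(generate(gen_weights, noise), disc_weights)
gen_weights = generator_step(gen_weights, noise, disc_weights, learning_rate=0.1)
score_after = discriminate(generate(gen_weights, noise), disc_weights)
print(score_before, score_after)  # the generator now fools the discriminator more
```

Note that only the generator's weights change in this step; the discriminator is held fixed while the generator learns from it.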

The two networks are now locked in a competition. The discriminative network is constantly trying to find differences between the fake images and real images, and the generative network keeps getting closer and closer to the real deal. In the end, we’ve trained the generative network to produce images that can’t be differentiated from real images.
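One common way to write this competition down is as a pair of losses. The sketch below uses the standard cross-entropy form, with the "non-saturating" generator loss that many implementations prefer; that choice is an assumption on my part, not a requirement of the method, and the function names are made up. Here `d_real` and `d_fake` are the discriminator's probabilities for a real image and a generated one.

```python
import math


def discriminator_loss(d_real, d_fake):
    """Low when real images score near 1 and fakes score near 0."""
    return -(math.log(d_real) + math.log(1.0 - d_fake))


def generator_loss(d_fake):
    """Low when the discriminator scores fakes near 1."""
    return -math.log(d_fake)


# A discriminator that separates real from fake well has a low loss...
confident = discriminator_loss(d_real=0.9, d_fake=0.1)
# ...while one that cannot tell them apart has a higher loss.
confused = discriminator_loss(d_real=0.5, d_fake=0.5)

# The generator is happier the more its fakes are believed.
fooled = generator_loss(d_fake=0.9)
caught = generator_loss(d_fake=0.1)
print(confident < confused, fooled < caught)  # True True
```

Training alternates between lowering the first loss by updating the discriminator and lowering the second by updating the generator.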

How well does this work? I wrote an implementation in Tensorflow, and trained it on various image sets such as CIFAR-10 and 64x64 Imagenet samples.

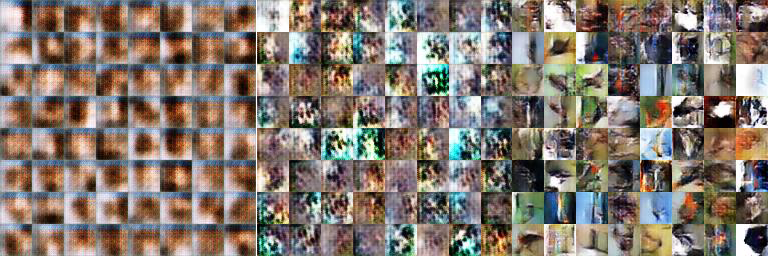

generated CIFAR images on iteration 300, 900, and 5700.

In these samples, 64 images were generated at different iterations of learning. In the beginning, all the samples are roughly the same brownish color. However, even at iteration 200, some hints of variation can be spotted. By iteration 900, different colors have emerged, although the generated images still do not resemble anything. At iteration 5700, the generated images aren’t blurry anymore, but there are still no actual objects in the images.

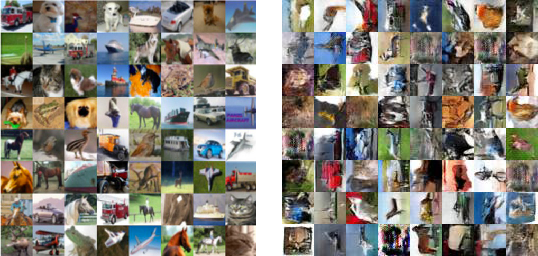

real images on the left, generated at iteration 182,000 on the right

After letting the network run for a couple hours on a GPU, I was glad to see that nothing broke. In fact, the generated images are looking pretty close to the real deal. You can see actual objects now, such as some ducks and cars.

What happens if we scale it up? CIFAR is only 32x32, so let’s try Imagenet. I downloaded a 150,000 image set from the Imagenet 2012 Challenge, and rescaled them all to 64x64.

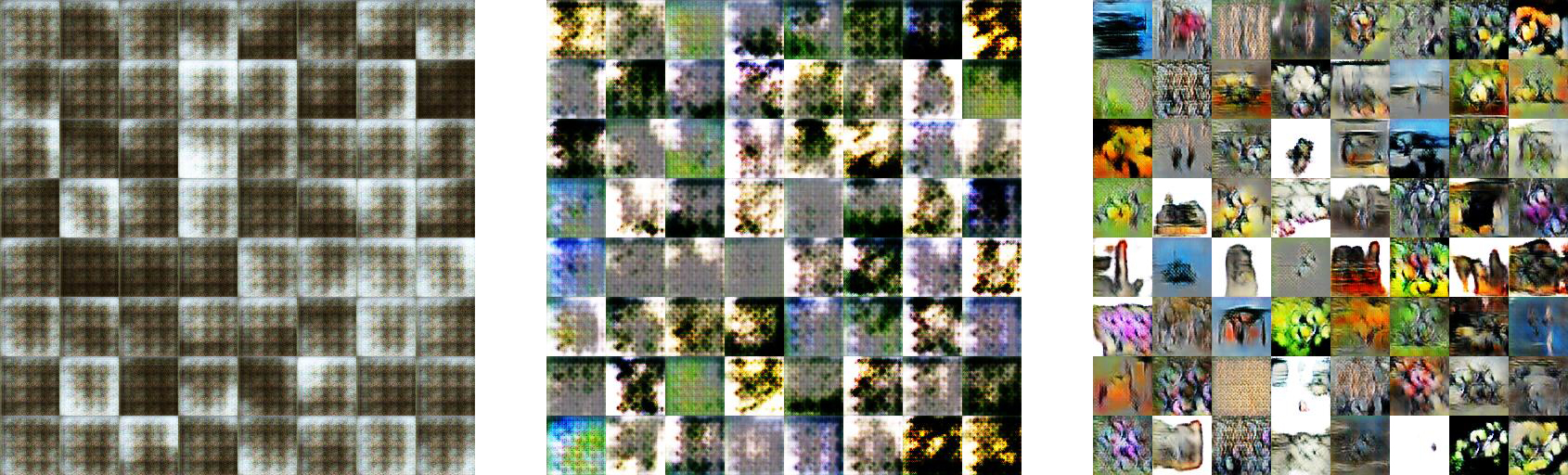

generated Imagenet images on iteration 300, 800, and 5800.

The progression here is basically the same as before. It starts out with some brown blobs, learns to add color and some lighting, and finally learns the look and feel of real images.

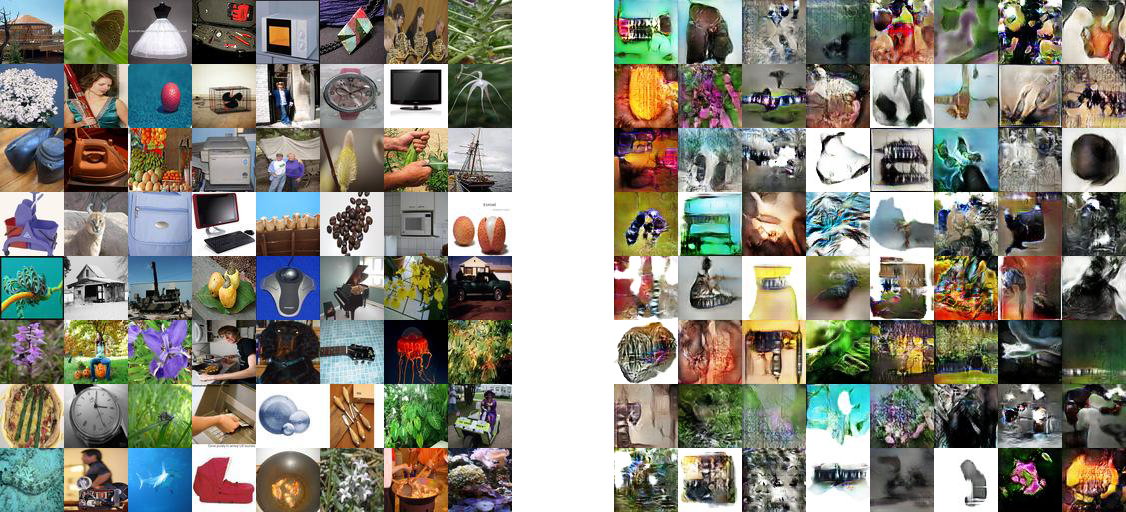

real images on the left, generated at iteration 17,800 on the right

Here are the generated Imagenet samples from the last iteration I trained to. These definitely look a lot better than the earlier iterations. However, especially at this higher resolution, some problems become apparent.

When generating from CIFAR or Imagenet, there is no concept of classes in the generative adversarial network. The network is not learning to make images of cars and ducks; it is learning to make images that look real in general. The problem is, this results in images that may combine features from all sorts of objects, like the outline of a table but the coloring of a frog.

OpenAI’s recent blog post describes two papers that attempt to combat this: Improving GANs, and InfoGAN.

Both of these involve adding multiple objectives to the discriminator’s cost function, which is a good idea. In a simple GAN, the discriminator only has one idea of what an incredibly “real” image looks like. This leads to the generator either collapsing to only produce one image no matter what noise it starts with, or only producing images that have some resemblance to real features but no distinct uniqueness, like our Imagenet generator.

Improving GANs adds in minibatch discrimination, which is a fancy way of making sure features within various samples remain varied.
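As a heavily simplified sketch of the idea: for each sample in a batch, measure how close its features are to those of every other sample, and hand that score to the discriminator as an extra input. The published method first projects the features through a learned tensor; this version skips that and works on raw feature vectors, and the names and numbers are made up.

```python
import math


def l1_distance(a, b):
    return sum(abs(x - y) for x, y in zip(a, b))


def batch_closeness(features):
    """For each sample, sum exp(-L1 distance) to every other sample.

    A high score means the sample looks like the rest of its batch,
    which is a hint that the generator may have collapsed.
    """
    scores = []
    for i, fi in enumerate(features):
        score = sum(
            math.exp(-l1_distance(fi, fj))
            for j, fj in enumerate(features)
            if j != i
        )
        scores.append(score)
    return scores


collapsed = [[0.5, 0.5], [0.5, 0.5], [0.5, 0.5]]   # generator stuck on one output
varied = [[0.0, 1.0], [1.0, 0.0], [3.0, 3.0]]      # healthy variety

collapsed_scores = batch_closeness(collapsed)
varied_scores = batch_closeness(varied)
print(collapsed_scores)  # [2.0, 2.0, 2.0]
print(varied_scores)     # all well below 1
```

Because a collapsed batch produces unusually high scores, the discriminator can learn to reject it, which pushes the generator back toward varied outputs.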

Meanwhile, InfoGAN tries to correlate the initial noise with features in the generated image, so you can do things such as adjust one of the initial noise variables to change the angle of an object.

In a plain GAN, the initial noise variables suffer from the same problem as features in a typical neural network. Although they make sense when put together, it’s hard to tell what each of them does individually.

left and middle: CIFAR-10. right: Imagenet

I wrote a quick generation script that kept all 200 initial noise values constant except for one, which it adjusted linearly from -1 to 1. The most common result is that some color becomes more prominent in a certain region of the image.

Unfortunately, this means the generator network has not learned what it means to represent an object. All it’s doing is creating an image that has features that might be present in a photograph, such as distinct color regions and shadows.
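The noise sweep described above can be sketched in a few lines. This is not the actual script: the helper name `sweep_noise`, the uniform sampling range, the seed, and the choice of which value to vary are all assumptions for illustration. Each code in the result would be fed to the trained generator to produce one image of the sweep.

```python
import random


def sweep_noise(base_noise, index, steps):
    """Copy base_noise `steps` times, moving one entry linearly from -1 to 1."""
    codes = []
    for s in range(steps):
        value = -1.0 + 2.0 * s / (steps - 1)
        code = list(base_noise)   # everything else stays fixed
        code[index] = value
        codes.append(code)
    return codes


rng = random.Random(0)                                   # fixed seed, arbitrary
base = [rng.uniform(-1.0, 1.0) for _ in range(200)]      # 200 noise values
codes = sweep_noise(base, index=3, steps=5)

print([code[3] for code in codes])   # [-1.0, -0.5, 0.0, 0.5, 1.0]
```

Placing the generated images side by side then shows what, if anything, that single noise value controls.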

Adding some secondary objectives, such as correlating initial noise and features present, could add some more concrete value to this noise, and result in images that look less like a mix between multiple objects.

The problem of generating realistic-looking images is complex. Considering how simple the basic generative adversarial network is, it’s done a pretty good job of creating images that look real, at least from a distance.

The code for training and producing these images is up on my Github.