This notebook and code are available on Github.

This notebook illustrates a Tensorflow implementation of the paper “A Neural Algorithm of Artistic Style” which is used to transfer the art style of one picture to another picture’s contents.

If you like to run this notebook, you will need to install TensorFlow, Scipy and Numpy. You will need to download the VGG-19 model. Feel free to play with the constants a bit to get a feel how the bits and pieces play together to affect the final image generated.

1 | |

Overview

We will build a model to paint a picture using a style we desire. The style of painting of one image will be transferred to the content image.

The idea is to use the filter responses from different layers of a convolutional network to build the style. Using filter responses from different layers (ranging from lower to higher) captures from low level details (strokes, points, corners) to high level details (patterns, objects, etc) which we will used to perturb the content image, which gives the final “painted” image:

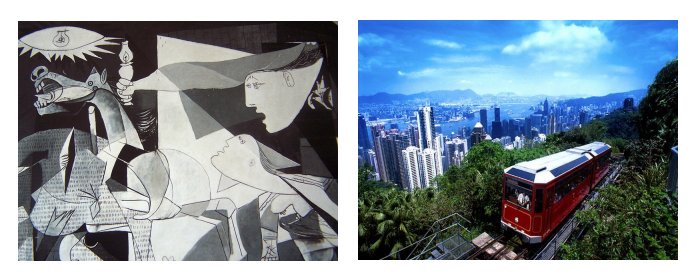

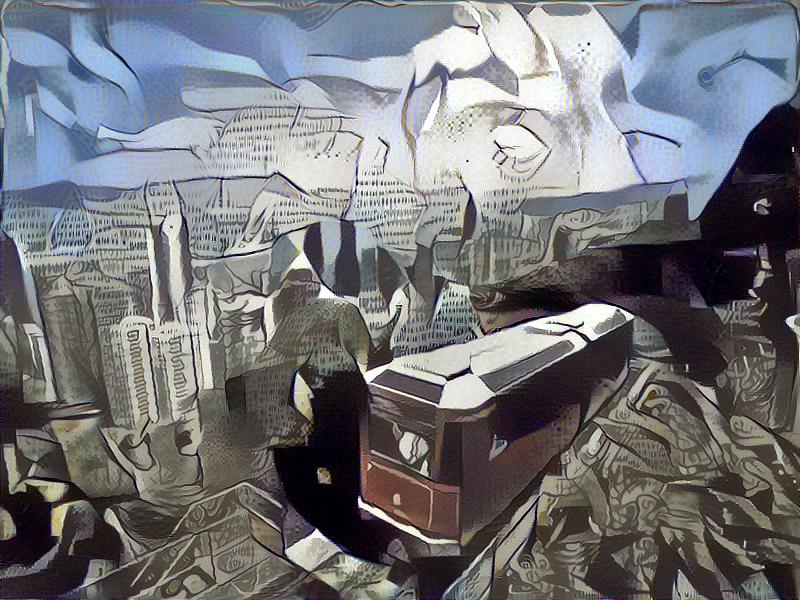

Using Guernica’s style painting and Hong Kong’s Peak Tram to yield this:

Code

We need to define come constants for the image. If you want to use another style or image, just modify the STYLE_IMAGE or CONTENT_IMAGE. For this notebook, I hardcoded the image width to be 800 x 600, but you can easily modify the code to accommodate different sizes.

1 | |

Now we define some constants which is related to the algorithm. Given that the style image and the content image remains the same, these can be tweaked to achieve different outcomes. Comments are added before each constant.

1 | |

Now we need to define the model that “paints” the image. Rather than training a completely new model from scratch, we will use a pre-trained model to achieve our purpose - called “transfer learning”.

We will use the VGG19 model. You can download the VGG19 model from here. The comments below describes the dimensions of the VGG19 model. We will replace the max pooling layers with average pooling layers as the paper suggests, and discard all fully connected layers.

1 | |

Define the equation (1) from the paper to model the content loss. We are only concerned with the “conv4_2” layer of the model.

1 | |

Define the equation (5) from the paper to model the style loss. The style loss is a multi-scale representation. It is a summation from conv1_1 (lower layer) to conv5_1 (higher layer). Intuitively, the style loss across multiple layers captures lower level features (hard strokes, points, etc) to higher level features (styles, patterns, even objects).

You can tune the weights in the STYLE_LAYERS to yield very different results. See the Bonus part at the bottom for more illustrations.

1 | |

Define the rest of the auxiliary functions.

1 | |

Create an TensorFlow session.

1 | |

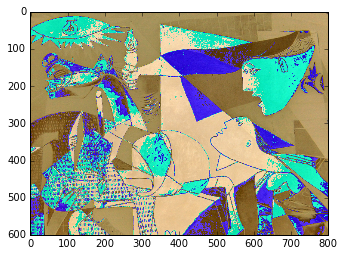

Now we load the content image “Hong Kong”. The model expects an image with MEAN_VALUES subtracted to function correctly. “load_image” already handles this. The showed image will look funny.

1 | |

<matplotlib.image.AxesImage at 0x7fd90c111240>

1 | |

style_image = load_image(STYLE_IMAGE) imshow(style_image[0])

1 | |

Build the model now.

1 | |

{‘conv1_1’: <tf.Tensor ‘Relu:0’ shape=(1, 600, 800, 64) dtype=float32>, ‘conv5_4’: <tf.Tensor ‘Relu_15:0’ shape=(1, 38, 50, 512) dtype=float32>, ‘conv2_2’: <tf.Tensor ‘Relu_3:0’ shape=(1, 300, 400, 128) dtype=float32>, ‘conv4_2’: <tf.Tensor ‘Relu_9:0’ shape=(1, 75, 100, 512) dtype=float32>, ‘avgpool1’: <tf.Tensor ‘AvgPool:0’ shape=(1, 300, 400, 64) dtype=float32>, ‘input’: <tensorflow.python.ops.variables.Variable object at 0x7fd90c0536a0>, ‘conv1_2’: <tf.Tensor ‘Relu_1:0’ shape=(1, 600, 800, 64) dtype=float32>, ‘conv3_3’: <tf.Tensor ‘Relu_6:0’ shape=(1, 150, 200, 256) dtype=float32>, ‘conv3_2’: <tf.Tensor ‘Relu_5:0’ shape=(1, 150, 200, 256) dtype=float32>, ‘avgpool3’: <tf.Tensor ‘AvgPool_2:0’ shape=(1, 75, 100, 256) dtype=float32>, ‘avgpool2’: <tf.Tensor ‘AvgPool_1:0’ shape=(1, 150, 200, 128) dtype=float32>, ‘conv3_1’: <tf.Tensor ‘Relu_4:0’ shape=(1, 150, 200, 256) dtype=float32>, ‘conv4_3’: <tf.Tensor ‘Relu_10:0’ shape=(1, 75, 100, 512) dtype=float32>, ‘conv5_3’: <tf.Tensor ‘Relu_14:0’ shape=(1, 38, 50, 512) dtype=float32>, ‘conv2_1’: <tf.Tensor ‘Relu_2:0’ shape=(1, 300, 400, 128) dtype=float32>, ‘avgpool4’: <tf.Tensor ‘AvgPool_3:0’ shape=(1, 38, 50, 512) dtype=float32>, ‘conv4_4’: <tf.Tensor ‘Relu_11:0’ shape=(1, 75, 100, 512) dtype=float32>, ‘conv4_1’: <tf.Tensor ‘Relu_8:0’ shape=(1, 75, 100, 512) dtype=float32>, ‘conv3_4’: <tf.Tensor ‘Relu_7:0’ shape=(1, 150, 200, 256) dtype=float32>, ‘conv5_2’: <tf.Tensor ‘Relu_13:0’ shape=(1, 38, 50, 512) dtype=float32>, ‘avgpool5’: <tf.Tensor ‘AvgPool_4:0’ shape=(1, 19, 25, 512) dtype=float32>, ‘conv5_1’: <tf.Tensor ‘Relu_12:0’ shape=(1, 38, 50, 512) dtype=float32>}

1 | |

<matplotlib.image.AxesImage at 0x7fd9047c0c18>

1 | |

sess.run(tf.initialize_all_variables())

1 | |

Construct style_loss using style_image.

sess.run(model[‘input’].assign(style_image)) style_loss = style_loss_func(sess, model)

1 | |

From the paper: jointly minimize the distance of a white noise image

from the content representation of the photograph in one layer of

the neywork and the style representation of the painting in a number

of layers of the CNN.

#

The content is built from one layer, while the style is from five

layers. Then we minimize the total_loss, which is the equation 7.

optimizer = tf.train.AdamOptimizer(2.0) train_step = optimizer.minimize(total_loss)

1 | |

array([[[[-38.80082321, 2.36044788, 53.06735229], [-36.208992 , -8.92279434, 52.68152237], [-44.56254196, 2.56879926, 42.36888885], …, [-21.99048233, 6.0762639 , 39.36758041], [-35.85998535, -3.56352782, 44.86796951], [-42.85255051, -6.79411459, 52.11099625]],

[[-35.10824203, -10.4971714 , 38.89696884], [-37.86809921, -5.79524469, 39.4394722 ], [-27.90998077, 1.6464256 , 52.97366714], …, [-44.19208527, -13.92263412, 36.78689194], [-39.15240097, -2.04686642, 49.2306633 ], [-35.7723732 , -12.24501419, 44.17518997]],

[[-43.50813675, -7.48234081, 48.60139465], [-34.67430878, -7.62575102, 36.58321762], [-34.54434586, -15.8774004 , 38.89173126], …, [-32.95817947, -3.49402404, 38.15805054], [-25.93852997, -14.92780209, 49.86390686], [-44.59893417, -8.43691158, 41.84837723]],

…, [[-55.29382324, -57.94036865, -47.33403397], [-47.22367859, -47.09892654, -43.59551239], [-58.79758072, -43.68718719, -52.4673996 ], …, [-51.44662857, -39.4832077 , -46.86067581], [-37.68956375, -39.0056076 , -41.35289383], [-46.67458725, -46.07319641, -42.1647644 ]],

[[-40.65664291, -39.99607086, -50.46044159], [-39.84476471, -47.29466629, -42.65992355], [-46.70065689, -56.47098923, -33.3544693 ], …, [-57.43079376, -49.95788956, -34.21024704], [-42.41124344, -44.70735168, -40.68310928], [-48.97134781, -41.0301857 , -48.75336456]],

[[-46.85499191, -52.87174606, -42.00823975], [-52.52578354, -50.29984665, -49.69490814], [-54.52784348, -39.16690063, -42.48467255], …, [-56.66353226, -34.45768356, -31.35286331], [-42.40987396, -31.03708076, -38.9402504 ], [-44.44059753, -45.45861435, -38.15160751]]]], dtype=float32)

1 | |

Number of iterations to run.

ITERATIONS = 1000 # The art.py uses 5000 iterations, and yields far more appealing results. If you can wait, use 5000.

1 | |

Iteration 0 sum : -4.70464e+06 cost: 7.69395e+10 Iteration 100 sum : -2.9841e+06 cost: 3.54706e+09 Iteration 200 sum : -2.43492e+06 cost: 1.39629e+09 Iteration 300 sum : -1.32645e+06 cost: 7.6313e+08 Iteration 400 sum : -39769.0 cost: 4.84996e+08 Iteration 500 sum : 1.18856e+06 cost: 3.54245e+08 Iteration 600 sum : 2.1015e+06 cost: 3.0398e+08 Iteration 700 sum : 3.1431e+06 cost: 2.04405e+08 Iteration 800 sum : 4.07144e+06 cost: 1.6504e+08 Iteration 900 sum : 4.9075e+06 cost: 1.34236e+08

1 | |

This is our final art for 1000 iterations. It is different the one above which I have ran for 5000 iterations. However, you can certainly generate your own painting now.

Bonus : Style Weights Illustrated

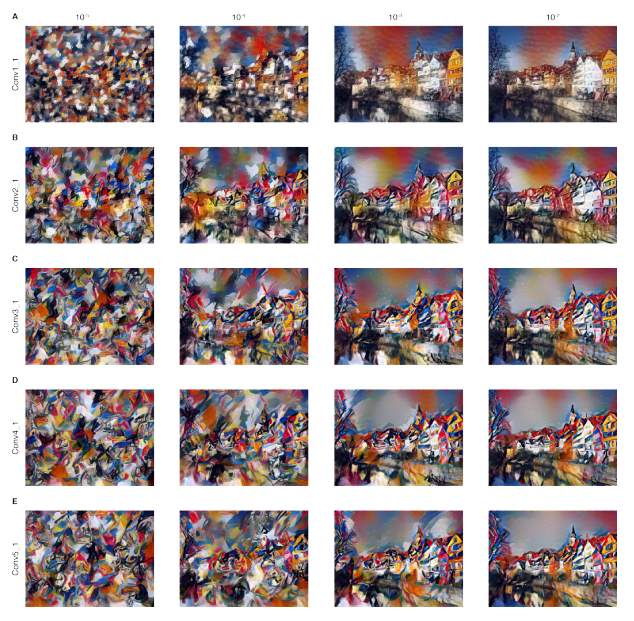

By tweaking the weights in the style layer loss function, you may be able to put more emphasis on lower level features or higher level features yielding quite a different painting. Here is what is shown in the original paper. Using this picture “Composition” as style and “Tubingen” as content:

1 | |

<matplotlib.image.AxesImage at 0x7f092e9add30>

```

The upper leftmost corner indicates a picture that puts more weight on the lower level features, and the resulting image is completely deformed and only included low level features. The lower right uses mostly the higher level features and less lower level features, and the image is less deformed and quite appealing, which is what this notebook is doing as well. Feel free to play with the weights above.