By Sciforce.

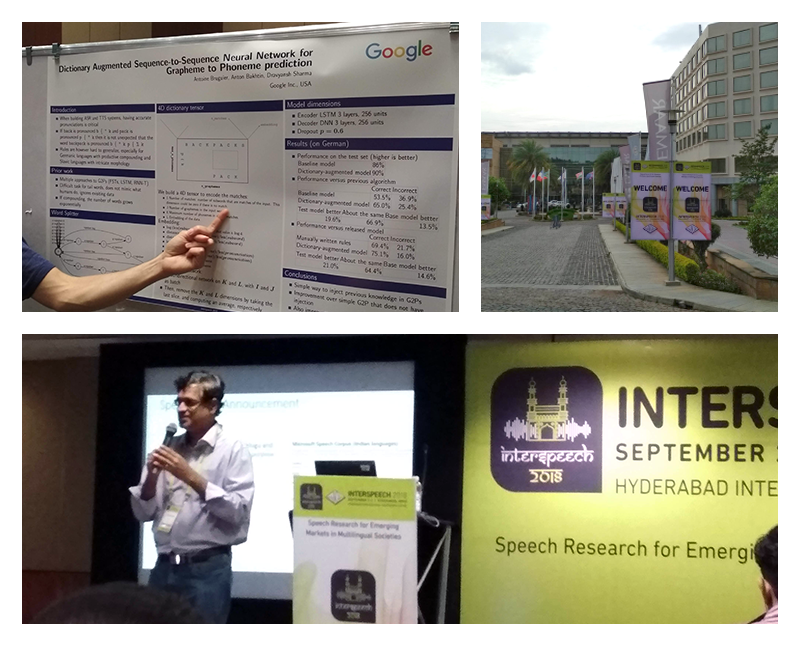

This year the Sciforce team travelled as far as India to attend one of the most important events in the speech processing community, the Interspeech conference. It is a truly scientific conference, where every talk, poster or demo is accompanied by a paper published in the ISCA proceedings. As usual, it covered most speech-related topics and more: automatic speech recognition (ASR) and speech synthesis (TTS), voice conversion and denoising, speaker verification and diarization, spoken dialogue systems, language education and healthcare-related topics.

At a glance

- This year’s keynote was “Speech research for emerging markets in multilingual society”. Together with several sessions on speech technologies for the dozens of languages spoken in India, it signals an important shift from a handful of well-researched languages of developed markets towards broader coverage.
- In line with that, while ASR for endangered languages remains a matter of academic research funded by non-profit organizations, ASR for under-resourced languages with a sufficient number of speakers is becoming attractive to industry.
- End-to-end (attention-based) models are gradually becoming mainstream in speech recognition. More traditional hybrid HMM+DNN models (mostly based on the Kaldi toolkit) nevertheless remain popular and provide state-of-the-art results in many tasks.
- Speech technologies in education are gaining momentum, and healthcare-related speech technologies have already formed a large domain.
- Though Interspeech is a speech processing conference, it overlaps considerably with other areas of ML, such as Natural Language Processing (NLP) and video and image processing. Spoken language understanding, multimodal systems and dialogue agents were widely represented.
- The conference covered some fundamental theoretical aspects of machine learning that apply equally to speech, computer vision and other areas.
- More and more researchers share their code so that their results can be checked and reproduced.
- Finally, ready-to-use open-source solutions were presented, e.g. HALEF and S4D.

Our Top

At the conference, we focused on the application of speech technologies to language education and on more general topics such as automatic speech recognition and learning speech signal representations. We also attended two pre-conference tutorials: End-to-End Models for ASR and Information Theory of Deep Learning.

Tutorial 1: End-To-End Models for Automatic Speech Recognition

This tutorial, given by Rohit Prabhavalkar and Tara Sainath from Google Inc., USA, was undeniably one of the most valuable events of the conference, bringing new ideas and uncovering important details even for experienced specialists.

Conventional pipelines involve several separately trained components such as an acoustic model, a pronunciation model, a language model, and 2nd-pass rescoring for ASR. In contrast, end-to-end models are typically sequence-to-sequence models that output words or graphemes directly and simplify the pipeline greatly.

The tutorial presented several end-to-end ASR models, starting with the earliest one, Connectionist Temporal Classification (CTC). It takes acoustic features as input, passes them through an encoder and outputs a softmax distribution over characters or (sub)word units. Its successor, RNN-T, adds a language model component that is trained jointly.
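To make the CTC output concrete, here is a minimal sketch of greedy CTC decoding: take the most probable symbol in every frame, merge consecutive repeats, then drop the blank symbol. The label set, the `_` blank marker and the toy posteriors are illustrative assumptions, not material from the tutorial.

```python
BLANK = "_"  # assumed marker for the CTC blank symbol


def collapse(path):
    """Merge consecutive repeated symbols, then remove blanks."""
    output = []
    previous = None
    for symbol in path:
        if symbol != previous and symbol != BLANK:
            output.append(symbol)
        previous = symbol
    return "".join(output)


def ctc_greedy_decode(frame_posteriors, labels):
    """Pick the best label per frame and collapse the resulting path."""
    path = []
    for posterior in frame_posteriors:
        best_index = max(range(len(posterior)), key=posterior.__getitem__)
        path.append(labels[best_index])
    return collapse(path)


LABELS = [BLANK, "c", "a", "t"]

# One softmax row per acoustic frame (toy numbers).
toy_posteriors = [
    [0.10, 0.70, 0.10, 0.10],  # c
    [0.20, 0.60, 0.10, 0.10],  # c (repeat, merged)
    [0.80, 0.10, 0.05, 0.05],  # blank
    [0.10, 0.10, 0.70, 0.10],  # a
    [0.60, 0.10, 0.20, 0.10],  # blank
    [0.10, 0.10, 0.10, 0.70],  # t
]

print(ctc_greedy_decode(toy_posteriors, LABELS))  # -> cat
```

The blank symbol is what lets the model emit genuinely doubled letters: the path `a _ a` collapses to `aa`, while `a a` collapses to `a`.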

Yet most state-of-the-art end-to-end solutions use attention-based models. The attention mechanism summarizes the encoder features relevant to predicting the next label. Most modern architectures are improvements on the attention-based models proposed in 2015 by Chan et al. (Listen, Attend and Spell, LAS) and Chorowski et al. The LAS model consists of an encoder (similar to an acoustic model) with a pyramidal structure that reduces the number of time steps, an attention (alignment) model, and a decoder, which is analogous to a pronunciation or language model. LAS offers good results without an additional language model and is able to recognize out-of-vocabulary words. However, to decrease the word error rate (WER) further, special techniques are used, such as shallow fusion, in which a separately trained LM is integrated by combining its scores with the model’s output scores during decoding.
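As a rough illustration of what the attention step computes, the sketch below scores each encoder frame against the current decoder state, normalizes the scores with a softmax and returns the weighted sum of encoder features (the context vector). Dot-product scoring and the toy vectors are assumptions made for brevity; LAS and its successors use learned scoring functions.

```python
import math


def attend(decoder_state, encoder_features):
    """Return (attention weights, context vector) for one decoding step."""
    # Similarity between the decoder state and every encoder frame.
    scores = [
        sum(q * h for q, h in zip(decoder_state, frame))
        for frame in encoder_features
    ]
    # Numerically stable softmax over time steps.
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    weights = [e / total for e in exps]
    # Context vector: weighted sum of encoder frames.
    dim = len(encoder_features[0])
    context = [
        sum(w * frame[d] for w, frame in zip(weights, encoder_features))
        for d in range(dim)
    ]
    return weights, context


# Three encoder frames with two features each (toy values).
encoder_features = [[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0]]
decoder_state = [0.0, 4.0]

weights, context = attend(decoder_state, encoder_features)
print(weights)
print(context)
```

Here the decoder state is most similar to the second frame, so that frame receives most of the weight and dominates the context vector passed to the decoder.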

Tutorial 2: Information theory approach to Deep Learning

One of the most notable events of this year’s Interspeech was a tutorial by Naftali Tishby from the Hebrew University of Jerusalem. Although the author first proposed this approach more than a decade ago and it is familiar to the community, and although the tutorial was delivered as a Skype teleconference, there were no free seats at the venue.

Naftali Tishby started with an overview of deep learning models and information theory. He covered information-plane-based analysis, described the learning dynamics of neural networks and other models and, finally, showed the impact of multiple layers on the learning process.

Although the tutorial was highly theoretical and required a mathematical background to follow, deep learning practitioners can take away the following useful tips:

- The information plane is a useful tool for analyzing the behavior of complex DNNs.
- If a model can be represented as a Markov chain, it is likely to have predefined learning dynamics in the information plane.
- There are two learning phases: capturing the relation between inputs and targets, and compressing the representation.
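A point in the information plane is a pair of mutual information values for a representation T: I(X;T), how much T retains about the input, and I(T;Y), how much it retains about the target. The sketch below estimates both from discrete samples for a toy problem. The data and the two candidate representations are hypothetical and only illustrate the idea of compression; this is not the estimation procedure used in the tutorial.

```python
import math
from collections import Counter


def mutual_information(pairs):
    """Mutual information in bits, estimated from equally weighted (a, b) samples."""
    n = len(pairs)
    joint = Counter(pairs)
    left = Counter(a for a, _ in pairs)
    right = Counter(b for _, b in pairs)
    mi = 0.0
    for (a, b), count in joint.items():
        p_ab = count / n
        # p(a, b) / (p(a) * p(b)) written with raw counts.
        mi += p_ab * math.log2(count * n / (left[a] * right[b]))
    return mi


def information_plane_point(inputs, representation, targets):
    """Return the pair (I(X;T), I(T;Y)) for one representation T."""
    i_xt = mutual_information(list(zip(inputs, representation)))
    i_ty = mutual_information(list(zip(representation, targets)))
    return i_xt, i_ty


inputs = [0, 1, 2, 3]              # X: four equally likely inputs
targets = [0, 0, 1, 1]             # Y: label depends only on whether X >= 2
identity = list(inputs)            # T keeps everything about X
compressed = [x // 2 for x in inputs]  # T keeps only what Y needs

print(information_plane_point(inputs, identity, targets))
print(information_plane_point(inputs, compressed, targets))
```

Both representations carry the same information about the target, but the compressed one retains less about the input, which is the direction the second learning phase is said to move in.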

Though his research covers a very small subset of modern neural network architectures, N. Tishby’s theory has spawned a lot of discussion in the deep learning community.

Speech processing and education

There are two major speech-related tasks for foreign language learners: computer-aided language learning (CALL) and computer-aided pronunciation training (CAPT). The main difference is that CALL applications focus on checking vocabulary, grammar and semantics, whereas CAPT applications assess pronunciation.

Most CALL solutions use ASR in their back end. However, a conventional ASR system trained on native speech is not suitable for this task because of students’ accents, language errors, and many incorrect or out-of-vocabulary (OOV) words. Therefore, techniques from Natural Language Processing (NLP) and Natural Language Understanding (NLU) have to be applied to determine the meaning of the student’s utterance and detect errors. Most systems are trained on in-house corpora of non-native speech from speakers who share a fixed native language.

Most CAPT papers use ASR models in a specific way: for forced alignment. A student’s waveform is aligned in time with the textual prompt, and the confidence score for each phone is used to estimate how well the student pronounced it. However, some novel approaches were also presented; for example, one uses the relative distances between different phones to assess the student’s language proficiency and is trained end to end.

Bonus: the CALL shared task is an annual competition based on a real-world task. Participants from both academia and industry presented solutions that were benchmarked on an open dataset consisting of two parts: speech processing and text processing. Both parts contain German prompts and a student’s English answers. The language (vocabulary, grammar) and the meaning of the responses have been assessed independently by human experts. The task is open-ended, i.e. there are multiple ways to say the same thing, and only a few of them are specified in the dataset.
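The confidence-based scoring described above can be sketched as follows. It assumes a forced aligner has already produced phone segments and that an acoustic model provides per-frame log posteriors. The data layout, the function names and the 0.5 posterior threshold are illustrative assumptions rather than the method of any particular paper.

```python
import math


def phone_confidences(frame_log_posteriors, alignment):
    """Average log posterior of each prompted phone over its aligned frames.

    frame_log_posteriors: one dict per frame, mapping phone -> log posterior.
    alignment: (phone, start_frame, end_frame) tuples, end exclusive.
    """
    scores = []
    for phone, start, end in alignment:
        segment = frame_log_posteriors[start:end]
        mean_log_post = sum(frame[phone] for frame in segment) / len(segment)
        scores.append((phone, mean_log_post))
    return scores


def flag_mispronounced(scores, threshold=math.log(0.5)):
    """Return phones whose confidence falls below the (assumed) threshold."""
    return [phone for phone, score in scores if score < threshold]


# Toy example: the prompt expects "k" then "ae", but the acoustic model
# keeps hearing "k" during the frames aligned to "ae".
frames = [
    {"k": math.log(0.9), "ae": math.log(0.1)},
    {"k": math.log(0.9), "ae": math.log(0.1)},
    {"k": math.log(0.8), "ae": math.log(0.2)},
    {"k": math.log(0.8), "ae": math.log(0.2)},
]
alignment = [("k", 0, 2), ("ae", 2, 4)]

scores = phone_confidences(frames, alignment)
print(flag_mispronounced(scores))  # -> ['ae']
```

In practice the threshold would be tuned per phone on annotated learner data, since some phones are systematically harder for the acoustic model than others.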

ASR

This year, A. Zeyer and colleagues presented a new ASR model showing the best results reported to date on the LibriSpeech corpus (1000 hours of clean English speech): the reported WER is 3.82%. This is another end-to-end model, an improvement on LAS. It uses Byte-Pair Encoding (BPE) subword units, with 10K subword targets in total.
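For readers unfamiliar with byte-pair encoding, the following is a minimal sketch of how BPE merge operations are learned from a word list: repeatedly find the most frequent adjacent symbol pair and merge it into a new symbol. The toy corpus and the `</w>` end-of-word marker are illustrative assumptions; a real system would learn thousands of merges from the training transcripts.

```python
from collections import Counter

END = "</w>"  # assumed end-of-word marker


def merge_pair(symbols, pair):
    """Replace every adjacent occurrence of `pair` with one merged symbol."""
    merged = []
    i = 0
    while i < len(symbols):
        if i + 1 < len(symbols) and (symbols[i], symbols[i + 1]) == pair:
            merged.append(symbols[i] + symbols[i + 1])
            i += 2
        else:
            merged.append(symbols[i])
            i += 1
    return tuple(merged)


def learn_bpe(words, num_merges):
    """Return the learned merge list and the final segmented vocabulary."""
    vocab = Counter(tuple(word) + (END,) for word in words)
    merges = []
    for _ in range(num_merges):
        # Count adjacent symbol pairs, weighted by word frequency.
        pairs = Counter()
        for symbols, freq in vocab.items():
            for a, b in zip(symbols, symbols[1:]):
                pairs[(a, b)] += freq
        if not pairs:
            break
        best = max(pairs, key=pairs.get)
        merges.append(best)
        new_vocab = Counter()
        for symbols, freq in vocab.items():
            new_vocab[merge_pair(symbols, best)] += freq
        vocab = new_vocab
    return merges, vocab


merges, vocab = learn_bpe(["ab", "ab", "abc"], num_merges=2)
print(merges)  # -> [('a', 'b'), ('ab', '</w>')]
print(dict(vocab))
```

Frequent words end up as single units while rare words are split into smaller pieces, which is how a fixed inventory of subword targets can still spell out unseen words.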

For a smaller English corpus, Switchboard (300 hours of telephone-quality speech), the best result, 7.5% WER, was shown by a modification of the lattice-free MMI (Maximum Mutual Information) approach by H. Hadian et al.
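Since WER is the figure quoted for both corpora, here is a small sketch of how it is usually computed: the word-level edit distance (substitutions, deletions and insertions) between the reference transcript and the recognizer output, divided by the number of reference words. The example sentences are made up, and the function assumes a non-empty reference.

```python
def word_error_rate(reference, hypothesis):
    """Word-level Levenshtein distance divided by the reference length."""
    ref = reference.split()
    hyp = hypothesis.split()
    # previous[j] = edit distance between the processed reference prefix
    # and the first j hypothesis words.
    previous = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        current = [i]
        for j, hyp_word in enumerate(hyp, start=1):
            current.append(min(
                previous[j] + 1,                          # deletion
                current[j - 1] + 1,                       # insertion
                previous[j - 1] + (ref_word != hyp_word)  # substitution or match
            ))
        previous = current
    return previous[-1] / len(ref)


reference = "the cat sat on the mat"
hypothesis = "the cat sit on mat"

# One substitution (sat -> sit) and one deletion (the): 2 errors in 6 words.
print(f"{word_error_rate(reference, hypothesis):.1%}")  # -> 33.3%
```

Because insertions count as errors, WER can exceed 100% when the hypothesis is much longer than the reference.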

Despite the success of end-to-end neural network approaches, one of their main shortcomings is that they need huge datasets for training. For endangered languages with few native speakers, creating such a dataset is close to impossible. As in previous years, there was a session on ASR for such languages. The most popular approach to this task is transfer learning, i.e. training a model on well-supported language(s) and retraining it on an under-resourced one. Unsupervised discovery of (sub)word units is another widely used approach.

A somewhat different task is ASR for under-resourced languages. In this case, a relatively small dataset (dozens of hours) is usually available. This year, Microsoft organized a challenge on ASR for Indian languages and even shared a dataset containing circa 40 hours of training material and 5 hours of test data in Tamil, Telugu and Gujarati. The winner was a system named “BUT Jilebi”, which uses Kaldi-based ASR with the LF-MMI objective, speaker adaptation using feature-space maximum likelihood linear regression (fMLLR), and data augmentation with speed perturbation.
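Speed perturbation creates extra training copies of each utterance by resampling the waveform so that it plays slightly faster or slower. The sketch below does this with plain linear interpolation. The factors 0.9, 1.0 and 1.1 are the values commonly used in Kaldi recipes; the interpolation method and the synthetic signal are simplifications for illustration, not a description of the winning system.

```python
import math


def speed_perturb(samples, factor):
    """Resample a waveform so that it plays `factor` times faster.

    factor > 1 shortens the signal, factor < 1 lengthens it.
    """
    n_out = int(len(samples) / factor)
    last = len(samples) - 1
    output = []
    for i in range(n_out):
        position = i * factor            # fractional index into the input
        lo = min(int(position), last)
        hi = min(lo + 1, last)
        frac = position - lo
        # Linear interpolation between the two neighbouring input samples.
        output.append((1.0 - frac) * samples[lo] + frac * samples[hi])
    return output


# Synthetic 100-sample sine wave standing in for a real utterance.
waveform = [math.sin(2 * math.pi * 5 * t / 100) for t in range(100)]

augmented = {factor: speed_perturb(waveform, factor) for factor in (0.9, 1.0, 1.1)}
for factor, signal in augmented.items():
    print(factor, len(signal))
```

With three factors the training set triples in size, which matters most when only a few dozen hours of transcribed speech are available.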

Other topics

This year we saw many presentations on voice conversion. For example, one voice conversion tool trained on the VCTK corpus (40 hours of native English speech) computes the speaker embedding or i-vector of a new target speaker from just a single utterance of that speaker. The results sound a bit robotic, yet the target voice is recognizable.

Another interesting approach to word-level speech processing is Speech2Vec. It resembles Word2Vec, which is widely used in natural language processing, and learns fixed-length embeddings for variable-length spoken word segments. Under the hood, Speech2Vec uses an encoder-decoder model with attention.

Other topics included speech synthesis, manner discrimination, unsupervised phone recognition and many more.

Conclusion

With the development of deep learning, the Interspeech conference, originally intended for the speech processing and DSP community, is gradually transforming into a broader platform for communication among machine learning scientists irrespective of their field of interest. It is becoming a place to share common ideas across different areas of machine learning and to inspire multimodal solutions in which speech processing occurs together (and sometimes in the same pipeline) with video and natural language processing. Sharing ideas between fields undoubtedly speeds up progress, and this year’s Interspeech showed several examples of such sharing.

Further reading for the fellow geeks and crazy scientists

Tutorial 1:

- A. Graves, S. Fernández, F. Gomez, J. Schmidhuber. Connectionist Temporal Classification: Labelling Unsegmented Sequence Data with Recurrent Neural Networks. ICML 2006.
- A. Graves. Sequence Transduction with Recurrent Neural Networks. Representation Learning Workshop, ICML 2012.
- W. Chan, N. Jaitly, Q. V. Le, O. Vinyals. Listen, Attend and Spell. 2015.
- J. Chorowski, D. Bahdanau, D. Serdyuk, K. Cho, Y. Bengio. Attention-Based Models for Speech Recognition. 2015.
- G. Pundak, T. Sainath, R. Prabhavalkar, A. Kannan, D. Zhao. Deep Context: End-to-end Contextual Speech Recognition. 2018.

Tutorial 2:

- N. Tishby, F. Pereira, W. Bialek. The Information Bottleneck Method. Invited paper, in “Proceedings of 37th Annual Allerton Conference on Communication, Control and Computing”, pages 368–377, 1999.

Speech processing and education:

- Evanini, K., Timpe-Laughlin, V., Tsuprun, E., Blood, I., Lee, J., Bruno, J., Ramanarayanan, V., Lange, P., Suendermann-Oeft, D. Game-based Spoken Dialog Language Learning Applications for Young Students. Proc. Interspeech 2018, 548–549.
- Nguyen, H., Chen, L., Prieto, R., Wang, C., Liu, Y. Liulishuo’s System for the Spoken CALL Shared Task 2018. Proc. Interspeech 2018, 2364–2368.
- Tu, M., Grabek, A., Liss, J., Berisha, V. Investigating the Role of L1 in Automatic Pronunciation Evaluation of L2 Speech. Proc. Interspeech 2018, 1636–1640.
- Kyriakopoulos, K., Knill, K., Gales, M. A Deep Learning Approach to Assessing Non-native Pronunciation of English Using Phone Distances. Proc. Interspeech 2018, 1626–1630.

ASR:

- Zeyer, A., Irie, K., Schlüter, R., Ney, H. Improved Training of End-to-end Attention Models for Speech Recognition. Proc. Interspeech 2018, 7–11.
- Hadian, H., Sameti, H., Povey, D., Khudanpur, S. End-to-end Speech Recognition Using Lattice-free MMI. Proc. Interspeech 2018, 12–16.
- He, D., Lim, B.P., Yang, X., Hasegawa-Johnson, M., Chen, D. Improved ASR for Under-resourced Languages through Multi-task Learning with Acoustic Landmarks. Proc. Interspeech 2018, 2618–2622.
- Chen, W., Hasegawa-Johnson, M., Chen, N.F. Topic and Keyword Identification for Low-resourced Speech Using Cross-Language Transfer Learning. Proc. Interspeech 2018, 2047–2051.
- Hermann, E., Goldwater, S. Multilingual Bottleneck Features for Subword Modeling in Zero-resource Languages. Proc. Interspeech 2018.
- Feng, S., Lee, T. Exploiting Speaker and Phonetic Diversity of Mismatched Language Resources for Unsupervised Subword Modeling. Proc. Interspeech 2018, 2673–2677.
- Godard, P., Boito, M.Z., Ondel, L., Berard, A., Yvon, F., Villavicencio, A., Besacier, L. Unsupervised Word Segmentation from Speech with Attention. Proc. Interspeech 2018, 2678–2682.
- Glarner, T., Hanebrink, P., Ebbers, J., Haeb-Umbach, R. Full Bayesian Hidden Markov Model Variational Autoencoder for Acoustic Unit Discovery. Proc. Interspeech 2018, 2688–2692.
- Holzenberger, N., Du, M., Karadayi, J., Riad, R., Dupoux, E. Learning Word Embeddings: Unsupervised Methods for Fixed-size Representations of Variable-length Speech Segments. Proc. Interspeech 2018, 2683–2687.
- Pulugundla, B., Baskar, M.K., Kesiraju, S., Egorova, E., Karafiát, M., Burget, L., Černocký, J. BUT System for Low Resource Indian Language ASR. Proc. Interspeech 2018, 3182–3186.

Other topics:

- Liu, S., Zhong, J., Sun, L., Wu, X., Liu, X., Meng, H. Voice Conversion Across Arbitrary Speakers Based on a Single Target-Speaker Utterance. Proc. Interspeech 2018, 496–500.
- Chung, Y., Glass, J. Speech2Vec: A Sequence-to-Sequence Framework for Learning Word Embeddings from Speech. Proc. Interspeech 2018, 811–815.
- Lee, J.Y., Cheon, S.J., Choi, B.J., Kim, N.S., Song, E. Acoustic Modeling Using Adversarially Trained Variational Recurrent Neural Network for Speech Synthesis. Proc. Interspeech 2018, 917–921.
- Tjandra, A., Sakti, S., Nakamura, S. Machine Speech Chain with One-shot Speaker Adaptation. Proc. Interspeech 2018, 887–891.
- Renkens, V., van Hamme, H. Capsule Networks for Low Resource Spoken Language Understanding. Proc. Interspeech 2018, 601–605.
- Prasad, R., Yegnanarayana, B. Identification and Classification of Fricatives in Speech Using Zero Time Windowing Method. Proc. Interspeech 2018, 187–191.
- Liu, D., Chen, K., Lee, H., Lee, L. Completely Unsupervised Phoneme Recognition by Adversarially Learning Mapping Relationships from Audio Embeddings. Proc. Interspeech 2018, 3748–3752.

Original. Reposted with permission.
